5.3: CHEM ATLAS_3

\( \newcommand{\vecs}[1]{\overset { \scriptstyle \rightharpoonup} {\mathbf{#1}} } \)

\( \newcommand{\vecd}[1]{\overset{-\!-\!\rightharpoonup}{\vphantom{a}\smash {#1}}} \)

\( \newcommand{\id}{\mathrm{id}}\) \( \newcommand{\Span}{\mathrm{span}}\)

\( \newcommand{\kernel}{\mathrm{null}\,}\) \( \newcommand{\range}{\mathrm{range}\,}\)

\( \newcommand{\RealPart}{\mathrm{Re}}\) \( \newcommand{\ImaginaryPart}{\mathrm{Im}}\)

\( \newcommand{\Argument}{\mathrm{Arg}}\) \( \newcommand{\norm}[1]{\| #1 \|}\)

\( \newcommand{\inner}[2]{\langle #1, #2 \rangle}\)

\( \newcommand{\Span}{\mathrm{span}}\) \( \newcommand{\AA}{\unicode[.8,0]{x212B}}\)

\( \newcommand{\vectorA}[1]{\vec{#1}} % arrow\)

\( \newcommand{\vectorAt}[1]{\vec{\text{#1}}} % arrow\)

\( \newcommand{\vectorB}[1]{\overset { \scriptstyle \rightharpoonup} {\mathbf{#1}} } \)

\( \newcommand{\vectorC}[1]{\textbf{#1}} \)

\( \newcommand{\vectorD}[1]{\overrightarrow{#1}} \)

\( \newcommand{\vectorDt}[1]{\overrightarrow{\text{#1}}} \)

\( \newcommand{\vectE}[1]{\overset{-\!-\!\rightharpoonup}{\vphantom{a}\smash{\mathbf {#1}}}} \)

\(\newcommand{\avec}{\mathbf a}\) \(\newcommand{\bvec}{\mathbf b}\) \(\newcommand{\cvec}{\mathbf c}\) \(\newcommand{\dvec}{\mathbf d}\) \(\newcommand{\dtil}{\widetilde{\mathbf d}}\) \(\newcommand{\evec}{\mathbf e}\) \(\newcommand{\fvec}{\mathbf f}\) \(\newcommand{\nvec}{\mathbf n}\) \(\newcommand{\pvec}{\mathbf p}\) \(\newcommand{\qvec}{\mathbf q}\) \(\newcommand{\svec}{\mathbf s}\) \(\newcommand{\tvec}{\mathbf t}\) \(\newcommand{\uvec}{\mathbf u}\) \(\newcommand{\vvec}{\mathbf v}\) \(\newcommand{\wvec}{\mathbf w}\) \(\newcommand{\xvec}{\mathbf x}\) \(\newcommand{\yvec}{\mathbf y}\) \(\newcommand{\zvec}{\mathbf z}\) \(\newcommand{\rvec}{\mathbf r}\) \(\newcommand{\mvec}{\mathbf m}\) \(\newcommand{\zerovec}{\mathbf 0}\) \(\newcommand{\onevec}{\mathbf 1}\) \(\newcommand{\real}{\mathbb R}\) \(\newcommand{\twovec}[2]{\left[\begin{array}{r}#1 \\ #2 \end{array}\right]}\) \(\newcommand{\ctwovec}[2]{\left[\begin{array}{c}#1 \\ #2 \end{array}\right]}\) \(\newcommand{\threevec}[3]{\left[\begin{array}{r}#1 \\ #2 \\ #3 \end{array}\right]}\) \(\newcommand{\cthreevec}[3]{\left[\begin{array}{c}#1 \\ #2 \\ #3 \end{array}\right]}\) \(\newcommand{\fourvec}[4]{\left[\begin{array}{r}#1 \\ #2 \\ #3 \\ #4 \end{array}\right]}\) \(\newcommand{\cfourvec}[4]{\left[\begin{array}{c}#1 \\ #2 \\ #3 \\ #4 \end{array}\right]}\) \(\newcommand{\fivevec}[5]{\left[\begin{array}{r}#1 \\ #2 \\ #3 \\ #4 \\ #5 \\ \end{array}\right]}\) \(\newcommand{\cfivevec}[5]{\left[\begin{array}{c}#1 \\ #2 \\ #3 \\ #4 \\ #5 \\ \end{array}\right]}\) \(\newcommand{\mattwo}[4]{\left[\begin{array}{rr}#1 \amp #2 \\ #3 \amp #4 \\ \end{array}\right]}\) \(\newcommand{\laspan}[1]{\text{Span}\{#1\}}\) \(\newcommand{\bcal}{\cal B}\) \(\newcommand{\ccal}{\cal C}\) \(\newcommand{\scal}{\cal S}\) \(\newcommand{\wcal}{\cal W}\) \(\newcommand{\ecal}{\cal E}\) \(\newcommand{\coords}[2]{\left\{#1\right\}_{#2}}\) \(\newcommand{\gray}[1]{\color{gray}{#1}}\) \(\newcommand{\lgray}[1]{\color{lightgray}{#1}}\) 
\(\newcommand{\rank}{\operatorname{rank}}\) \(\newcommand{\row}{\text{Row}}\) \(\newcommand{\col}{\text{Col}}\) \(\renewcommand{\row}{\text{Row}}\) \(\newcommand{\nul}{\text{Nul}}\) \(\newcommand{\var}{\text{Var}}\) \(\newcommand{\corr}{\text{corr}}\) \(\newcommand{\len}[1]{\left|#1\right|}\) \(\newcommand{\bbar}{\overline{\bvec}}\) \(\newcommand{\bhat}{\widehat{\bvec}}\) \(\newcommand{\bperp}{\bvec^\perp}\) \(\newcommand{\xhat}{\widehat{\xvec}}\) \(\newcommand{\vhat}{\widehat{\vvec}}\) \(\newcommand{\uhat}{\widehat{\uvec}}\) \(\newcommand{\what}{\widehat{\wvec}}\) \(\newcommand{\Sighat}{\widehat{\Sigma}}\) \(\newcommand{\lt}{<}\) \(\newcommand{\gt}{>}\) \(\newcommand{\amp}{&}\) \(\definecolor{fillinmathshade}{gray}{0.9}\)

How This Connects: Unit 3, Lectures 21-30

The purpose of this document is to serve as a guide and resource that gives you a quick overview of each lecture. For each lecture, there is a summary of the main topics covered, the Why This Matters moment, and the new Why This Employs section, plus a few example problems. So why did we make this? We hope it’s useful to get a good snapshot of any given lecture. Whether you couldn’t make it to a lecture or you couldn’t stop thinking about a lecture, this is a way to quickly get a sense of the content. It also gives me a chance to provide additional details that I may not have time for in the Why This Matters example, and also it lets me try out the Why This Employs section, which I certainly will not have time to discuss much in the lecture. Hopefully you find it useful!

One point about these lecture summaries. Please note that the lecture summaries are not meant to be a substitute for lecture notes. If you were to only read these summaries and not go to lecture, yes you’d get a good sense of the lecture from a very high level view, but no, you wouldn’t get enough out of it for it to be your only resource to learn the material!

Below is an image of the Exam 3 Concept Map. It shows how the different aspects of the course fit together: you have lots of resources! The Practice Problems, Recitations, Goodie Bags, and Lectures are ungraded resources to help you prepare for the quizzes and exams. All of the material listed on this concept map is fair game for Exam 3.

Lecture 21: Bragg’s Law and x-ray Diffraction

Summary

X-ray diffraction (XRD) is a method used for characterizing solids. It relies on the diffraction of x-rays upon striking crystal planes (the Miller planes we’ve learned about!). By assuming that each plane of atoms is continuous, and that the planes reflect the incoming x-rays such that the incident angle and the reflected angle are equal, the Braggs derived the equation that bears their name and relates the distance between repeating planes (\(d\)) and the x-ray angle of incidence (\(\theta\)) to the x-ray wavelength (\(\lambda\)):

\(2 d \sin \theta=n \lambda\)

Two x-rays striking equivalent Miller planes with the same angle of incidence will constructively interfere if the additional distance that one of them travels is equal to the wavelength of the x-ray. Quantitatively, if \(\lambda=2 d \sin \theta\), the intensity of the outgoing x-rays with wavelength \(\lambda\) is enhanced. More generally, constructive interference occurs whenever the path length difference is an integer multiple of the wavelength: \(2 d \sin \theta=n \lambda\) for integer \(n\). For 3.091, we'll assume \(n=1\). When constructive interference occurs, a signal will reach the detector in the XRD machine and a peak will be observed in a plot of the x-ray intensity. For destructive interference, no peak will be observed. Knowing the angle that gives rise to a peak as well as the wavelength of the incident x-rays allows us to obtain the distance between the planes that produced the reflection. With \(n=1\), this is known as the Bragg condition:

\(2 d_{h k l} \sin \theta_{h k l}=\lambda\)

For a given Miller plane, denoted by \((h k l)\), the Bragg condition is satisfied by a pair \((d, \theta)\) of inter-planar spacing and incident angle.
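To make the Bragg condition concrete, here is a minimal Python sketch that converts between a measured peak position and an inter-planar spacing. The function names and the choice to pass the detector angle \(2\theta\) in degrees are assumptions made for illustration; they do not come from the lecture.

```python
import math


def bragg_spacing(two_theta_deg, wavelength, n=1):
    """Return the spacing d from n * lambda = 2 * d * sin(theta).

    two_theta_deg is the detector angle 2*theta in degrees; d is returned
    in the same length unit as wavelength (for example angstroms).
    """
    theta = math.radians(two_theta_deg / 2.0)
    return n * wavelength / (2.0 * math.sin(theta))


def bragg_two_theta(d, wavelength, n=1):
    """Return the detector angle 2*theta (degrees) at which spacing d reflects."""
    s = n * wavelength / (2.0 * d)
    if s > 1.0:
        # sin(theta) cannot exceed 1, so this spacing cannot diffract
        raise ValueError("spacing too small to diffract at this wavelength")
    return 2.0 * math.degrees(math.asin(s))


if __name__ == "__main__":
    # Illustrative numbers only: a 2.0 angstrom spacing and 1.54 angstrom x-rays
    angle = bragg_two_theta(2.0, 1.54)
    print(f"2*theta = {angle:.2f} degrees")
    print(f"recovered d = {bragg_spacing(angle, 1.54):.3f} angstrom")
```

Note that a spacing smaller than \(\lambda / 2\) raises an error, which reflects the fact that such planes can never satisfy the Bragg condition.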

For the cubic crystal structures (SC, BCC, and FCC), some reflections lead to destructive interference even when the Bragg condition is met, because of crystal symmetry. The pattern of peak absences was used to derive a set of rules called selection rules, which allow us to know, or at least narrow down the possibilities of, the crystal structure of a material based on its XRD peaks. For the case of SC, there are no rules: any plane is allowed and there are no forbidden reflections.

For the case of \(\mathrm{BCC}\), allowed reflections are those where \(\mathrm{h}+\mathrm{k}+\mathrm{l}\) is an even number. Forbidden reflections are those for which \(\mathrm{h}+\mathrm{k}+\mathrm{l}\) is an odd number. For the case of \(\mathrm{FCC}\), allowed reflections are those where \(\mathrm{h}, \mathrm{k}, \mathrm{l}\) are all odd or \(\mathrm{h}, \mathrm{k}, \mathrm{l}\) are all even. Forbidden reflections of FCC are those where \(\mathrm{h}, \mathrm{k}, \mathrm{l}\) are mixed odd/even.
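The selection rules can be written as a short parity check. The sketch below is one possible way to encode them in Python; the function names and the `max_index` cutoff are hypothetical choices for illustration.

```python
def is_allowed(structure, h, k, l):
    """Apply the cubic selection rules stated in the text to the plane (h k l)."""
    structure = structure.upper()
    if structure == "SC":
        return True  # no forbidden reflections
    if structure == "BCC":
        return (h + k + l) % 2 == 0  # h + k + l must be even
    if structure == "FCC":
        # all odd or all even: the three indices share one parity
        return len({h % 2, k % 2, l % 2}) == 1
    raise ValueError(f"unknown structure: {structure}")


def allowed_reflections(structure, max_index=3):
    """List one representative (h k l) per allowed value of h^2 + k^2 + l^2.

    Only h >= k >= l >= 0 is scanned, and the output is sorted by
    h^2 + k^2 + l^2, which is the order in which peaks appear as the
    diffraction angle increases.
    """
    seen = {}
    for h in range(max_index + 1):
        for k in range(h + 1):
            for l in range(k + 1):
                if (h, k, l) == (0, 0, 0):
                    continue
                if is_allowed(structure, h, k, l):
                    seen.setdefault(h * h + k * k + l * l, (h, k, l))
    return [seen[s] for s in sorted(seen)]


if __name__ == "__main__":
    for name in ("SC", "BCC", "FCC"):
        print(name, allowed_reflections(name, max_index=2))
```

With `max_index=2`, the FCC list starts (1 1 1), (2 0 0), (2 2 0) and the BCC list starts (1 1 0), (2 0 0), (2 1 1), which is the ordering used when indexing a pattern.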

Why this matters

For solids, structure can be as important as the chemistry itself, and they are deeply connected. When I look up the crystal structure of an element in the Periodic Table I see what it is for the ground or lowest energy state of that element. This is the overall “happy place,” energetically speaking, of the material. But materials can take on other, metastable structures and be very happy there, too. And Why This Matters is that the properties can be completely different depending on which crystal structure the material takes, and XRD is the single most important characterization method we have to determine crystal structure. We’ve already seen the difference between graphite and diamond, which contain the same exact element (carbon) but just arranged in a different structure. The same is true for, well, pretty much everything. Take another element, iron, as an example.

Here’s a phase diagram for iron. As you may remember from Lecture 14, a phase diagram is a plot of the different phases of a material as a function of some variables, in this case pressure and temperature. Notice that at normal, or “ambient,” temperature (\(\approx 300\mathrm{K}\)) and pressure (\(\approx 1 \mathrm{bar}\)) conditions, iron is a \(\mathrm{BCC}\) crystal. This is also what you’ll find if you look up its crystal structure in the PT. But notice from reading the phase diagram that if we raise the temperature it becomes \(\mathrm{FCC}\), and if we raise the pressure it goes into the HCP (it’s not cubic so we haven’t covered it) phase. Fun fact: if you keep raising the temperature eventually it will go back to being \(\mathrm{BCC}\).

The reason all of this matters is that the structure changes the properties. In the case of iron, the element’s magnetic properties are affected. If it’s FCC then it will be “antiferromagnetic” as opposed to the BCC “ferromagnetic” behavior. I recommend taking a magnetics course in the future to learn more about these terms! This has huge implications in magnetic technologies. And I know you’re probably thinking: sure, but we don’t build too many iron-based technologies that operate at 1000 \(\mathrm{K}\)! You’re right, but the trick is that oftentimes we can coax these materials to get stuck in one of those metastable phases and then use it for technologies while it’s in that phase. Again, diamond is a great example: it’s not the ground state of carbon so it is metastable as diamond instead of graphite, but we know that it stays stuck there for a long time so for most technologies that use diamond (say, a piece of jewelry), we don’t worry about it changing out of its metastable phase.

Coming back to the topic of the lecture, when we make something, whether that something is as old as elemental iron or as new as a nanostructured perovskite, the simplest and most common way we have to tell its crystal structure is by \(\mathrm{XRD}\). In some cases, the use of \(\mathrm{XRD}\) can unravel the structural mystery of a material, as in the case of the double helix of DNA or the many proteins since. In other cases, it’s used not to unlock the secret of a completely new structure, but rather to classify a material into one or another of the well-known structures. Sometimes the reason is to understand a material, sometimes it’s to engineer the material properties, and oftentimes it’s both. But whatever the motivation, this incredibly powerful characterization tool has revolutionized what we know about solids.

Why this employs

We’ve been referencing these crystallographers (who are very picky about notation!) for several lectures now. But who are these people? And more to the point: who hires them? A whole lot of X-ray crystallographer jobs are out there in the biotech industry, in companies of all sizes. Blueprint Medicines, which looks like a Harvard spin-out and is just down the street, has an opening now with the title, “Senior Scientist/Principal Scientist, X-Ray Crystallography,” with the first job function description being, “Provide x-ray crystallographic and protein structure-function support, including structure-based drug design, to on-going drug discovery projects and new target discovery initiatives.” And the larger pharmaceutical and biotech companies have even more jobs. Take Novartis, which also has a big headquarters near MIT, just down Mass Ave. They’re hiring people to perform “crystallography experiments including crystallization screening using automated liquid handling.” GlaxoSmithKline has an opening for someone to “enable higher throughput x-ray crystallography.” Johnson and Johnson is hiring people with X-ray crystallography expertise to do “automated chemistry.” And I have to mention one last example because of the title of their current opening: Bristol-Myers-Squibb is looking for a “Research Investigator, Solid-State Chemistry.” Gotta love it! They want “an entry level scientist with background in X-ray crystallography, X-ray diffraction, and solid-state characterization.” That’s now you!

And it’s not all about pharma. Hospitals are hiring X-ray crystallographers too (these are not the same position as a radiologist) to work on research projects, for example with openings at Mass General and Dana-Farber. And many research positions in X-ray diffraction are out there too, from positions at the Howard Hughes Medical Institute to university labs and centers all across the country.

Example Problems

1. Determine the structure (simple cubic, body centered cubic, or face centered cubic) to which this \(\mathrm{XRD}\) pattern most likely corresponds (copper \(K_{\alpha}\) x-rays were used).

- Answer

-

Given that the indices of each plane are either all odd or all even, using the selection rules we are able to determine that this structure is \(\mathrm{FCC}\).

Lecture 22: From x-ray Diffraction to Crystal Structure

Summary

This lecture we finished analyzing the \(\mathrm{XRD}\) spectrum of an \(\mathrm{Al}\) sample, shown below.

The plot was obtained by shining \(\mathrm{K}\)-alpha x-rays from a \(\mathrm{Cu}\) target onto our \(\mathrm{Al}\) sample. What we want to do is figure out the crystal structure and the lattice constant of \(\mathrm{Al}\). To answer these questions, we need our handy Miller plane separating distance equation (where ' \(\mathrm{d}\) ' is the distance between two repeating Miller planes with indices \(\mathrm{hkl}\) in a cubic system, and ' \(a\) ' is the lattice constant):

\(d_{h k l}=\dfrac{a}{\sqrt{h^2+k^2+l^2}}\)

and the Bragg condition:

\(2 d_{h k l} \sin \theta_{h k l}=\lambda\)

Notice that both of these equations include \(d_{h k l}\). We can use this to our advantage and substitute one equation for \(d_{h k l}\) into another to obtain the following:

\(\left(\dfrac{\lambda}{2 a}\right)^2=\dfrac{\left(\sin \theta_{h k l}\right)^2}{h^2+k^2+l^2}\)

We know the value of the wavelength, because it is fixed by the \(K_\alpha\) x-rays from the copper source. These x-rays have a wavelength of \(1.54 \AA\). So the expression for our example becomes:

\(\left(\dfrac{1.54 \AA}{2 a}\right)^2=\dfrac{\left(\sin \theta_{h k l}\right)^2}{h^2+k^2+l^2}\)

Now we have constants on both sides of the equal sign, because the lattice parameter does not change. We can make an educated guess of the \(\mathrm{hkl}\) value by following the procedures outlined in the chart on the following page.

From the selection rules we know that \(\mathrm{Al}\) is an \(\mathrm{FCC}\) metal, since the (\(\mathrm{hkl}\)) combinations are always either all even or all odd. We can also take a value of \(\theta\) and a value of h, k, and l and plug these into the equation above to find the lattice parameter, given in the rightmost column of the chart.
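The indexing step can be sketched numerically. Because the measured peak list from the lecture plot is not reproduced here, the snippet below generates synthetic peak positions by assuming an FCC lattice with \(a = 4.05 \AA\) (roughly the accepted value for aluminum) and Cu \(K_\alpha\) x-rays at \(1.54 \AA\). It then shows that the correct (hkl) assignment returns the same lattice parameter for every peak, while a wrong assignment does not. All function and variable names are hypothetical.

```python
import math

CU_K_ALPHA = 1.54  # angstrom, the wavelength quoted in the text


def lattice_parameter(two_theta_deg, hkl, wavelength=CU_K_ALPHA):
    """Solve (lambda / 2a)^2 = sin^2(theta) / (h^2 + k^2 + l^2) for a."""
    h, k, l = hkl
    theta = math.radians(two_theta_deg / 2.0)
    return wavelength * math.sqrt(h * h + k * k + l * l) / (2.0 * math.sin(theta))


def two_theta_for(a, hkl, wavelength=CU_K_ALPHA):
    """Predict the peak position 2*theta (degrees) for a cubic lattice."""
    h, k, l = hkl
    d = a / math.sqrt(h * h + k * k + l * l)
    return 2.0 * math.degrees(math.asin(wavelength / (2.0 * d)))


# First four allowed reflections for each candidate structure
FCC_PLANES = [(1, 1, 1), (2, 0, 0), (2, 2, 0), (3, 1, 1)]
BCC_PLANES = [(1, 1, 0), (2, 0, 0), (2, 1, 1), (2, 2, 0)]

# Synthetic data, NOT measured values: peaks generated assuming FCC, a = 4.05 angstrom
synthetic_peaks = [two_theta_for(4.05, plane) for plane in FCC_PLANES]


def spread(peaks, planes):
    """Difference between the largest and smallest a implied by an assignment."""
    values = [lattice_parameter(t, p) for t, p in zip(peaks, planes)]
    return max(values) - min(values)


if __name__ == "__main__":
    print("FCC assignment spread:", spread(synthetic_peaks, FCC_PLANES))
    print("BCC assignment spread:", spread(synthetic_peaks, BCC_PLANES))
```

The assignment with the smallest spread is the one for which both sides of the equation stay constant, which is the criterion the chart uses.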

Why this matters

Photo of Henry Moseley is in the public domain.

This is Henry Moseley (image, Royal Society of Chemistry). He’s pictured there in his lab, holding in his hands, of course, a modified cathode ray tube. He was experimenting with X-rays. But Moseley’s interests were less about the crystal structures and Bragg conditions and more about the X-ray lines themselves and what they meant. He carried out a systematic study of the metals used to generate the X-rays, comparing the X-ray emission from 38 different chemical elements.

Some work had already been done that led to our understanding of the characteristic and continuous parts of the X-ray generation spectrum, as we discussed a few lectures ago. But a full systematic study had not been carried out until Moseley’s work. Take a look at the difference between the two X-ray spectra generated with two different targets: \(\mathrm{Mo}\) and \(\mathrm{Cu}\). Note the \(\mathrm{K}_{\alpha}\) and \(\mathrm{K}_{\beta}\) lines for each one, and that they’re shifted to lower wavelength for \(\mathrm{Mo}\) compared to \(\mathrm{Cu}\). As we know, this is because of the difference in energy between the shells of \(n=1\) and \(n=2\) (for \(\mathrm{K}_{\alpha}\)) or \(n=1\) and \(n=3\) (for \(\mathrm{K}_{\beta}\)), and it makes sense that this energy difference is greater (corresponding to lower wavelength) for \(\mathrm{Mo}\) since it’s heavier than \(\mathrm{Cu}\). But what exactly is the dependence, and why is it present? Let’s look at the data for a sequence of targets, directly from a subset of Moseley’s data.

These are characteristic X-ray lines for \(\mathrm{Ca}\) up through \(\mathrm{Zn}\), so going across the \(\mathrm{d}\)-block elements of the fourth row in the PT. Note that it’s not actually \(\mathrm{Zn}\) but rather brass, which we’ve already learned is a mixture of \(\mathrm{Zn}\) with \(\mathrm{Cu}\) – that’s because \(\mathrm{Zn}\) would melt under the high energy electron bombardment before it could give off any characteristic X-rays, so Moseley gave it the extra strength it needed by making brass, and then subtracted out the emission from \(\mathrm{Cu}\). Nice trick!

What Moseley found was that if the square root of the energy of the characteristic emission lines is plotted vs. atomic number, you get a straight line. He fit the data by considering the lines to come from a core excitation, so a difference in energy levels from Bohr's model:

\(E_{\text{x-ray}}=13.6[\mathrm{eV}](Z-1)^2\left(\dfrac{1}{1^2}-\dfrac{1}{2^2}\right)=\dfrac{3}{4}(13.6[\mathrm{eV}])(Z-1)^2\)

This is now called Moseley's law. Can you see why it's \(\mathrm{Z}-1\) instead of \(\mathrm{Z}\) as in the Bohr model? It's because the electron cascading down to generate the X-ray is "seeing" a 1-electron screening of the nucleus. That's because one of those core 1s electrons was knocked out, but there's one left there that screens out a positive charge, hence the \(\mathrm{Z}-1\). So what did this trend in the data mean? Here's what Moseley said in his 1913 paper, "We have here a proof that there is in the atom a fundamental quantity, which increases by regular steps as one passes from one element to the next. This quantity can only be the charge on the central positive nucleus, of the existence of which we already have definite proof."
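As a quick numerical check of Moseley's law, the sketch below evaluates the \(\mathrm{K}_{\alpha}\) energy for copper (\(Z=29\)) and converts it to a wavelength. The value \(hc \approx 12398.4\) eV·Å and the function names are assumptions brought in for the illustration. The result, roughly 8.0 keV and 1.55 Å, lands close to the 1.54 Å quoted for Cu \(\mathrm{K}_{\alpha}\) x-rays.

```python
import math

RYDBERG_EV = 13.6           # eV, as used in the text
HC_EV_ANGSTROM = 12398.4    # h*c in eV*angstrom (assumed constant)


def moseley_energy_ev(Z, n_f=1, n_i=2):
    """Characteristic x-ray energy with one-electron screening (Z - 1)."""
    return RYDBERG_EV * (Z - 1) ** 2 * (1.0 / n_f**2 - 1.0 / n_i**2)


def wavelength_angstrom(energy_ev):
    """Convert a photon energy in eV to a wavelength in angstroms."""
    return HC_EV_ANGSTROM / energy_ev


if __name__ == "__main__":
    energy = moseley_energy_ev(29)  # copper K-alpha
    print(f"E = {energy:.1f} eV, lambda = {wavelength_angstrom(energy):.3f} angstrom")
    # sqrt(E) is linear in Z: sqrt(E) / (Z - 1) is the same for every element
    for Z in (20, 24, 29):
        print(Z, math.sqrt(moseley_energy_ev(Z)) / (Z - 1))
```

The last loop prints the same ratio for each \(Z\), which is the straight-line behavior of \(\sqrt{E}\) versus atomic number.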

The reason this is such a big deal, and why I'm making it the Why This Matters for this chapter, is that even though the Mendeleev periodic table had been around and more elements were being discovered and added, there was a major flaw in the periodic table: the position predicted by an element's atomic weight did not always match the position predicted by its chemical properties. Remember that the positioning by Mendeleev was based on weight and properties, and when the periodicity called for it, he chose to order the elements based on their properties rather than their atomic weight. But was there something more fundamental than atomic weight?

Moseley's data only made sense if the positive charge in the nucleus increased by exactly one unit as you go from one element to the next in the PT. In other words, he discovered that an element's atomic number is identical to how many protons it has! I know this seems kind of obvious to us now, but back then "atomic number" was simply a number with no meaning, other than the element's place in the periodic table. The atomic number was not thought to be associated with any measurable physical quantity. For Mendeleev, periodicity was by atomic mass and chemical properties; for Moseley, it was by atomic number. This led to a much deeper understanding of the periodic table and his insights immediately helped to resolve some key mysteries, for example where to place the lanthanides in the PT \((\mathrm{La}=\# 57, \mathrm{Lu}=\# 71)\), or why \(\mathrm{Co}\) comes before \(\mathrm{Ni}\). And the gaps that Mendeleev brilliantly left open in his PT to create periodicity now made sense as missing atomic numbers in a sequence: for example, elements \(43, 61, 72\), and \(75\) were now understood to contain that many protons (they were discovered later by other scientists: technetium, promethium, hafnium and rhenium).

Moseley died tragically in 1915 at age 27 in a battle in WW1. In 1916 no Nobel Prizes were awarded in physics or chemistry, which is thought to have been done to honor Moseley, who surely deserved one.

Why this employs

We covered X-ray generation and machine manufacturers two chapters ago, and in the last chapter we looked at jobs related to X-ray crystallography. For this last Why This Employs related to X-rays, it's time to go big or go home. And when I say big, I mean really big. When electrons or for that matter any charged particles are accelerated to near light speeds, then the acceleration they experience simply to stay in a loop produces massively energetic radiation. The wavelength can vary dramatically, but very often these enormous accelerators are used to make super-high-energy X-rays. These rays are dazzlingly bright, millions of times brighter than sunlight and thousands of times more intense than X-rays produced in other ways. This level of brightness makes them useful for pretty much any and all areas of research and fields of science. Some types of measurements are only possible when synchrotron light is used, and for other types one can get better quality information in less time than with traditional light sources. They've been shown to be useful in so many areas it's impossible to list them all, but certainly in biology, chemistry, physics, materials, medicine, drug discovery, and geology, to name only a few fields, synchrotrons have made a dramatic impact. They're in such demand that often one needs to book time on them many months in advance. A typical synchrotron can have as many as 50 "beam lines" that grab the high energy X-rays out of the loop and focus them in a beam where experiments are done. These lines are usually booked and put to use 24 hours per day, 7 days per week, all year round. Here’s a photo of one of them, the Advanced Photon Source at Argonne National Laboratory in Illinois. Their tagline overview statement reads, “The Advanced Photon Source (APS) at the U.S. Department of Energy’s Argonne National Laboratory provides ultra-bright, high-energy storage ring-generated x-ray beams for research in almost all scientific disciplines.”

The Employment part of this is pretty cool. These facilities, which are called “synchrotrons,” are all over the world, and they require thousands of people to build and then run. The international nature of them is astounding: just do an image search for synchrotron and you’ll find pictures of them all over the planet. And that means jobs in many different locations. Some are old, like the one at Berkeley National Lab (but it’s still kicking!), some are medium-sized like the APS pictured above, and some are huge like the Hadron Collider I referenced in the last lecture as a place where 3D X-ray imaging was invented. Colliders are also synchrotron light sources, since they’re built to accelerate particles to very high speeds. Even though colliders may be used to smash particles together at these speeds, they’re also often used simply as a way to generate high intensity light.

I don’t have a specific job title in mind, but if you look at a list like this one: https://en.wikipedia.org/wiki/List_of_synchrotron_radiation_facilities you’ll see where these synchrotrons are, and for each one of them there’s an “employment” link you can click on to explore possible jobs.

Extra practice

1. Determine the element that made up the sample from Lecture 21 Extra Practice Problem 1. The XRD pattern is reproduced below (copper \(K_\alpha\) x-rays were used).

- Answer

-

To know which element was used as the sample, find the lattice parameter (a) using the equation for interplanar spacing \(\left(d_{h k l}\right)\), Bragg's law, and Moseley's law. For \(\mathrm{h,k,l}\) and \(\theta_{h k l}\), pick a plane and a corresponding angle from the chart we developed last chapter, shown below:

\[\begin{gathered}
d_{h k l}=\dfrac{a}{\sqrt{h^2+k^2+l^2}} \\
\lambda=2 d_{h k l} \sin \left(\theta_{h k l}\right)
\end{gathered} \nonumber\]

The energy corresponds to \(\mathrm{Cu}\) (\(Z=29\)) \(K_\alpha\) radiation, with \(n_f=1\) and \(n_i=2\):

\[\begin{gathered}
E=\dfrac{h c}{\lambda}=13.6[\mathrm{eV}](Z-1)^2\left(\dfrac{1}{n_f^2}-\dfrac{1}{n_i^2}\right) \\
\lambda=\dfrac{h c}{13.6[\mathrm{eV}](Z-1)^2\left(\frac{1}{n_f^2}-\frac{1}{n_i^2}\right)} \\
d_{h k l}=\dfrac{h c}{13.6[\mathrm{eV}](Z-1)^2\left(\frac{1}{n_f^2}-\frac{1}{n_i^2}\right) 2 \sin \theta_{h k l}} \\
a=\dfrac{h c \sqrt{h^2+k^2+l^2}}{13.6[\mathrm{eV}](Z-1)^2\left(\frac{1}{n_f^2}-\frac{1}{n_i^2}\right) 2 \sin \theta_{h k l}}=3.53 \AA
\end{gathered} \nonumber\]

This lattice parameter corresponds to \(\mathrm{Ni}\).

2. You would like to perform an XRD experiment, but you don't know what target is used in the diffractometer in your lab. You put in a calibration sample of iron, which is BCC and has a lattice parameter of \(2.856\) angstroms. If you observe the following XRD pattern, what material is the target? You are pretty sure that there is a filter that prevents anything with lower energy than \(K_\alpha\) radiation from hitting your sample.

The peaks observed are as follows:

| counts | 10 | 1000 | 20 | 2200 | 8 | 5 | 1200 | 2500 |
|---|---|---|---|---|---|---|---|---|
| \(2\theta\) | 17.38 | 20.87 | 24.67 | 29.62 | 30.30 | 35.15 | 36.45 | 42.37 |

a) What kind(s) of x-rays are hitting the sample?

- Answer

-

\(K_\alpha\) and \(K_\beta\)

b) How many planes are represented by the data? Which planes are they?

- Answer

-

4 planes are represented: \((110),(200),(211)\), and \((220)\)

c) What are the interplanar spacings associated with these planes?

- Answer

-

\[d_{h k l}=\dfrac{2.856 \AA}{\sqrt{h^2+k^2+l^2}} \nonumber\]

Plugging in each (\(\mathrm{hkl}\)), the spacings are 2.02, 1.43, 1.17, and 1.01 \(\AA\).

d) Which element was used as the target?

- Answer

-

\[\begin{gathered}
\lambda=2 d_{h k l} \sin \left(\theta_{h k l}\right) \\
\lambda_{K_\alpha}=0.73 \AA \\
\lambda_{K_\beta}=0.61 \AA
\end{gathered} \nonumber\]

- Answer

-

\[E=\dfrac{h c}{\lambda}=13.6[\mathrm{eV}](Z-1)^2\left(\dfrac{1}{n_f^2}-\dfrac{1}{n_i^2}\right) \nonumber\]

For \(K_\alpha, n_i=2\) and \(n_f=1\). For \(K_\beta, n_i=3\) and \(n_f=1\).

\[\begin{aligned}
&h=4.135 \times 10^{-15} \mathrm{~eV} \cdot \mathrm{s} \\
&c=3 \times 10^8 \mathrm{~m} / \mathrm{s} \\
&Z=42
\end{aligned} \nonumber\]

The target is therefore molybdenum (\(\mathrm{Mo}\), \(Z=42\)).
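The chain of steps in Problem 2 (spacing, then wavelength, then atomic number) can be reproduced with a few lines of Python. This is a sketch under stated assumptions: the strong peaks in the table are taken as \(K_\alpha\) reflections and the weak ones as \(K_\beta\), the planes are the four BCC planes from part (b), \(hc \approx 12398.4\) eV·Å, and all function names are hypothetical.

```python
import math

A_FE = 2.856                # angstrom, lattice parameter given in the problem
HC_EV_ANGSTROM = 12398.4    # h*c in eV*angstrom (assumed constant)
RYDBERG_EV = 13.6           # eV

PLANES = [(1, 1, 0), (2, 0, 0), (2, 1, 1), (2, 2, 0)]
STRONG_PEAKS = [20.87, 29.62, 36.45, 42.37]  # 2*theta in degrees, assigned to K-alpha
WEAK_PEAKS = [17.38, 24.67, 30.30, 35.15]    # 2*theta in degrees, assigned to K-beta


def d_spacing(a, hkl):
    """Inter-planar spacing for a cubic lattice."""
    h, k, l = hkl
    return a / math.sqrt(h * h + k * k + l * l)


def mean_wavelength(peaks, planes, a=A_FE):
    """Average of lambda = 2 d sin(theta) over matched (peak, plane) pairs."""
    values = [
        2.0 * d_spacing(a, p) * math.sin(math.radians(t / 2.0))
        for t, p in zip(peaks, planes)
    ]
    return sum(values) / len(values)


def atomic_number(wavelength, n_f=1, n_i=2):
    """Invert Moseley's law, E = 13.6 (Z - 1)^2 (1/n_f^2 - 1/n_i^2), for Z."""
    energy = HC_EV_ANGSTROM / wavelength
    return 1.0 + math.sqrt(energy / (RYDBERG_EV * (1.0 / n_f**2 - 1.0 / n_i**2)))


if __name__ == "__main__":
    lam_alpha = mean_wavelength(STRONG_PEAKS, PLANES)
    lam_beta = mean_wavelength(WEAK_PEAKS, PLANES)
    print(f"K-alpha: {lam_alpha:.2f} angstrom -> Z = {atomic_number(lam_alpha):.1f}")
    print(f"K-beta:  {lam_beta:.2f} angstrom -> Z = {atomic_number(lam_beta, n_i=3):.1f}")
```

Both lines give a value of \(Z\) that rounds to 42, so the two sets of peaks agree on the same target element.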

Lecture 23: Point Defects

Summary

A point defect is a localized disruption in the regularity of the crystal lattice. There are four types of point defects: vacancies, interstitial impurities, self-interstitials, and substitutional impurities.

Arrhenius determined a law for the temperature dependence of the rate at which processes occur:

\(k=A e^{-E_a / R T}=A e^{-E_a / k_B T}\)

where \(R\) is the gas constant (or \(k_B\) is the Boltzmann constant) and \(E_a\) is the activation energy. The term in the exponent should be unitless: therefore, if the activation energy is given in \(\mathrm{J} / \mathrm{mol}\), use the version with \(R\), but if the activation energy is given in \(\mathrm{J}\), use the \(k_B\) version. Recall that the gas constant is just \(R=k_B N_A\). The units of the rate \(k\) are determined by the prefactor \(A\), which is often interpreted as an attempt frequency, while \(k_B T\) in the exponent can be thought of as a measure of the average thermal energy of the system.
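The unit bookkeeping between the \(R\) and \(k_B\) forms can be checked directly. In this minimal sketch the prefactor, activation energy, and temperature are made-up illustrative numbers, and the function names are hypothetical; the only point is that the two forms give the same rate once the activation energy is converted between per-mole and per-particle units.

```python
import math

K_B = 1.380649e-23      # J/K, Boltzmann constant
N_A = 6.02214076e23     # 1/mol, Avogadro constant
R = K_B * N_A           # J/(mol K), gas constant


def rate_molar(A, Ea_J_per_mol, T):
    """Arrhenius rate with the activation energy given per mole."""
    return A * math.exp(-Ea_J_per_mol / (R * T))


def rate_per_particle(A, Ea_J, T):
    """Arrhenius rate with the activation energy given per particle."""
    return A * math.exp(-Ea_J / (K_B * T))


if __name__ == "__main__":
    A = 1.0e13          # 1/s, hypothetical prefactor
    Ea_mol = 50.0e3     # J/mol, hypothetical activation energy
    T = 300.0           # K
    print(rate_molar(A, Ea_mol, T))
    print(rate_per_particle(A, Ea_mol / N_A, T))  # same rate, per-particle units
```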

Vacancies are always present in every solid because they're a result of thermally-activated processes. We can consider the rate of formation of a vacancy and the rate of removal of that same vacancy as two thermally-activated processes, each with their own rate. At any given temperature, when the rate of forming the vacancy is the same as the rate of "de-forming" the vacancy then the vacancy concentration in the crystal will be in equilibrium. Since each rate is thermally activated we can use an Arrhenius equation to describe both the forward and back process, and setting them equal for equilibrium one arrives at a formula describing how the vacancy concentration depends on temperature:

\(N_v=N e^{-E_a / k_B T}\)

where \(N_v / N\) is the fractional concentration of vacancies and \(E_a\) is the activation energy \([\mathrm{J}]\) required to remove one atom. If the vacancy occurs in an ionic solid, charge neutrality must be maintained. Therefore, the defect either forms as a Schottky defect, where a pair of charges (one cation and one anion) is removed, or as a Frenkel defect, where the displaced ion sits elsewhere in the lattice on an interstitial site. For a Schottky defect in an ionic solid like \(\mathrm{CaCl}_2\), two anions \(\left(\mathrm{Cl}^{-}\right)\) must be removed for each cation \(\left(\mathrm{Ca}^{2+}\right)\) vacancy to maintain charge neutrality. Frenkel defects are typically found in ionic solids with a large anion and a smaller cation, like \(\mathrm{AgCl}, \mathrm{AgBr}\), and \(\mathrm{AgI}\), for example.
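To get a feel for how strongly the equilibrium vacancy fraction depends on temperature, here is a small sketch that evaluates \(N_v / N = e^{-E_a / k_B T}\). The activation energy of 1 eV and the two temperatures are hypothetical inputs chosen for illustration, and \(k_B\) is expressed in eV/K so that \(E_a\) can be entered in eV.

```python
import math

K_B_EV = 8.617333e-5  # eV/K, Boltzmann constant


def vacancy_fraction(Ea_eV, T):
    """Equilibrium fraction of vacant lattice sites, N_v / N."""
    return math.exp(-Ea_eV / (K_B_EV * T))


if __name__ == "__main__":
    Ea = 1.0  # eV, hypothetical vacancy formation energy
    for T in (300.0, 1000.0):  # K
        print(f"T = {T:6.0f} K  N_v/N = {vacancy_fraction(Ea, T):.2e}")
```

With these inputs the fraction rises by many orders of magnitude between room temperature and 1000 K, which is why vacancies are described as thermally activated.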

Interstitial defects can occur in covalent solids as well: in this case, an extra atom occupies a site that is not part of the lattice, but the charge neutrality requirement doesn't necessitate the creation of a vacancy provided the interstitial atoms have the same charge as the lattice atoms. For example, a \(\mathrm{C}\) interstitial in \(\mathrm{Fe}\) is charge neutral. If the interstitial atom is the same type of atom as the lattice, like a \(\mathrm{Si}\) atom in a \(\mathrm{Si}\) lattice but not on a lattice site, the defect is called a self-interstitial. The energy required to form a self-interstitial \((2-5 \mathrm{eV})\) is much higher than for a vacancy \((0.5-1 \mathrm{eV})\), so these defects are much less common: this can be rationalized by thinking about how hard it would be to squeeze an atom between similarly-sized atoms arranged in a closely-packed lattice.

Atoms which take the place of another atom in a lattice are called substitutional defects. Generally, the Hume-Rothery rules provide guidelines for which atoms can be a substitutional defect: the atomic size must be within \(\pm 15\%\), the crystal structure must be the same, the electronegativity must be similar, and the valence must be the same or higher.
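The four Hume-Rothery guidelines can be expressed as a simple checklist. The sketch below is illustrative only: the `Element` class, the function name, and the 0.4 cutoff for "similar" electronegativity are assumptions (the text gives no numerical threshold), and the Cu and Ni property values are approximate textbook figures entered by hand.

```python
from dataclasses import dataclass


@dataclass
class Element:
    symbol: str
    radius_nm: float          # metallic radius, approximate
    structure: str            # e.g. "FCC", "BCC"
    electronegativity: float  # Pauling scale, approximate
    valence: int


def hume_rothery_checks(solute, solvent, max_size_mismatch=0.15,
                        max_en_difference=0.4):
    """Return a pass/fail flag for each of the four guidelines.

    max_en_difference is an assumed cutoff for 'similar' electronegativity.
    """
    mismatch = abs(solute.radius_nm - solvent.radius_nm) / solvent.radius_nm
    en_gap = abs(solute.electronegativity - solvent.electronegativity)
    return {
        "size": mismatch <= max_size_mismatch,
        "structure": solute.structure == solvent.structure,
        "electronegativity": en_gap <= max_en_difference,
        "valence": solute.valence >= solvent.valence,
    }


if __name__ == "__main__":
    # Approximate values for illustration
    cu = Element("Cu", 0.128, "FCC", 1.90, 1)
    ni = Element("Ni", 0.125, "FCC", 1.91, 2)
    print(hume_rothery_checks(solute=ni, solvent=cu))
```

Returning one flag per rule, rather than a single yes/no, keeps it visible which guideline a candidate pair fails.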

Why this matters

Let’s pick up on the interstitial defect of carbon in iron, otherwise known as steel. This particular defect is one that has positive benefits if it’s controlled carefully and the right amount of carbon (not much, it turns out) is placed in the right positions within the iron lattice (the tetrahedral holes, for example, in bcc \(\mathrm{Fe}\)). In fact, the change in iron’s properties is absolutely tremendous and represents a spectacular example of how defects can be used beneficially. If you take a piece of pure iron and apply sideways strain on it, then its resolved shear stress is quite low, around 10 MPa. That means that if you push sideways on a piece of pure iron it will deform under 10 MPa of pressure. But with just 1% \(\mathrm{C}\) on interstitial sites, the \(\mathrm{C}\)-doped iron can have a resolved shear stress as high as 2000 MPa, 200 times larger than the undoped case!

Production vs. time figure removed due to copyright restrictions.

Now, this phenomenon has been known and practiced for over 2500 years, when people first observed the mechanical strength imparted on iron when it was heated by a charcoal fire (the charcoal was the carbon source). But that’s just it: 2500 years ago, or 1000 years ago, or even just 100 years ago, not a whole lot of steel was being made each year. This is now changing, and it’s changing dramatically, and it’s Why This Matters.

Industrial Carbon Emission Chart

Take a look at the chart above (from US Geological Survey, UN, FAO, World Aluminium Association) of the production amount for some of the materials humans make on a scale massive enough to require enormous chunks of the world’s energy consumption. Cement and steel are the top two, and estimates put them at 10-15% combined of annual global \(\mathrm{CO}_2\) emissions. If we look at just \(\mathrm{CO}_2\) emissions from industrial processes, steel has the biggest share at 25% of the total. But even more important are those slopes in the production trends: note that the use of these products is growing and will continue to grow dramatically into the future. Back 2500 years ago it didn’t matter how steel was made. They also had no idea why the charcoal gave iron those properties. Today, not only do we need to find new ways to make steel more efficiently, but we also know what’s happening in the material at the atomic and bonding scale. In other words, we understand its solid state chemistry.

How can we make steel in a more energy efficient manner? Answering that question relies on knowledge of the point defects in the material, and specifically on the energy it takes to get carbon into the interstitial lattice. And it’s not always obvious. For example, if we compare the atomic size of \(\mathrm{C}\) with the sizes of the available interstitial volumes in \(\mathrm{Fe}\), it’s clear that it doesn’t quite fit, and some type of lattice distortion will have to take place in order to accommodate the interstitial defect, even one as small as a \(\mathrm{C}\) atom. But that means it’s not as simple as occupying the defect site with the most room, since we need to know how atoms get strained in response to the defect being there. Take the example of \(\alpha\)-iron (bcc): in that phase you would think a \(\mathrm{C}\) atom occupies the larger tetrahedral hole, but in fact it prefers to go to the octahedral interstitial site. The reason for this preference is that when the \(\mathrm{C}\) atom goes into the interstitial, strain gets relieved for the octahedral site by two nearest-neighbor iron atoms moving a little bit, while for the tetrahedral site, four iron atoms are nearest neighbors and displacing all of them requires more strain energy. This is just one phase of iron and two different sites. There may be ways to move \(\mathrm{C}\) atoms into other phases more easily, that would take less energy, and give the same strength. Or perhaps there are other ways beyond fire (which is why steel-making takes so much energy) to get the defect chemistry just right. This is a hard problem, but it’s a critical one: take a look at this chart from a recent paper published in Science that breaks down which sectors will be hardest to make “green.” Note the prominence of steel and cement! To solve such hard problems, we will need advances in defect chemistry.
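To put rough numbers on the "doesn't quite fit" argument, here is a minimal hard-sphere sketch of the two interstitial hole sizes in bcc iron. The function name is hypothetical, the radii for \(\mathrm{Fe}\) and \(\mathrm{C}\) are approximate values assumed for illustration, and the model treats atoms as rigid touching spheres, which ignores exactly the lattice relaxation discussed above.

```python
import math


def bcc_interstitial_radii(atom_radius):
    """Largest sphere that fits each bcc interstitial site (hard-sphere model).

    In bcc the atoms touch along the body diagonal, so a = 4R / sqrt(3).
    Octahedral site (face center): nearest atoms are a/2 away.
    Tetrahedral site (1/2, 1/4, 0): nearest atoms are a*sqrt(5)/4 away.
    """
    a = 4 * atom_radius / math.sqrt(3)
    octahedral = a / 2 - atom_radius
    tetrahedral = a * math.sqrt(5) / 4 - atom_radius
    return octahedral, tetrahedral


R_FE_NM = 0.124  # approximate metallic radius of Fe (assumed value)
R_C_NM = 0.077   # approximate covalent radius of C (assumed value)

octa, tetra = bcc_interstitial_radii(R_FE_NM)
print(f"octahedral hole radius:  {octa:.3f} nm")
print(f"tetrahedral hole radius: {tetra:.3f} nm")
print(f"carbon radius:           {R_C_NM:.3f} nm")
```

In this model the tetrahedral hole is the larger of the two, yet carbon is bigger than both, so geometry alone cannot decide which site is preferred. The strain energy of the neighboring iron atoms has to be considered.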

Why this employs

This is an easy one: there’s actually a job position called “Defect Engineer”! At Global Foundries, they care about defects for the basic manufacture of semiconductor materials, while at Intel, there are openings for Defect Engineers to work on 3D XPoint, which is a new non-volatile memory technology. Intel also has an opening for a “Defect Reduction Engineer.” These and so many more similar openings are for industries where devices are made in cleanrooms, often very clean cleanrooms. A “Class 1” cleanroom, for example, means that if you take a cubic meter of air at any given place in the room, you would count fewer than 10 particles of size 100 nanometers, fewer than 2 particles of size 200 nanometers, and zero particles larger than that. The reason fabrication facilities need such a high level of air purity is that they’re making features on the order of tens of nanometers, and so any small defect can be a big problem.

Now, then again, these are not necessarily point defects (although certainly 100 nm particles that hit a layer of silicon while it’s being processed can cause point defects). How about technologies where the point defect is the key part of the technology itself? In that case you may be talking about a solid oxide fuel cell (\(\mathrm{SOFC}\)). These are typically metal oxides and they work by conducting oxygen ions through the material. But the only way an oxygen ion can move is if there are oxygen vacancies present, and in enough density at reasonable temperatures. Many companies work on developing efficient, low-cost, and low-temperature solid oxide fuel cells. Every single car manufacturer, for example, has an interest in this as a possible future way to power transportation (Nissan has a cool demo car). Other companies like Precision Combustion, Elcogen, or Bloom Energy all work to build \(\mathrm{SOFCs}\), with that last one stating “better electrons” on their homepage. Nice (although I thought all electrons were identical... but anyway). The point is that there’s a growing interest in \(\mathrm{SOFC}\) and an already strong market set to reach $1B by 2024. With greater control over those oxygen vacancies, the ability to make the materials cheaper, and the ability to control defects at lower temperatures, the use of \(\mathrm{SOFC}\) could increase even much more than that!

Extra practice

1. Sketch the following defects:

a) Schottky

b) Frenkel

c) Substitutional impurity+vacancy

d) Self-interstitial

e) Substitutional impurity

f) Vacancy

- Answer

-

2. Solid oxide fuel cells rely on the reaction of fuel with oxygen to form water. A ceramic oxide can be doped to introduce oxygen vacancies that allow charge to conduct through the solid electrolyte. Zirconia \((\mathrm{ZrO}_2)\) can be doped by adding \(\mathrm{Sc}_2\mathrm{O}_3\). If \(0.5 \mathrm{~g}\) of \(\mathrm{Sc}_2\mathrm{O}_3\) can be incorporated into \(10 \mathrm{~g}\) of \(\mathrm{ZrO}_2\) while maintaining the zirconia structure, how many oxygen vacancies are generated?

- Answer

-

We are doping \(\mathrm{ZrO}_2\) with \(\mathrm{Sc}_2 \mathrm{O}_3\). The \(\mathrm{Zr}^{4+}\) ions are replaced by the \(\mathrm{Sc}^{3+}\) ions, creating a charge imbalance of \(-1\) with each substitution. This means that for every 2 such replacements, or every \(2 \mathrm{~Sc}^{3+}\) ions added, there will be a \(-2\) imbalance, and an oxygen vacancy \(\left(V_O^{\prime \prime}\right)\) will be created to compensate and achieve charge neutrality.

\[0.5 \mathrm{~g~Sc}_2 \mathrm{O}_3\left(\dfrac{1 \mathrm{~mol~Sc}_2 \mathrm{O}_3}{137.9 \mathrm{~g}}\right)=0.0036 \mathrm{~mol~Sc}_2 \mathrm{O}_3 \nonumber\]

\(0.0072 \mathrm{~mol~Sc}^{3+}\) added \(=0.0036 \mathrm{~mol~} V_O^{\prime \prime}\)

Total number of oxygen vacancies \(=2.18 \times 10^{21}\)
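The arithmetic in this answer can be checked with a few lines of code. This is a minimal sketch: the function name is hypothetical, and the atomic masses are approximate values chosen to reproduce the 137.9 g/mol used above.

```python
AVOGADRO = 6.022e23  # particles per mole
M_SC = 44.96         # g/mol, approximate atomic mass of Sc
M_O = 16.00          # g/mol, approximate atomic mass of O


def oxygen_vacancies_from_sc2o3(mass_g):
    """Number of oxygen vacancies created by dissolving Sc2O3 in ZrO2.

    Each formula unit supplies two Sc3+ ions, and every two Sc3+ ions
    that replace Zr4+ are compensated by one oxygen vacancy.
    """
    molar_mass = 2 * M_SC + 3 * M_O   # about 137.9 g/mol
    mol_sc2o3 = mass_g / molar_mass
    mol_sc_ions = 2 * mol_sc2o3
    mol_vacancies = mol_sc_ions / 2
    return mol_vacancies * AVOGADRO


n_vacancies = oxygen_vacancies_from_sc2o3(0.5)
print(f"{n_vacancies:.3g} oxygen vacancies")
```

Note that the 10 g of \(\mathrm{ZrO}_2\) never enters the calculation: the vacancy count is set only by how much dopant is incorporated.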

Lecture 24: Line Defects and Stress-Strain Curves

Summary

Line defects are 1-dimensional defects in a crystal that affect many macroscopic materials properties, including deformation. In 3.091, we’ll focus on two types of deformation: elastic deformation, which is reversible (strain only occurs when there is a stress applied, and it goes back once the stress is removed), and plastic deformation, which is permanent.

Elastic deformation can be likened to connecting all of the atoms with springs: Hooke’s law, \(F=-kx\), tells us that there is a restoring force that returns the material to its initial state. This corresponds to the initial linear region in a stress-strain curve. If a material is brittle, it will likely break in the linear regime. However, if the material is ductile, it can undergo plastic deformation: the material no longer responds linearly to the applied stress. One deformation mechanism that occurs during plastic deformation is called slip: individual planes of atoms slide past each other due to the presence of a line defect. A dislocation is a type of line defect that forms when atoms are slightly misaligned: an extra plane of atoms exists in the crystal.

Dislocations can move through a crystal when a force is applied. Atoms slip over one another to relieve the internal stress caused by applying a force. How can we tell what the slip planes are? In order for the material to slip, two adjacent planes of atoms must slide past each other. As this happens, the bonds between the planes must break and re-form. Therefore, slip planes must be planes that have the lowest inter-planar bond density. You can verify that this means that the most densely packed planes will be slip planes, because they have the highest intra-planar bond density. This means that slip occurs parallel to the closely packed planes: together, the slip planes and slip direction form a crystal’s slip system. Many materials can undergo dislocation-mediated slip, but metals in particular are known for this mechanism of deformation. The sea of electrons we learned about in metals allows bonds to move around (break and re-form in a new spot) with ease compared to ionic and covalent solids, which have much more rigid electronic structures.
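One way to carry out the "you can verify" step for an FCC crystal is to compare the planar density (atoms per unit area) of a few low-index planes. The sketch below counts atom centers in one repeat unit of each plane; the function name is hypothetical, and the densities are in units of atoms per \(a^2\), where \(a\) is the cubic lattice constant.

```python
import math


def fcc_planar_densities(a=1.0):
    """Atoms per unit area for three low-index FCC planes.

    (100): a x a square holding 4 corners x 1/4 + 1 center = 2 atoms.
    (110): a x sqrt(2)a rectangle holding 4 corners x 1/4
           + 2 edge atoms x 1/2 = 2 atoms.
    (111): equilateral triangle of side sqrt(2)a holding 3 corners x 1/6
           + 3 edge atoms x 1/2 = 2 atoms; its area is (sqrt(3)/2) a^2.
    """
    return {
        "(100)": 2 / (a * a),
        "(110)": 2 / (math.sqrt(2) * a * a),
        "(111)": 2 / (math.sqrt(3) / 2 * a * a),
    }


densities = fcc_planar_densities()
for plane, density in densities.items():
    print(f"{plane}: {density:.3f} atoms per a^2")

densest = max(densities, key=densities.get)
print("most densely packed plane:", densest)
```

The (111) plane comes out densest, which is consistent with the close-packed planes acting as slip planes in FCC metals.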

When stress is first applied to an elastic material, dislocations form initially as planes of atoms pull apart. If two dislocations run into each other as they’re moving around, the defects can become pinned, so they can no longer move through the crystal. As more and more dislocations are pinned, they become tangled and slip doesn’t occur: the material would continue to deform elastically but it would require much more force to deform it. In other words, dislocation pinning makes materials harder. This mechanism of material strengthening is called work hardening. Work hardening causes the yield strength to increase, but at the expense of ductility.

Why this matters

Let’s talk about wind energy. Wind has the same intermittency challenges that solar has (so in other words it can only really be useful on a large scale if we can store the energy cheaply and efficiently). But wind also has many advantages and is a very appealing way to generate electricity. That’s why the installed wind capacity has seen tremendous growth globally over the last 20 years. In the U.S., more than 5% of our total electricity is now generated by the wind.

Global Cumulative Installed Wind Capacity 2001-2016 (pg. 3) chart

A wind turbine is actually based on fairly simple technology, which is one of the reasons it’s so appealing. Basically, the wind turns the blades, which provides the force to run an electric motor in reverse to generate electricity. The challenge is that the most consistent, high-energy winds occur at high altitudes, so the blades are more efficient the larger and higher up one can make them go. This means that the blades have to support tremendous mechanical loads from the high wind power. And to make matters more difficult, the blades have to be able to go back and forth between high, low, or no wind depending on the time of day. (As an aside, I love to look at maps, and if you’re interested in seeing how the wind speed varies around the globe, there are a lot of cool maps that show this to you.)

So in other words, we need blades that are strong enough that they don’t break apart under the extreme stress of the wind force, but flexible enough that they can bend without irreversible deformation. And what is it that we need to understand and engineer in order to make better blades? The line defects of course!!

Take a look at this plot of materials.

Here we’re looking at the density of the material on the x-axis and the Young’s modulus on the y-axis. The Young’s modulus is simply a measure of the elastic stiffness of the material, so if we go back up to our stress/strain curve at the beginning of this chapter, it’s related to the slope of the linear elastic regime. For a wind turbine blade, we’d like it to be lightweight and strong, but also flexible. As we learned in this lecture, the line defects are related to the plasticity, which is related to the yield stress, which is related to how much a material can be elastically strained.
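To make the link between Young's modulus and the slope of the elastic regime concrete, here is a minimal sketch that fits a straight line to stress-strain points. The data are synthetic, generated from an assumed modulus of 70 GPa (roughly that of aluminum), and the helper `fit_slope` is a hypothetical name; real measurements would include noise and a departure from linearity near the yield point.

```python
def fit_slope(x_values, y_values):
    """Ordinary least-squares slope of y versus x."""
    n = len(x_values)
    mean_x = sum(x_values) / n
    mean_y = sum(y_values) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(x_values, y_values))
    sxx = sum((x - mean_x) ** 2 for x in x_values)
    return sxy / sxx


ASSUMED_MODULUS_GPA = 70.0  # assumption used only to generate the data

# Synthetic elastic-regime points: strain is unitless, stress is in MPa.
strain = [i * 0.0005 for i in range(6)]
stress_mpa = [ASSUMED_MODULUS_GPA * 1000 * e for e in strain]

# The slope has units of MPa; divide by 1000 to report GPa.
modulus_gpa = fit_slope(strain, stress_mpa) / 1000
print(f"fitted Young's modulus: {modulus_gpa:.1f} GPa")
```

Because the points were generated from a perfect line, the fit simply recovers the assumed modulus. With measured data, only the points inside the linear regime should be included.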

From the chart, we can compare the different classes of materials (like metals vs. ceramics vs. polymers, etc) for two of these properties: density and stiffness. What’s interesting about this is that there are enormous parts of the chart that are currently empty. In other words, there’s a lot of work to be done to find and prepare materials that are both heavy and flexible, or light and strong. And you know what this means: new chemistry combined with control over line defects!

Why this employs

Let’s go big. This boat, for example, is called the SS Schenectady, and it has a bit of a problem. Mostly that it’s cracked in half. A lot of ships built during World War II were made from low-grade steel, which was too easy to fracture at the temperatures of the sea. In fact, they did test the strength of their steel but only in the dry dock, so the test temperature was higher than the operational temperature. Once the ship was in the colder water, the steel became much more brittle and it became much easier for defects to form, and once they formed it was easy for them to grow under applied stress. One certainly doesn’t want plastic deformation of a ship’s metal, but one does not want this type of brittleness either. Getting the right strength for the right application under the right conditions requires a lot of knowledge of the materials and their defect properties. And there are a lot of jobs in this space too. Think about it: pretty much everything we build today has to have some sort of operating conditions where the mechanical properties can be counted on to work as expected. Otherwise, ships crack in half, bridges collapse, and roads buckle.

Since I just gave the example of the ship, how about this one: the U.S. Department of the Navy has an opening for a “Materials Engineer” where they explicitly ask for experience in “strength of materials (stress-strain relationships).” Corning’s Manufacturing, Technology and Engineering division is hiring a “Scientist/Engineer” to do modeling of the strength of materials, presumably mostly glasses. There’s an opening for a “Research Engineer – Materials Behavior” at GE to design mechanical properties of new structural materials and coatings for aircraft propulsion. There are so many jobs in this space I couldn’t possibly even categorize them all: pretty much any company that makes or deals with materials has jobs available related to their mechanical strength and failure. From ships to buildings, medical devices to clothing, spacecraft to furniture, defects hold the key.

Extra practice

1. You obtain the following stress-strain curve for an aluminum sample (FCC).

a) Label the following regions on the plot (and the axes!):

Elastic regime, plastic regime, yield point, fracture point, elastic modulus

- Answer

-

Axes: stress, \(\sigma\), on the \(y\)-axis, has units of force/area. Strain, \(\epsilon\), on the \(x\)-axis, is unitless, but often represented as length/length.

b) What is the slip system in aluminum?

- Answer

-

The slip system for FCC is the close-packed direction and close-packed plane: \(\langle 110\rangle\) and \(\{111\}\). Recall that the angle brackets are used to denote families of directions, and the curly braces are used to denote families of planes!

Lecture 25: Amorphous Materials: Glassy Solids

Summary

Glasses are “amorphous materials:” all of the atoms are randomly arranged in a non-repeating structure. In \(3.091\), we'll focus on one type of glass: silica, or \(\mathrm{SiO}_2\). Each silicon atom has four valence electrons, so it is happy to form 4 single bonds. If an oxygen bonds to each of these valence electrons, each of the oxygens is left with an extra electron, forming a \(\left(\mathrm{SiO}_4\right)^{4-}\) molecule. However, when a solid is formed from these silicate molecules, the \(\mathrm{O}\) can be shared between neighboring silicates, forming a bridge.

The individual tetrahedral silicate molecules stay intact, but they can freely rotate relative to the other silicate molecules in the solid. When they don’t arrange in an ordered fashion, silica glass is formed. Whether the solid that forms is crystalline or glassy depends strongly on the processing conditions the silica undergoes.

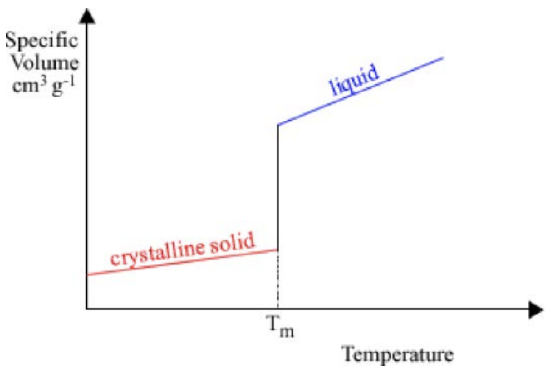

One way to quantify the processing conditions is to look at how the molar volume changes as a function of temperature. For a crystal, the plot looks like this. There’s a sloped line that corresponds to the solid material, then a jump, then a different sloped line that corresponds to the liquid phase. The jump occurs at the melting temperature \(T_m\): when the material melts or freezes, it undergoes a huge change in volume. The slope of each line is defined as the coefficient of thermal expansion. However, sometimes when a material is cooled, it can remain in the liquid phase below \(T_m\): this is called supercooling. When a liquid is supercooled, it continues to act like a liquid until one of two things happens:

1. It crystallizes, characterized by a big jump down to the crystalline solid line and then solid behavior

2. It suddenly becomes a solid, “freezing” in its disordered state and becoming a glass. This transition is characterized by a change in slope at \(T_g\), the point at which solid forms (called the glass transition temperature), but no discontinuity in the freezing curve.

How can we know which path a material will take? It depends on materials properties: if the liquid has a high mobility (low viscosity), the molecules can move around easily and arrange into the energetically-preferential crystalline structure. Highly viscous or low mobility liquids are much more likely to get stuck in a glass. Further, if the crystalline structure is very complicated, or if the liquid is cooled very quickly, it’s hard for the atoms to find crystalline sites before the solid forms: these cases are also more likely to lead to glass formation.

The volume per mole is a good measure of the disorder in the material: the further the molar volume is from the crystalline case, the more glassy the material is. Although a material only has one melting point, it can have multiple glass transitions depending on how it is processed. XRD is one tool that can be used to determine whether a material is a crystal or a glass: as the material gets more and more disordered, the sharp peaks observed in the XRD pattern disappear into a broad amorphous halo.
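The two cooling paths described above can be sketched as a simple piecewise-linear model of molar volume versus temperature. Everything numerical here is a made-up assumption for illustration (the temperatures, slopes, and the size of the jump at \(T_m\)), and `molar_volume` is a hypothetical helper; only the shape of the curves follows the description in the text.

```python
def molar_volume(temp, path, *, t_m=1000.0, t_g=800.0,
                 v_liquid_at_tm=30.0, slope_liquid=0.010,
                 slope_solid=0.003, melt_jump=2.0):
    """Molar volume (arbitrary units) along a 'crystal' or 'glass' path.

    Liquid and supercooled liquid share one line with slope_liquid.
    The crystal drops by melt_jump at t_m and then follows slope_solid.
    The glass leaves the liquid line at t_g with no jump, only a change
    in slope to slope_solid.
    """
    liquid_line = v_liquid_at_tm + slope_liquid * (temp - t_m)
    if temp >= t_m:
        return liquid_line
    if path == "crystal":
        return v_liquid_at_tm - melt_jump + slope_solid * (temp - t_m)
    if path == "glass":
        if temp >= t_g:
            return liquid_line  # supercooled liquid
        v_at_tg = v_liquid_at_tm + slope_liquid * (t_g - t_m)
        return v_at_tg + slope_solid * (temp - t_g)
    raise ValueError("path must be 'crystal' or 'glass'")


v_crystal = molar_volume(500.0, "crystal")
v_glass_low_tg = molar_volume(500.0, "glass", t_g=800.0)
v_glass_high_tg = molar_volume(500.0, "glass", t_g=850.0)
print(f"crystal:          {v_crystal:.2f}")
print(f"glass, T_g = 800: {v_glass_low_tg:.2f}")
print(f"glass, T_g = 850: {v_glass_high_tg:.2f}")
```

In this toy model a glass that falls out of the liquid line at a higher \(T_g\) ends up with a molar volume further from the crystalline value, which matches the idea that molar volume tracks how glassy the material is.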

Why This Matters

\(\mathrm{SiO}_2\) glass is made from sand and there's a whole lot of it on the planet. \(\mathrm{SiO}_2\) stands out as a base material that has really awesome properties. That's partly because of its tremendous abundance. Check out this plot of the abundance of atoms in the earth's crust. Note that of all of the elements in the periodic table, oxygen and silicon are #1 and #2. This means that silica is cheap, and we're not going to run out, unlike other elements. In fact, there are many "critical elements" like \(\mathrm{Li}\), \(\mathrm{Co}\), \(\mathrm{Ga}\), \(\mathrm{Te}\), and \(\mathrm{Nd}\), to name a few, labeled as such by the Department of Energy because there is concern that there will not be enough of them in the future to meet global demand. But \(\mathrm{Si}\) and \(\mathrm{O}\) are the opposite of critical: they are dramatically abundant. And this presents tremendous opportunity to use \(\mathrm{SiO}_2\) as a base material for wide-ranging applications.

And this is why the chemistry that we learned in this lecture matters: because we learned that the key properties of glass, and the way that it’s processed, all come from the chemistry. The ways in which we continue to use glass on this planet can increase as human population and technological needs/use continue to increase, but how can we utilize glass more sustainably given that the base material is so abundant? Or what other applications can glass be useful for where it’s not used today? Can glass be made “greener”? One cool example for how this could work is in the work of Markus Kayser, who invented the “Solar Sinter.” This is a self-sufficient 3D printer of glass objects that you can drive out into the desert, feed the sand and sunlight that are both plentiful, and print glass objects. The sunlight is used both to power the electric motor of the printer and to create enough thermal energy to get above \(T_g\) for the sand. You can now buy commercial 3D glass printers, but I like this example because it’s emission-neutral.

Photo of Markus Kayser's Solar Sinter

The future of how far we push technologies like this will depend on how far we’re able to push the properties of glass. In the next lecture we’re going to discuss a few different ways to control glass properties, but you only need today’s lecture and an understanding of those silicate groups to understand the key link between chemistry and why this matters.

Why this employs

Corning is one of the biggest and most well-known glass makers in the world. That video I mentioned in the lecture above that I showed in class, about Prince Rupert’s Drop, that was from the "Corning Museum of Glass" educational series. They are really, really into glass. And they're big: their 2018 revenue was \(\$ 11.4 \mathrm{~B}\) and they've got 51,500 employees currently. On their website, if you click on the "engineering" section of the job openings page, you'll find hundreds of listings. They've also got a ton of internships for students: "A Corning internship offers valuable hands-on experience for individuals in their chosen discipline to include but not limited to Material Science, Engineering, Research, Manufacturing, IT, HR, Marketing, Finance and Supply Chain." It's a cool place that has made it big out of glass and made glass into a big deal.

Corning works on a wide range of applications of glass, but let's focus on just one of them: fiber optic cables. Most of the backbone of today's internet is served by fiber optics, which are made of silica glass, because they have so many advantages over (older) copper wiring. For example, fiber optic cables can carry much higher bandwidth over longer distances than copper, which lessens the need for signal boosters; they are also less susceptible to interference from external electromagnetic fields, so they don't need shielding; and they don't break down or corrode nearly as often, so they're much less expensive to maintain. It's no wonder that major U.S. providers like Verizon FiOS and Google Fiber are working to get fiber optic cables beyond just the internet backbone and into literally every single building in the country. Unlike Corning, which many of us may have heard of already, some of the top fiber optics companies are less known even while being huge companies (meaning: lots of jobs!). Take OFS Optics, which makes fiber optic cables for over 50 different application spaces. They have over \(\$ 250 \mathrm{M}\) in annual revenues, and one of their job postings for an "RD Engineer" states that they are looking for someone to "lead the development of manufacturing processes for the next generation of glass optical fiber products." Cool.

Or how about going international to companies like Prysmian (based in Italy), which has over \(\$ 1 \mathrm{~B}\) in annual revenue, has a "Graduate Program" for recent grads to immerse them quickly with a mentorship program, and also has the coolest name for a fiber with their "BendBright" brand. Or there's YOFC, based in China, with over \(\$1 \mathrm{~B}\)/year in revenue and a nice slogan, "Smart Link Better Life," or Fujikura, with \(\$ 7 \mathrm{~B}\) annual sales and a claim to be "Shaping the Future with Tsunagu Technology" (that means "connecting"). These and so many more companies are working on making next-generation fiber-optic cables, and if you dig a few layers deep into any of them, you'll see how complicated the production of fiber optic cables is, how many different ways it can be done today and will be done in the future, and how many jobs there are that directly relate to knowledge of \(\mathrm{SiO}_2\) glass!

It's not just all about processing: there is fundamental chemistry research to be done on silica glass, too. By doping silica glass with other elements, its properties can be changed. For example, by adding Erbium ions, the glass transforms from a passive light carrier to an amplifier capable of making the signal several orders of magnitude higher. And if you're interested in coding, there's a lot of work to be done to simulate how light travels in media like glass, and how it interacts with these dopants.

Extra practice

1. You obtain the following free volume vs temperature curves for a material cooled at three different rates. Label all instances of the following phenomena on the plot (and the axes!):

a) \(T_g\)

b) \(T_m\)

c) glassy regime

d) crystalline regime

e) fastest cooling rate

f) slowest cooling rate

g) liquid

h) supercooled liquid

- Answer

-

Lecture 26: Engineering Glass Properties

Summary

What does it mean to engineer glass? It can mean adding impurities that change properties like the glass transition temperature \(\left(T_g\right)\), the solubility, the durability, etc. What unites most of these glass modifiers is that they are oxide donors, meaning they give up an \(\mathrm{O}^{2-}\) ion. This implies that these modifiers have stable cations, so often metals are good. For example:

\(\mathrm{CaO} \rightarrow \mathrm{Ca}^{2+}+\mathrm{O}^{2-} \quad \mathrm{Na}_2 \mathrm{O} \rightarrow 2 \mathrm{Na}^{+}+\mathrm{O}^{2-} \quad \mathrm{Al}_2 \mathrm{O}_3 \rightarrow 2 \mathrm{Al}^{3+}+3 \mathrm{O}^{2-}\)

The donated \(\mathrm{O}^{2-}\) ion attacks the \(\mathrm{Si} - \mathrm{O} - \mathrm{Si}\) bond and breaks it into two. It's like a knife that cuts the glass bond, and so this process is called chain scission. The \(\mathrm{O}^{2-}\) is able to insert itself into the bond and with its two extra electrons satisfies the charge state of the oxygen atoms that now "cap" the chains on each end. So we have that \(\mathrm{Si}-\mathrm{O}-\mathrm{Si}+\mathrm{O}^{2-} \rightarrow \mathrm{Si}-\mathrm{O} \mid \mathrm{O}-\mathrm{Si}\) with negative charge on each \(\mathrm{O}\). As shown in the figure, the \(\mathrm{Na}^{+}\) ions hang around the oxygen. The effect of chain scission on the properties of glasses is enormous. Just take the melting temperature as an example: for crystalline \(\mathrm{SiO}_2\) (quartz) the \(T_m\) is greater than \(1200^{\circ} \mathrm{C}\). For soda-lime glass, the glass transition temperature is typically around \(500^{\circ} \mathrm{C}\). If the silicate chains are cut, then the material is much less viscous, and it can find better packing more easily, leading to lower volume per mole and also a lower glass transition temperature (more supercooling).

The base chemistry of the solid, which in the case described above is \(\mathrm{SiO}_2\), is the network former. The oxide donor is called the network modifier. Adding network modifiers is another way to change a glass cooling curve. For example, curve (b) to the right could be obtained using \(\mathrm{SiO}_2\) with \(5 \% \mathrm{PbO}\) and curve (a) using \(\mathrm{SiO}_2\) with \(10 \% \mathrm{PbO}\). The reason is that more cutting of the chains makes the material less viscous, which means it can find better packing and be supercooled more.
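A rough way to compare how much chain cutting different modifier contents cause is to count the capped (non-bridging) oxygens per silicon. The sketch below assumes the percentages are mole percent, that every donated \(\mathrm{O}^{2-}\) cuts exactly one \(\mathrm{Si}-\mathrm{O}-\mathrm{Si}\) bridge, and that the modifier donates one oxide ion per formula unit (as \(\mathrm{Na}_2\mathrm{O}\), \(\mathrm{CaO}\), and \(\mathrm{PbO}\) do). The function name and these simplifications are illustrative assumptions, not a quantitative model.

```python
def non_bridging_oxygens_per_si(modifier_mol_fraction, oxide_ions_per_formula=1):
    """Non-bridging oxygens per Si atom in a modifier-SiO2 glass.

    Per mole of glass: x moles of modifier donate x * oxide_ions_per_formula
    oxide ions, each of which cuts one bridge and leaves two capped oxygens;
    the remaining (1 - x) moles are SiO2, i.e. (1 - x) moles of Si.
    """
    x = modifier_mol_fraction
    if not 0 <= x < 1:
        raise ValueError("modifier_mol_fraction must be in [0, 1)")
    oxide_ions = x * oxide_ions_per_formula
    si_atoms = 1 - x
    return 2 * oxide_ions / si_atoms


for percent in (5, 10, 14, 25):
    nbo = non_bridging_oxygens_per_si(percent / 100)
    print(f"{percent:>2}% modifier: {nbo:.2f} non-bridging O per Si")
```

More cut bridges per silicon means a less connected, less viscous network, which is the trend behind the lower \(T_g\) and lower molar volume described above for the glass with more modifier.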

We discussed two ways that mechanical properties are engineered in glass. First, the glass can be tempered: molten glass is cooled down with air, and if the outside of the glass solidifies while the inside is still a liquid, then the outside has a completely different volume per mole than the inside. When the hot melted \(\mathrm{SiO}_2\) on the inside later solidifies, it cannot reach the smaller volume that it would like to have, so it puts an inward pressure (compressive stress) on the already-solid outside layer. The second method of glass strengthening is called ion exchange. It involves swapping ions left in glass by network modifiers with ions of different size, which creates compressive stress.

Why this matters

The ability to engineer glass with wide-ranging properties has led to its use in a whole lot more than windows. How about: doors, façades, plates, cups, bowls, insulation, food storage, bottles, solar panels, wind turbines, mirrors, balustrades, tables, partitions, cook tops, ovens, televisions, computers, phones, aircraft, ships, windscreens, backlights, medical technology, optical glass, biotechnology, fiber optic cables, radiation barriers. And on top of that, glass is almost fully recyclable. The main reason glass has become so ubiquitous in all of these different ways is because of its massive chemical tunability as discussed in this lecture.

But here I want to focus on one particular property: strength. We talked today about using compressive stress to make glass stronger. But what if glass could be made stronger still? What if it could be made stronger than major structural materials like steel? In research labs, that is exactly what is happening. For example, in a Nature Materials paper from 2011 (doi:10.1038/nmat2930), the authors made a certain type of metallic glass stronger than steel and critically also tougher than steel. That means that not only does it have a high Young’s modulus, but when it breaks it can deform plastically as opposed to shattering. They created this new material through its chemistry, by adding a touch of palladium and a dash of silver to the mix. It already had a bit of phosphorus, silicon, and germanium, but by adding the palladium and silver, the glass was able to surpass steel in both hardness and toughness. Since then, many more demonstrations of mechanically super-strong glass have been made (often trying to avoid palladium, which costs $50,000 per kg). Here’s a plot from that same paper, showing the fracture toughness vs. the yield strength of different materials. Again, the yield is related to how much force the material can withstand without breaking, and the toughness is how much it can break without shattering. Going up on both axes can be very appealing for many applications. I love plots like this (called “Ashby plots”) since we can right away compare a bunch of different materials, in this case oxides, ceramics, polymers, metals, and of course their own new stuff (shown as “x” marks on the plot). Note how strong regular old oxide glasses are but also how little toughness they have (when they give, they shatter). But notice also how much tougher they can get by engineering their chemistry. This could put amorphous materials on a trajectory to becoming some of the most, if not the most, damage-tolerant materials in the world!

Why this employs

In the last lecture for this section I listed glass manufacturing and companies working on innovating in glass chemistry. For this chapter on engineered glass, let’s talk about smarts. In particular, “smart glass.” For now, that label means one specific type of silicate-based glass: switchable glass. It has been around for a long time, as even in the 1980’s you may have noticed (ok, your parents may have noticed) people wearing the sunglasses that automatically tinted and de-tinted in response to the sun (that used what are called “thermochromic” materials embedded in the glass, which change color based on temperature). They never worked all that well, staying a little too shaded inside and a little too unshaded outside, but the idea was there. But now we’ve gone from thermo- to electro-chromic glass, and the possibilities are seriously exciting. With a tiny applied voltage, glass can be engineered to go back and forth between near-full transparency and near-full opacity. Apart from being extremely cool, this type of technology can have a lot of positive sustainability-related benefits, since the glass can be programmed to automatically dim and brighten in response to outdoor light conditions, which can in turn dramatically reduce a building’s energy needs.

This type of smart glass is still on the early side, although a number of companies are taking off, and that means jobs. These will be jobs at early- or mid-stage start-ups, but in some cases they’ve closed mega (>$100M) fund-raising rounds, so growth is definitely strong. Some companies in this space include Kinestral, Smartglass, View, Suntuitive, Gentex, Intelligent Glass, and Glass Apps. A lot of the investment in these companies is coming from the bigger ones like Asahi Glass or Corning, which have of course also started their own smart glass programs. Taken together, all of this spells jobs in the future of glass. And its future looks very bright, far beyond switching the color or transparency, as the thermal, electronic, and optical properties of the material continue to be engineered. We may or may not be living in the “Age of Glass,” as Corning likes to say, but we sure are living in an exciting time for this material.

Example Problems

1. The 2-D structure of soda-lime glass (used in windows) is shown below.

a) What compounds were used to make this glass? Do these compounds serve as network formers or network modifiers?

- Answer

\(\mathrm{SiO}_2\) : network former

\(\mathrm{CaO}\) : network modifier

\(\mathrm{Na}_2 \mathrm{O}\) : network modifier

b) How do each of the added compounds impact the bond structure in the glass?

- Answer

\(\mathrm{CaO}\) : breaks one bond/creates two network modifiers (coordinated with \(1 \mathrm{Ca}^{2+}\) ion)

\(\mathrm{Na}_2 \mathrm{O}\) : breaks one bond/ creates two network modifiers (coordinated with \(2 \mathrm{Na}^{+}\) ions)

3. If they are cooled at the same rate, would you expect silica glass with 14% \(\mathrm{Na}_2\mathrm{O}\) or 25% \(\mathrm{Na}_2\mathrm{O}\) to have a:

Higher molar volume?

Higher glass transition temperature?

Higher viscosity?

- Answer

\(14 \%\) would have the higher molar volume

\(14 \%\) would have the higher glass transition temperature

\(14 \%\) would have the higher viscosity

4. If a silica glass is doped with \(\mathrm{MgO}\), and then ion exchange is performed such that \(\mathrm{Ca}\) ions replace the \(\mathrm{Mg}\) ions, how would the mechanical properties of the glass change?

- Answer

\(\mathrm{Ca}\) ions take up more space than the \(\mathrm{Mg}\) ions, so the glass will be under internal compression (like the Prince Rupert's drop)

Lecture 27: Reaction Rates

Summary

Chemical kinetics means the study of reaction rates, which correspond to changes in concentrations of reactants and products with time. Some terms to know: concentration \(=\) moles / liter \(=\) molarity \(=[]\), rate \(=\mathrm{d}[] / \mathrm{dt}\), a rate law is an equation that relates the rate to [ ], an integrated rate law relates [ ] to \(\mathrm{t}\)(ime), and the Arrhenius equation gives us the rate vs. \(\mathrm{T}\)(emperature).

Take a simple reaction \(a \mathrm{A} \rightarrow b \mathrm{B}\): since mass is conserved, \(\mathrm{A}\) disappears no faster than \(\mathrm{B}\) appears, so the actual reaction rate is \(\dfrac{1}{b} \dfrac{d[B]}{d t}=\dfrac{-1}{a} \dfrac{d[A]}{d t}\). In other words, the change in the concentration of \(\mathrm{B}\) must equal the opposite of the change in concentration of \(\mathrm{A}\), each weighted by one over its molar coefficient (b or a). We can have more than one reactant or product and the same idea holds. For example, suppose we have two of each: \(a \mathrm{A}+b \mathrm{B} \rightarrow c \mathrm{C}+d \mathrm{D}\). In this case the reaction rate would be:

\(\text { rate }=\dfrac{-1}{a} \dfrac{d[A]}{d t}=\dfrac{-1}{b} \dfrac{d[B]}{d t}=\dfrac{1}{c} \dfrac{d[C]}{d t}=\dfrac{1}{d} \dfrac{d[D]}{d t}\)
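As a quick worked check of the bookkeeping above (the coefficients and the numerical value here are assumed purely for illustration, not taken from the lecture), take \(2 \mathrm{A} \rightarrow \mathrm{B}\) and suppose \(\mathrm{A}\) is being consumed at \(0.010 \mathrm{~M} / \mathrm{s}\), so \(\dfrac{d[A]}{d t}=-0.010 \mathrm{~M} / \mathrm{s}\). Then:

\(\text { rate }=\dfrac{-1}{2} \dfrac{d[A]}{d t}=\dfrac{-1}{2}(-0.010 \mathrm{~M} / \mathrm{s})=0.0050 \mathrm{~M} / \mathrm{s}\)

\(\dfrac{d[B]}{d t}=1 \times \text { rate }=0.0050 \mathrm{~M} / \mathrm{s}\)

So \(\mathrm{B}\) appears half as fast as \(\mathrm{A}\) disappears, while the reaction rate itself is a single positive number no matter which species we track.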

The general way to write an equation for the rate of the reaction above is: rate \(=\mathrm{k}[\mathrm{A}]^m[\mathrm{~B}]^n\), where \(\mathrm{k}=\) rate constant and is dependent on conditions (\(\mathrm{T}, \mathrm{P}\), solvent), \(m\) and \(n\) are exponents determined experimentally, and \(m+n\) is called the reaction order. Note that the rate units must always be M/s by definition, so this means that the units of \(\mathrm{k}\) depend on \(n\) and \(m\). For this class we’ll cover three different orders of reactions: \(0^{th}, 1^{st},\) and \(2^{nd}\).
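To make the statement about units concrete, here is a short worked check for a single reactant, assuming the rate is in M/s and the concentration in M as defined above:

\(0^{th}\) order: \(\text { rate }=k\), so \(k\) has units of \(\mathrm{M} / \mathrm{s}\)

\(1^{st}\) order: \(\text { rate }=k[\mathrm{A}]\), so \(k\) has units of \((\mathrm{M} / \mathrm{s}) / \mathrm{M}=\mathrm{s}^{-1}\)

\(2^{nd}\) order: \(\text { rate }=k[\mathrm{A}]^2\), so \(k\) has units of \((\mathrm{M} / \mathrm{s}) / \mathrm{M}^2=\mathrm{M}^{-1} \mathrm{~s}^{-1}\)

The pattern is that for an overall order of \(m+n\), \(k\) carries units of \(\mathrm{M}^{1-(m+n)} \mathrm{s}^{-1}\).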

To determine the order of a reaction based on data tables like the one below, take any two rows of data: say the \(\mathrm{t}=26 \mathrm{~min}\) and \(\mathrm{t}=70 \mathrm{~min}\) rows. The concentration ratio between these two times is \(0.0020 / 0.0034=0.5882\). The rate ratio is \(1.8 / 5.0=0.36\).

First of all the rate is changing so it can’t be 0th order. Second of all, at two different times the ratio of concentrations is not equal to the ratio of rates, so it can’t be 1st order. But if we square the ratio of concentrations, \((0.0020/0.0034)^2 = 0.35\) which is very close to \(0.36\), so now we have our answer: from the data we can say the reaction is 2nd order!
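The same comparison can be done in one step by taking logarithms: if rate \(=\mathrm{k}[\mathrm{A}]^n\), then the ratio of two rates equals the ratio of the concentrations raised to the power \(n\). The sketch below is a minimal illustration of that idea, assuming a single-reactant rate law; the function name `reaction_order` is made up for this example, and the numbers are the ones quoted in the text.

```python
import math


def reaction_order(conc_1, rate_1, conc_2, rate_2):
    """Estimate n in rate = k [A]^n from two (concentration, rate) pairs.

    Taking the ratio of the two rate laws cancels k:
        rate_2 / rate_1 = (conc_2 / conc_1) ** n
    so n = ln(rate ratio) / ln(concentration ratio).
    """
    return math.log(rate_2 / rate_1) / math.log(conc_2 / conc_1)


# Values quoted in the text; units cancel inside each ratio.
n = reaction_order(conc_1=0.0034, rate_1=5.0, conc_2=0.0020, rate_2=1.8)
print(f"estimated order: {n:.2f}")
```

The estimate comes out close to 2, which matches the conclusion reached above by squaring the concentration ratio. With real data the result is rarely a perfect integer, so it is rounded to the nearest sensible order.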

To know the role of temperature in determining reaction rates, we must first learn about collision theory. Collision theory frames the reaction between molecules, say A and B, as follows: 1) a reaction can only occur when A and B collide, 2) not all collisions result in the formation of product, 3) there are two factors that matter most: the energy of the collision, and the orientation of molecule A with respect to B at the time of collision.

We can think of the energy required for \(\mathrm{A}\) to react with \(\mathrm{B}\) as a kind of “activation energy,” or \(\mathrm{E}_a\). As we learned in chapter 14 (phases), molecules at a given temperature have a distribution of kinetic energies, with the temperature setting the average. That means some molecules have much more energy than the average, while others have less. Reactions are similar in that it’s the part of the distribution higher than the activation energy that matters. This plot shows how this works: the distribution of energies for a given molecule at two different temperatures shows that at the higher temperature more molecules will have energies above the activation energy than at the lower temperature.
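To get a feel for how sensitive that high-energy tail is to temperature, here is a rough numerical sketch. It uses the simple Boltzmann factor \(e^{-E_a / R T}\) as a stand-in for the fraction of collisions with enough energy, which is an approximation rather than the full distribution, and the activation energy and temperatures are hypothetical values chosen only for illustration.

```python
import math

R = 8.314  # ideal gas constant in J/(mol*K)


def fraction_above(ea_j_per_mol, temp_k):
    """Approximate fraction of collisions with energy above Ea (Boltzmann factor)."""
    return math.exp(-ea_j_per_mol / (R * temp_k))


EA = 50_000.0  # hypothetical activation energy, J/mol
f_cold = fraction_above(EA, 300.0)
f_hot = fraction_above(EA, 310.0)

print(f"fraction at 300 K: {f_cold:.3e}")
print(f"fraction at 310 K: {f_hot:.3e}")
print(f"ratio hot/cold:    {f_hot / f_cold:.2f}")
```

Under these assumed numbers, a 10 K increase nearly doubles the fraction of sufficiently energetic collisions, even though the average energy barely changes.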

The Arrhenius equation gives us an expression that summarizes the collision model of chemical kinetics. It goes as follows: rate = (collision frequency)*(a steric factor)*(the fraction of collisions with \(\mathrm{E} > \mathrm{E}_a\)). In math terms, the rate constant \(\mathrm{k}\) is written as:

\(k=A e^{-E_a / R T}\)

\(\mathrm{A}=\) the frequency factor, and its units depend on the reaction order. For example, if the reaction is first order then the frequency factor must have units of \(\mathrm{s}^{-1}\). The activation energy, \(\mathrm{E}_a\), we've already discussed. If it's given in units per mole, like \(\mathrm{J} / \mathrm{mol}\), we use \(\mathrm{R}\) as it's written, where \(\mathrm{R}\) is the ideal gas constant, \(\mathrm{R}=8.314 \mathrm{~J} /(\mathrm{mol} \cdot \mathrm{K})\). If the activation energy is given in units of \(\mathrm{eV}\), then the constant used would be the Boltzmann constant in units of \(\mathrm{eV} / \mathrm{K}\) \(\left(8.61733 \times 10^{-5} \mathrm{~eV} / \mathrm{K}\right)\).
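The two unit conventions give the same exponent, which is easy to verify numerically. The sketch below is only a consistency check: the activation energy and temperature are hypothetical, and the eV-to-J/mol conversion factor (about 96,485 J/mol per eV) is a standard value that is not quoted in the text.

```python
R = 8.314                  # J/(mol*K), value quoted in the text
K_B_EV = 8.61733e-5        # eV/K, value quoted in the text
EV_TO_J_PER_MOL = 96485.0  # approximate J/mol per eV (assumed, not from the text)


def exponent_molar(ea_j_per_mol, temp_k):
    """Ea/(R*T) when the activation energy is given per mole."""
    return ea_j_per_mol / (R * temp_k)


def exponent_ev(ea_ev, temp_k):
    """Ea/(kB*T) when the activation energy is given per particle in eV."""
    return ea_ev / (K_B_EV * temp_k)


ea_ev = 0.50    # hypothetical activation energy in eV
temp_k = 300.0  # hypothetical temperature in K

x_ev = exponent_ev(ea_ev, temp_k)
x_molar = exponent_molar(ea_ev * EV_TO_J_PER_MOL, temp_k)
print(f"Ea/(kB*T) = {x_ev:.3f}, Ea/(R*T) = {x_molar:.3f}")
```

The two numbers agree to within rounding of the constants, so the choice between \(\mathrm{R}\) and the Boltzmann constant is purely a matter of which units the activation energy comes in.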

This relationship means that if we plot the natural \(\log\) of the rate constant \(\mathrm{k}\) vs. \(1 / \mathrm{T}\), it should be a straight line, \(\ln k=\ln A-\dfrac{E_a}{R} \dfrac{1}{T}\), with slope \(=-\mathrm{E}_a / \mathrm{R}\) and intercept \(=\ln (\mathrm{A})\), as shown in the plot above.
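Because the relationship is a straight line in \(\ln k\) vs. \(1 / \mathrm{T}\), two measurements of the rate constant at two temperatures are in principle enough to pin down both \(\mathrm{E}_a\) and \(\mathrm{A}\). The sketch below illustrates this with synthetic data: the "true" values of \(\mathrm{A}\) and \(\mathrm{E}_a\) are invented, and the function names are hypothetical. Real data would normally use more than two points and a least-squares fit.

```python
import math

R = 8.314  # ideal gas constant in J/(mol*K)


def arrhenius_k(a, ea, temp_k):
    """Rate constant from the Arrhenius equation k = A * exp(-Ea / (R*T))."""
    return a * math.exp(-ea / (R * temp_k))


def fit_two_points(t1, k1, t2, k2):
    """Recover (Ea, A) from two (T, k) pairs using ln k = ln A - (Ea/R)(1/T)."""
    slope = (math.log(k2) - math.log(k1)) / (1.0 / t2 - 1.0 / t1)
    ea = -slope * R                           # slope = -Ea/R
    ln_a = math.log(k1) - slope * (1.0 / t1)  # intercept = ln A
    return ea, math.exp(ln_a)


# Synthetic "measurements" generated from assumed parameters.
A_TRUE = 1.0e13      # hypothetical frequency factor, 1/s
EA_TRUE = 75_000.0   # hypothetical activation energy, J/mol
t1, t2 = 300.0, 350.0
k1 = arrhenius_k(A_TRUE, EA_TRUE, t1)
k2 = arrhenius_k(A_TRUE, EA_TRUE, t2)

ea_fit, a_fit = fit_two_points(t1, k1, t2, k2)
print(f"Ea = {ea_fit:.1f} J/mol, A = {a_fit:.3e} 1/s")
```

The fit returns the parameters that were used to generate the data, which is just a check that the slope and intercept are being read correctly.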

So we’ve covered concentration, and now temperature. The last example of a way to change the rate of a reaction that we’ll mention (and unlike those other two, we’ll really just mention it and not go into detail) is the catalyst. A catalyst is a way to increase the rate of a reaction without having anything consumed as part of it. It’s a material that, in the language of our discussion on Arrhenius above, lowers the activation energy for the reaction.
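To see why lowering the activation energy matters so much, here is a back-of-the-envelope sketch based on the Arrhenius form of the rate constant. It assumes the catalyst changes only \(\mathrm{E}_a\) and leaves the frequency factor \(\mathrm{A}\) untouched, which is a simplification, and both activation energies and the temperature are hypothetical.

```python
import math

R = 8.314  # ideal gas constant in J/(mol*K)


def rate_enhancement(ea_uncatalyzed, ea_catalyzed, temp_k):
    """Ratio k_catalyzed / k_uncatalyzed, assuming the same frequency factor A."""
    return math.exp((ea_uncatalyzed - ea_catalyzed) / (R * temp_k))


# Hypothetical barriers in J/mol at a hypothetical temperature in K.
speedup = rate_enhancement(100_000.0, 80_000.0, 500.0)
print(f"rate constant increases by a factor of about {speedup:.0f}")
```

With these assumed numbers, trimming the barrier by 20% speeds the reaction up by roughly two orders of magnitude, because the activation energy sits inside the exponential.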

Why this matters

Let's keep going with the catalyst theme for this section. It is estimated that \(\approx 90 \%\) of all commercially produced chemical products involve catalysts at some stage in the process of their manufacture! Some of these processes I've already highlighted in other Why This Matters moments, like the Haber-Bosch process for fixing \(\mathrm{N}_2\), or the depletion of \(\mathrm{O}_3\) by CFCs. At the time, we hadn't learned about reaction rates or catalysts, so I didn't go into it. But in both cases the role of the catalyst is absolutely essential. In fact, the big innovation of Haber-Bosch was not discovering the reaction, which was already known, but rather discovering a catalyst that lowers the temperature needed to make the reaction happen economically and at large scales.

Let's discuss another world-changing catalytically enhanced reaction: namely, the removal of most toxic emissions from cars and trucks. I know, you may be thinking that the tailpipe of a car smells pretty toxic. And that's because it is, but it's a whole lot better than it used to be, and the reason is the catalysts that are now part of every tailpipe in the form of what is called the catalytic converter. Why did we need these in the first place? It all goes back to the very first reaction we wrote on the first day of lecture: combustion. One example I gave was the combustion of methane:

\(\mathrm{CH}_4+2 \mathrm{O}_2 \rightarrow 2 \mathrm{H}_2 \mathrm{O}+\mathrm{CO}_2\)

It's true that \(\mathrm{CO}_2\) is harmful to the environment for reasons of climate change, but there's nothing toxic in those products... so what's the problem?

Ah, if only cars burned pure methane! But gasoline is far, far away from a pure fuel source. And furthermore, even modern car engines are far, far away from being able to burn the fuel perfectly without side-reactions. Gasoline is a mixture of about 150 different chemicals, and these include not just those hydrocarbons that combust, but also a host of additives that range in purpose from corrosion inhibitors to lubricants to oxygen boosters. Since this complex chemical soup doesn’t burn cleanly, we get both direct products and by-products that go far beyond the pure case of \(\mathrm{H}_2\mathrm{O}\) and \(\mathrm{CO}_2\). Many of these products are pollutants and some are really bad ones. An incomplete list would include: carbon monoxide (\(\mathrm{CO}\)) which is poisonous, nitrogen oxides (like \(\mathrm{NO}\) and \(\mathrm{NO}_2\), or “\(\mathrm{NOX}\)” as they’re called) which cause smog and have many adverse health effects, sulfur oxides (yes, you guessed it, “\(\mathrm{SOX}\)”) which cause acid rain, and unburned hydrocarbons or volatile organic compounds (VOCs) which cause cancer.

Similar to the removal of CFCs from refrigerants, cleaning up the tailpipe represents a fantastic example of how policy and regulation can make the world better. It was the Clean Air Act that Congress passed in 1970 that gave the newly-formed EPA the legal authority to regulate this toxic mess that came out of cars. As a result, today's cars produce \(\approx 99 \%\) fewer emissions compared to the 1960s! The fuels are also cleaner (lead was removed and sulfur levels lowered), and taken together cities have much healthier air. Take a look at this picture of New York City from 1973 (left) compared to 2013 (right). In the 1970s, smog from car exhaust overwhelmed most major U.S. cities.

The key technology that enabled this dramatic clean-up is the catalytic converter. Inside a catalytic converter there are actually multiple catalysts, each enhancing different reactions. Most cars today use what is called a "three-way" catalyst, which just means that it tackles all three of the biggest pollutants: NOX, hydrocarbons, and \(\mathrm{CO}\). The key materials used as the catalysts are a combination of platinum, palladium, and rhodium. Inside a catalytic converter one typically has a honeycomb mesh just to get a large surface area. Since the temperature gets quite high (and in fact needs to be high for the catalysts to operate, which is why cold engines pollute more than hot ones), the honeycomb mesh is made out of a ceramic material like alumina so it can handle \(\mathrm{T}=500^{\circ} \mathrm{C}\) without cracking or degradation. The \(\mathrm{Pt}\), \(\mathrm{Pd}\), and \(\mathrm{Rh}\) metals coat the \(\mathrm{Al}_2 \mathrm{O}_3\) mesh, and the exhaust flows through.

Take a look at this catalytic converter schematic. You can see two different chambers, one where the metal acts as a "reduction catalyst" for the NOX removal and the other where a different metal (or combination of metals) acts as an "oxidation catalyst" to treat \(\mathrm{CO}\) and unburned hydrocarbons. In the reduction catalyst chamber, the reaction we're trying to accelerate is \(2 \mathrm{NO} \rightarrow \mathrm{N}_2+\mathrm{O}_2\). In order to do so, the catalyst binds the \(\mathrm{NO}\) molecule to it, and is then able to pull off the nitrogen atom from \(\mathrm{NO}\) and hold it in place. Then another \(\mathrm{N}\) atom that also got pulled off of a different \(\mathrm{NO}\) molecule gets stuck to the catalyst somewhere nearby, and those two \(\mathrm{N}\) atoms can combine to form \(\mathrm{N}_2\). Likewise, the oxygen can form \(\mathrm{O}_2\). The whole point is that the catalyst finds a different way to carry out the same reaction with much lower barriers. The oxidation catalyst burns \(\mathrm{CO}\) and hydrocarbons using remaining \(\mathrm{O}_2\) gas, for example to get this reaction to go: \(2 \mathrm{CO}+\mathrm{O}_2 \rightarrow 2 \mathrm{CO}_2\). Again, that reaction would not occur at a high rate normally, but the catalyst breaks it down into steps (like splitting \(\mathrm{CO}\)) that occur much more easily.

Why this employs