4.3: Toxicity Testing


Author: Kees van Gestel

Reviewer: Michiel Kraak

Learning objectives:

You should be able to

- Mention the two general types of endpoints in toxicity tests

- Mention the main groups of test organisms used in environmental toxicology

- Mention different criteria determining the validity of toxicity tests

- Explain why toxicity testing may need a negative and a positive control

Keywords: single-species toxicity tests, test species selection, concentration-response relationships, endpoints, bioaccumulation testing, epidemiology, standardization, quality control, transcriptomics, metabolomics

Introduction

Laboratory toxicity tests may provide insight into the potential of chemicals to bioaccumulate in organisms and into their hazard, the latter usually being expressed as toxicity values derived from concentration-response relationships. Section 4.3.1 on Bioaccumulation testing describes how to perform tests to assess the bioaccumulation potential of chemicals in aquatic and terrestrial organisms, under static and dynamic exposure conditions. Basic to toxicity testing is the establishment of a concentration-response relationship, which relates the endpoint measured in the test organisms to exposure concentrations. Section 4.3.2 on Concentration-response relationships elaborates on the calculation of relevant toxicity parameters, like the median lethal concentration (LC50) and the median effective concentration (EC50), from such toxicity tests. It also discusses the pros and cons of different methods for analyzing data from toxicity tests.

Several issues have to be addressed when designing toxicity tests that should enable assessing the environmental or human health hazard of chemicals. These include the selection of test organisms (see section 4.3.4 on the Selection of test organisms for ecotoxicity testing), exposure media, test conditions, test duration and endpoints, as well as clear criteria for checking the quality of the toxicity tests performed (see below). Different whole organism endpoints that are commonly used in standard toxicity tests, like survival, growth, reproduction or avoidance behavior, are discussed in section 4.3.3 on Endpoints. Sections 4.3.4 to 4.3.7 focus on the selection and performance of tests with organisms representative of aquatic and terrestrial ecosystems. These include microorganisms (section 4.3.6), plants (section 4.3.5), invertebrates (section 4.3.4) and vertebrate test organisms (e.g. fish: section 4.3.4 on ecotoxicity tests, and birds: section 4.3.7). Testing of vertebrates, including fish (section 4.3.4) and birds (section 4.3.7), is subject to strict regulations aimed at reducing the use of test animals. Data on the potential hazard of chemicals to human health therefore preferably have to be obtained in other ways, for instance by using in vitro test methods (section 4.3.8), data from post-registration monitoring of exposed humans (section 4.3.9 on Human toxicity testing), or epidemiological analysis of exposed humans (section 4.3.10).

Inclusion of novel endpoints in toxicity testing

Traditionally, toxicity tests focus on whole organism endpoints, with survival, growth and reproduction being the most frequently measured parameters (section 4.3.3). In vertebrate toxicity testing, other endpoints addressing effects at the level of organs or tissues may also be used (section 4.3.9 on human toxicity testing). Behavioural endpoints (e.g. avoidance behavior) and biochemical endpoints, like enzyme activity, are also regularly included in toxicity testing with vertebrates and invertebrates (sections 4.3.3, 4.3.4, 4.3.7, 4.3.9).

With the rise of molecular biology, novel techniques have become available that may provide additional information on the effects of chemicals. Molecular tools may, for instance, be applied in molecular epidemiology (section 4.3.11) to find causal relationships between health effects and the exposure to chemicals. Toxicity testing may also use gene expression responses (transcriptomics; section 4.3.12) or changes in metabolism (metabolomics; section 4.3.13) in relation to chemical exposures to help unravel the mechanism(s) of action of chemicals. A major challenge remains to explain whole organism effects from such molecular responses.

Standardization of tests

The standardization of tests is organized by international bodies like the Organization for Economic Co-operation and Development (OECD), the International Organization for Standardization (ISO), and ASTM International (formerly known as the American Society for Testing and Materials). Standardization aims at reducing variation in test outcomes by carefully describing the methods for culturing and handling the test organisms, the procedures for performing the test, the properties and composition of test media, the exposure conditions and the analysis of the data. Standardized test guidelines are usually based on extensive testing of a method by different laboratories in a so-called round-robin test.

Regulatory bodies generally require that toxicity tests supporting the registration of new chemicals are performed according to internationally standardized test guidelines. In Europe, for instance, all toxicity tests submitted within the framework of REACH have to be performed according to the OECD guidelines for the testing of chemicals (see section on Regulation of chemicals).

Quality control of toxicity tests

Since toxicity tests are performed with living organisms, (biological) variation in outcomes is inevitable. Coping with this variation requires sufficient replication, careful test designs and a good choice of endpoints (section 4.3.3) to enable proper estimates of relevant toxicity data.

In order to control the quality of the outcome of toxicity tests, several criteria have been developed, which mainly apply to the performance of the test organisms in the non-exposed controls. These criteria may, for example, require a minimum percentage survival of control organisms, a minimum growth rate or number of offspring produced by the controls, and limited variation (e.g. <30%) in the replicate control growth or reproduction data (sections 4.3.4, 4.3.5, 4.3.6, 4.3.7). When a test does not meet these criteria, its outcome is open to doubt: poor control survival, for instance, makes it hard to draw sound conclusions on the effect of the test chemical on this endpoint. As a consequence, tests that do not meet these validity criteria may not be accepted by other scientists and by regulatory authorities.
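As an illustration, such validity criteria could be checked along the following lines. This is a minimal sketch only: the function name, the 80% survival threshold and the reading of "variation" as the coefficient of variation are assumptions made for the example, and the actual criteria differ between test guidelines.

```python
from statistics import mean, stdev

def check_control_validity(survival_fractions, reproduction_counts,
                           min_survival=0.8, max_cv=0.30):
    """Check two illustrative validity criteria on control replicates.

    survival_fractions: fraction of surviving organisms per control replicate
    reproduction_counts: offspring (or growth) measured per control replicate
    min_survival, max_cv: hypothetical thresholds; real values are
                          guideline-specific
    """
    mean_survival = mean(survival_fractions)
    # Coefficient of variation = sample standard deviation / mean
    cv = stdev(reproduction_counts) / mean(reproduction_counts)
    return {
        "mean_survival": mean_survival,
        "cv": cv,
        "survival_ok": mean_survival >= min_survival,
        "variation_ok": cv <= max_cv,
    }

# Hypothetical control data from four replicates
result = check_control_validity([1.0, 0.9, 1.0, 0.9], [52, 47, 60, 55])
print(result)
```

A test would be considered valid in this sketch only if both flags are true.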

In case the test chemical is added to the test medium using a solvent, toxicity tests should also include a solvent control, in addition to a regular non-exposed control (see section 4.3.4 on the selection of test organisms for ecotoxicity testing). In case the response in the solvent control differs significantly from that in the negative control, the solvent control will be used as the control for analyzing the effects of the test chemical. The negative control will then only be used to check if the validity criteria have been met and to monitor the condition of the test organisms. In case the responses in the negative control and the solvent control do not differ significantly, both controls can be pooled for the data analysis.
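The decision rule described above can be sketched as follows. The function name and the significance level of 0.05 are assumptions for the example; the p-value is taken as given, because the choice of statistical test for comparing the two controls is not specified here.

```python
def select_reference_control(negative, solvent, p_value, alpha=0.05):
    """Pick the control replicates to use as reference in the data analysis.

    negative, solvent: replicate responses in the negative and solvent control
    p_value: result of a statistical comparison of the two controls,
             computed elsewhere (hypothetical input)
    alpha: significance level (an assumption, not prescribed by the text)
    """
    if p_value < alpha:
        # Controls differ significantly: the solvent control is the reference
        return list(solvent)
    # No significant difference: both controls are pooled
    return list(negative) + list(solvent)

# Hypothetical reproduction data (juveniles per replicate)
reference = select_reference_control([50, 54, 49], [51, 47, 53], p_value=0.62)
print(reference)
```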

Most test guidelines also require frequent testing of a positive control, a chemical with known toxicity, to check if the long-term culturing of the test organisms does not lead to changes in their sensitivity.

What are the main endpoints in toxicity testing?

Which are the main groups of organisms used in toxicity testing?

Why is standardization of methods for toxicity testing required?

Which elements are included in the quality control of toxicity tests?

4.3.1. Bioaccumulation testing

Author: Kees van Gestel

Reviewers: Joop Hermens, Michiel Kraak, Susana Loureiro

Learning objectives:

You should be able to

- describe methods for determining the bioaccumulation of chemicals in terrestrial and aquatic organisms

- describe a test design suitable for assessing the bioaccumulation kinetics of chemicals in organisms

- mention the pros and cons of static and dynamic bioaccumulation tests

Keywords: bioconcentration, bioaccumulation, uptake and elimination kinetics, test methods, soil, water

Bioaccumulation is defined as the uptake of chemicals in organisms from the environment. The degree of bioaccumulation is usually indicated by the bioconcentration factor (BCF) in case the exposure is via water, or the biota-to-soil/sediment accumulation factor (BSAF) for exposure in soil or sediment (see section on Bioaccumulation).

Because of the potential risk for food-chain transfer, experimental determination of the bioaccumulation potential of chemicals is usually required in case of a high lipophilicity (log Kow > 3), unless the chemical has a very low persistency. For very persistent chemicals, experimental determination of bioaccumulation potential may already be triggered at log Kow > 2. The experimental determination of BCF and BSAF values makes use of static or dynamic exposure systems.

In static tests, the medium is dosed once with the test chemical, and organisms are exposed for a certain period of time after which both the organisms and the test medium are analyzed for the test chemical. The BCF or BSAF are calculated from the measured concentrations. There are a few concerns with this way of bioaccumulation testing.

First, exposure concentrations may decrease during the test, e.g. due to (bio)degradation, volatilization, sorption to the walls of the test container, or uptake of the test compound by the test organisms. As a consequence, the concentration in the test medium measured at the start of the test may not be indicative of the actual exposure during the test. To take this into account, exposure concentrations can be measured at the start and the end of the test and also at some intermediate time points. Body concentrations in the test organisms may then be related to time-weighted-average (TWA) exposure concentrations. Alternatively, to overcome the problem of decreasing concentrations in aquatic test systems, continuous flow systems or passive dosing techniques can be applied. Such methods, however, are not applicable to soil or sediment tests, where repeated transfer of organisms to freshly spiked medium is the only way to guarantee more or less constant exposure concentrations in case of rapidly degrading compounds. To avoid that the uptake of the test chemical in test organisms leads to decreasing exposure concentrations, the amount of biomass per volume or mass of test medium should be sufficiently low.
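A time-weighted-average exposure concentration can, for example, be computed from the measured concentrations as sketched below. The sketch assumes linear interpolation between sampling times (trapezoidal rule); for compounds that degrade following first-order kinetics, a different interpolation may be more appropriate. The function name and data are hypothetical.

```python
def time_weighted_average(times, concentrations):
    """Time-weighted average (TWA) concentration by the trapezoidal rule.

    times: sampling times in strictly increasing order (e.g. days)
    concentrations: exposure concentrations measured at those times
    """
    if len(times) != len(concentrations) or len(times) < 2:
        raise ValueError("need at least two matching time/concentration pairs")
    area = 0.0
    for i in range(1, len(times)):
        dt = times[i] - times[i - 1]
        if dt <= 0:
            raise ValueError("times must be strictly increasing")
        # Area under the concentration-time curve for this interval
        area += 0.5 * (concentrations[i] + concentrations[i - 1]) * dt
    return area / (times[-1] - times[0])

# Hypothetical measurements (mg/L) at day 0, 2, 7 and 14
twa = time_weighted_average([0, 2, 7, 14], [10.0, 8.0, 5.0, 3.0])
print(round(twa, 2))
```

In this example the TWA is well below the initial concentration, which illustrates why relating body concentrations to the starting concentration alone may misrepresent the actual exposure.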

Second, it is uncertain whether steady state or equilibrium is reached at the end of the exposure period. If this is not the case, the resulting BSAF or BCF values may underestimate the bioaccumulation potential of the chemical. To tackle this problem, a dynamic test may be run to determine the uptake and elimination rate constants, from which BSAF or BCF values can be derived (see below).

Such uncertainties also apply to BCF and BSAF values obtained by analyzing organisms collected from the field and comparing body concentrations with exposure levels in the environment. On the one hand, data from field-exposed organisms carry a large uncertainty, as it remains unclear whether equilibrium was reached; on the other hand, they do reflect exposure over time under fluctuating but realistic exposure conditions.

Dynamic tests, also referred to as uptake/elimination or toxicokinetic tests, may overcome some, but not all, of the disadvantages of static tests. In dynamic tests, organisms are exposed for a certain period of time in spiked medium to assess the uptake of the chemical, after which they are transferred to clean medium for determining the elimination of the chemical. During both the uptake and the elimination phase, at different points in time, organisms are sampled and analyzed for the test chemical. The medium is also sampled frequently to check for a possible decline of the exposure concentration during the uptake phase. In dynamic tests too, keeping exposure concentrations as constant as possible is a major challenge, requiring frequent renewal (see above).

Toxicokinetic tests should also include controls, consisting of test organisms incubated in clean medium and transferred to clean medium at the same time as the organisms from the treated medium. Such controls may help identify possible irregularities in the test, such as poor health of the test organisms or unexpected (cross-)contamination occurring during the test.

The concentrations of the chemical measured in the test organisms are plotted against the exposure time, and a first-order one-compartment model is fitted to the data to estimate the uptake and elimination rate constants. The (dynamic) BSAF or BCF value is then determined as the ratio of the uptake and elimination rate constants (see section on Bioconcentration and kinetic models).
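The model and the derivation of a kinetic BCF or BSAF can be sketched as below. This is a simplified illustration, not the fitting procedure of any guideline: it assumes a constant exposure concentration, estimates the elimination rate constant from a log-linear regression on the elimination phase, and then derives the uptake rate constant from the body concentration at the moment of transfer. In practice both constants are usually estimated simultaneously by nonlinear regression. All names and parameter values are hypothetical.

```python
import math

def body_concentration(t, k1, k2, c_exp, t_c):
    """First-order one-compartment model with transfer to clean medium at t_c.

    Uptake phase (t <= t_c):  C(t) = (k1 / k2) * c_exp * (1 - exp(-k2 * t))
    Elimination (t > t_c):    C(t) = C(t_c) * exp(-k2 * (t - t_c))
    """
    if t <= t_c:
        return (k1 / k2) * c_exp * (1.0 - math.exp(-k2 * t))
    c_at_transfer = (k1 / k2) * c_exp * (1.0 - math.exp(-k2 * t_c))
    return c_at_transfer * math.exp(-k2 * (t - t_c))

def estimate_rate_constants(times, body_conc, c_exp, t_c):
    """Two-step estimate of k1 and k2 (t_c must be one of the sampling times)."""
    # Step 1: k2 = minus the least-squares slope of ln(C) versus time, t >= t_c
    elim = [(t, math.log(c)) for t, c in zip(times, body_conc) if t >= t_c]
    n = len(elim)
    mean_t = sum(t for t, _ in elim) / n
    mean_y = sum(y for _, y in elim) / n
    slope = (sum((t - mean_t) * (y - mean_y) for t, y in elim)
             / sum((t - mean_t) ** 2 for t, _ in elim))
    k2 = -slope
    # Step 2: k1 from the body concentration measured at the time of transfer
    c_tc = body_conc[times.index(t_c)]
    k1 = c_tc * k2 / (c_exp * (1.0 - math.exp(-k2 * t_c)))
    return k1, k2

# Synthetic, noise-free data generated from hypothetical parameter values
true_k1, true_k2, c_exp, t_c = 0.5, 0.1, 10.0, 21
times = [1, 3, 7, 14, 21, 22, 24, 28, 35, 42]
body = [body_concentration(t, true_k1, true_k2, c_exp, t_c) for t in times]
k1, k2 = estimate_rate_constants(times, body, c_exp, t_c)
kinetic_factor = k1 / k2  # dynamic BCF or BSAF
print(f"k1 = {k1:.3f}, k2 = {k2:.3f}, k1/k2 = {kinetic_factor:.2f}")
```

Because the ratio k1/k2 does not depend on whether steady state was reached during the uptake phase, it avoids the second concern raised for static tests.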

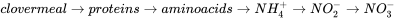

In a toxicokinetics test, usually replicate samples are taken at each point in time, both during the uptake and the elimination phase. The frequency of sampling may be higher at the beginning than at the end of both phases: a typical sampling scheme is shown in Figure 1. Since the analysis of toxicokinetics data using the one-compartment model is regression based, it is generally preferred to have more points in time rather than having many replicates per sampling time. From that perspective, often no more than 3-4 replicates are used per sampling time, and 5-6 sampling times for the uptake and elimination phases each.

Preferably, replicates are independent, i.e. each replicate is destructively sampled at a specific sampling point. Especially in aquatic ecotoxicology, mass exposures are sometimes used, with all test organisms kept in one or a few replicate test containers. In this case, at each sampling time some replicate organisms are taken from the test container(s), and at the end of the uptake phase all organisms are transferred to (a) container(s) with clean medium.

Figure 2 shows the result of a test on the uptake and elimination kinetics of molybdenum in the earthworm Eisenia andrei. From the ratio of the uptake rate constant (k1) and elimination rate constant (k2) a BSAF of approx. 1.0 could be calculated, suggesting a low bioaccumulation potential of Mo in earthworms in the soil tested.

Another way of assessing the bioaccumulation potential of chemicals in organisms includes the use of radiolabeled chemicals, which may facilitate easy detection of the test chemical. The use of radiolabeled chemicals may, however, overestimate bioaccumulation potential when no distinction is made between the parent compound and potential metabolites. In case of metals, stable isotopes may also offer an opportunity to assess bioaccumulation potential. Such an approach was applied to distinguish the role of dissolved (ionic) Zn in the bioaccumulation of Zn in earthworms from that of ZnO nanoparticles. Earthworms were exposed to soils spiked with mixtures of ⁶⁴ZnCl₂ and ⁶⁸ZnO nanoparticles. The results showed that dissolution of the nanoparticles was fast and that the earthworms mainly accumulated Zn present in ionic form in the soil solution (Laycock et al., 2017).

Standard test guidelines for assessing the bioaccumulation (kinetics) of chemicals have been published by the Organization for Economic Cooperation and Development (OECD) for sediment-dwelling oligochaetes (OECD, 2008), for earthworms/enchytraeids in soil (OECD, 2010) and for fish (OECD, 2012).

References

Diez-Ortiz, M., Giska, I., Groot, M., Borgman, E.M., Van Gestel, C.A.M. (2010). Influence of soil properties on molybdenum uptake and elimination kinetics in the earthworm Eisenia andrei. Chemosphere 80, 1036-1043.

Laycock, A., Romero-Freire, A., Najorka, J., Svendsen, C., Van Gestel, C.A.M., Rehkämper, M. (2017). Novel multi-isotope tracer approach to test ZnO nanoparticle and soluble Zn bioavailability in joint soil exposures. Environmental Science and Technology 51, 12756−12763.

OECD (2008). Guidelines for the testing of chemicals No. 315: Bioaccumulation in Sediment-dwelling Benthic Oligochaetes. Organization for Economic Cooperation and Development, Paris.

OECD (2010). Guidelines for the testing of chemicals No. 317: Bioaccumulation in Terrestrial Oligochaetes. Organization for Economic Cooperation and Development, Paris.

OECD (2012). Guidelines for the testing of chemicals No. 305: Bioaccumulation in Fish: Aqueous and Dietary Exposure. Organization for Economic Cooperation and Development, Paris.

Why may BSAF and BCF values obtained from static tests not reflect the real bioaccumulation potential of chemicals, even if it was possible to keep exposure concentrations constant?

Describe the experimental design of a test for assessing the uptake and elimination kinetics of chemicals in test organisms, in soil or water.

a. What experimental problem may be encountered when determining the bioaccumulation of chemicals in terrestrial or aquatic organisms?

b. And how may this problem be overcome in case of aquatic organisms?

c. Is such a solution also possible for terrestrial organisms?

4.3.2. Concentration-response relationships

Author: Kees van Gestel

Reviewers: Michiel Kraak, Thomas Backhaus

Learning objectives:

You should be able to

- understand the concept of the concentration-response relationship

- define measures of toxicity

- distinguish quantal and continuous data

- mention the reasons for preferring ECx values above NOEC values

Keywords: concentration-related effects, measure of lethal effect, measure of sublethal effect, regression-based analysis

A key paradigm in human and environmental toxicology is that the dose determines the effect. This paradigm goes back to Paracelsus, who stated that any chemical is toxic, but that the dose determines the severity of the effect. In practice, this paradigm is used to quantify the toxicity of chemicals. For that purpose, toxicity tests are performed in which organisms (microbes, plants, invertebrates, vertebrates) or cells are exposed to a range of concentrations of a chemical. Such tests also include incubations in non-treated control medium. The response of the test organisms is determined by monitoring selected endpoints, like survival, growth, reproduction or other parameters (see section on Endpoints). Endpoints can increase (e.g. mortality) or decrease with increasing exposure concentration (e.g. survival, reproduction, growth). The response of the endpoints is plotted against the exposure concentration, and so-called concentration-response curves (Figure 1) are fitted, from which measures of the toxicity of the chemical can be calculated.

The unit of exposure, the concentration or dose, may be expressed differently depending on the exposed subject. Dose is expressed as mg/kg body weight in human toxicology and following single (oral or dermal) exposure events in mammals or birds. For other orally or dermally exposed (invertebrate) organisms, like honey bees, the dose may be expressed per animal, e.g. µg/bee. Environmental exposures are generally expressed as the concentration in mg/kg food, mg/kg soil, mg/L surface, drinking or ground water, or mg/m³ air.

Ultimately, it is the concentration (number of molecules of the chemical) at the target site that determines the effect. Consequently, expressing exposure concentrations on a molar basis (mol/L, mol/kg) is preferred, but less frequently applied.
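The conversion from a mass-based to a molar concentration only requires the molar mass of the chemical. A minimal sketch, with a hypothetical function name and approximate molar masses for copper and cadmium:

```python
def mg_per_l_to_mol_per_l(conc_mg_per_l, molar_mass_g_per_mol):
    """Convert a mass concentration (mg/L) to a molar concentration (mol/L)."""
    grams_per_l = conc_mg_per_l / 1000.0
    return grams_per_l / molar_mass_g_per_mol

# The same mass concentration of two metals (approximate molar masses)
cu = mg_per_l_to_mol_per_l(1.0, 63.55)   # copper, approx. 63.55 g/mol
cd = mg_per_l_to_mol_per_l(1.0, 112.41)  # cadmium, approx. 112.41 g/mol
print(f"Cu: {cu:.3e} mol/L, Cd: {cd:.3e} mol/L")
```

At the same mass concentration, the solution of the lighter element contains more molecules (here atoms) per litre, which is why comparisons of toxicity between chemicals can change when moving from a mass to a molar basis.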

At low concentrations or doses, the endpoint measured is not affected by exposure. At increasing concentration, the endpoint shows a concentration-related decrease or increase. From this decrease or increase, different measures of toxicity can be calculated:

ECx/EDx: the "effective concentration" resp. "effective dose"; "x" denotes the percentage effect relative to an untreated control. This should always be followed by giving the selected endpoint.

LCx/LDx: same, but specified for a specific endpoint: lethality.

EC50/ED50: the median effect concentration or dose, with "x" set to 50%. This is the most common estimate used in environmental toxicology. This should always be followed by giving the selected endpoint.

LC50/LD50: same, but specified for a specific endpoint: lethality.

The terms LCx and LDx refer to the fraction of animals responding (dying), while the ECx and EDx indicate the degree of reduction of the measured parameter. The ECx/EDx describe the overall average performance of the test organisms in terms of the parameter measured (e.g., growth, reproduction). The meaning of an LCx/LDx seems obvious: it refers to lethality of the test chemical. The use of ECx/EDx, however, always requires explicit mentioning of the endpoint it concerns.

Concentration-response models usually distinguish quantal and continuous data. Quantal data refer to constrained ("yes/no") responses and include, for instance, survival data, but may also be applicable to avoidance responses. Continuous data refer to parameters like growth, reproduction (number of juveniles or eggs produced) or biochemical and physiological measurements. A crucial difference between quantal and continuous responses is that quantal responses are population-level responses, while continuous responses can also be observed on the level of individuals. An organism cannot be half-dead, but it can certainly grow at only half the control rate.

Concentration-response models are usually sigmoidal on a log-scale and are characterized by four parameters: minimum, maximum, slope and position. The minimum response is often set to the control level or to zero. The maximum response is often set to 100%, in relation to the control or the biologically plausible maximum (e.g. 100% survival). The slope identifies the steepness of the curve, and determines the distance between the EC50 and EC10. The position parameter indicates where on the x-axis the curve is placed. The position may equal the EC50, in which case it is named the turning point. This holds for only a small fraction of models, however, and not for models that are not symmetrical around the EC50.
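One frequently used example of such a curve is the four-parameter log-logistic model, sketched below for an endpoint that decreases with increasing concentration. It is only one of many possible models; it is symmetrical on a log scale, so its position parameter equals the EC50. The function names and parameter values are chosen for the illustration.

```python
def log_logistic(conc, minimum, maximum, slope, ec50):
    """Four-parameter log-logistic curve for a decreasing endpoint.

    response = minimum + (maximum - minimum) / (1 + (conc / ec50) ** slope)
    """
    if conc < 0:
        raise ValueError("concentration must be non-negative")
    return minimum + (maximum - minimum) / (1.0 + (conc / ec50) ** slope)

def ecx(x, slope, ec50):
    """Concentration causing x% reduction between maximum and minimum."""
    if not 0 < x < 100:
        raise ValueError("x must be between 0 and 100")
    return ec50 * (x / (100.0 - x)) ** (1.0 / slope)

# Hypothetical curve: response as % of control, EC50 = 10 mg/kg
ec10_shallow = ecx(10, slope=2.0, ec50=10.0)
ec10_steep = ecx(10, slope=4.0, ec50=10.0)
print(f"EC10 at slope 2: {ec10_shallow:.2f}, at slope 4: {ec10_steep:.2f}")
```

The two EC10 values show the role of the slope: the steeper the curve, the closer the EC10 lies to the EC50.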

In environmental toxicology, the parameter values are usually presented with 95% confidence intervals indicating the margins of uncertainty. Statistical software packages are used to calculate these corresponding 95% confidence intervals.

Regression-based test designs require several test concentrations, and the results are dependent on the used statistical model, especially in the low-effect region. Sometimes it is simply impossible to use a regression-based design because the endpoint does not cover a sufficiently high effect range (>50% effect is typically needed for an accurate fit).

In case of quantal responses, especially survival, the slope of the concentration-response curve is an indication of the sensitivity distribution of the individuals within the population of test organisms. For a very homogeneous population of laboratory test animals having the same age and body size, a steeper concentration-response curve is expected than when using field-collected animals representing a wider range of ages and body sizes (Figure 2).

In addition to ECx values, toxicity tests may also be used to derive other measures of toxicity:

NOEC/NOEL: No-Observed Effect Concentration or Effect Level

LOEC/LOEL: Lowest Observed Effect Concentration or Effect Level

NOAEL: No-Observed Adverse Effect Level. Same as NOEL, but focusing on effects that are negative (adverse) compared to the control.

LOAEL: Lowest Observed Adverse Effect Level. Same as LOEL, but focusing on effects that are negative (adverse) compared to the control.

Where the ECx are derived by curve fitting, the NOEC and LOEC are derived by a statistical test comparing the response at each test concentration with that of the controls. The NOEC is defined as the highest test concentration where the response does not significantly differ from the control. The LOEC is the next higher concentration, so the lowest concentration tested at which the response significantly differs from the control. Figure 3 shows NOEC and LOEC values derived from a hypothetical test. Usually an Analysis of Variance (ANOVA) is used combined with a post-hoc test, e.g. Tukey, Bonferroni or Dunnett, to determine the NOEC and LOEC.
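Once each test concentration has been compared with the control, reading off the NOEC and LOEC is straightforward, as the sketch below shows. The p-values are taken as given (they would come from the post-hoc test), and the function name, data and significance level of 0.05 are assumptions for the example. The sketch also ignores non-monotonic patterns, which require expert judgment.

```python
def noec_loec(p_values, alpha=0.05):
    """Derive NOEC and LOEC from per-concentration p-values.

    p_values: dict mapping each test concentration to the p-value of its
              comparison with the control (computed elsewhere)
    Returns (noec, loec); either may be None if it cannot be derived.
    """
    concentrations = sorted(p_values)
    significant = [c for c in concentrations if p_values[c] < alpha]
    if not significant:
        # No significant effect: the NOEC is at least the highest concentration
        return concentrations[-1], None
    loec = significant[0]
    below = [c for c in concentrations if c < loec]
    # If the lowest test concentration is the LOEC, no NOEC can be derived
    noec = below[-1] if below else None
    return noec, loec

# Hypothetical p-values for five test concentrations (mg/kg)
noec, loec = noec_loec({1: 0.80, 3.2: 0.45, 10: 0.09, 32: 0.01, 100: 0.001})
print(noec, loec)
```

The result is always one of the tested concentrations, so it depends directly on the concentrations chosen in the test design.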

Most available toxicity data are NOECs, hence they are the most common values found in databases and therefore used for regulatory purposes. From a scientific point of view, however, there are quite some disadvantages related to the use of NOECs:

- Obtained by a statistical test (hypothesis testing) rather than by regression analysis;

- Equal to one of the test concentrations, so not using all data from the toxicity test;

- Sensitive to the number of replicates used per exposure concentration and control;

- Sensitive to variation in response, i.e. to differences between replicates;

- Depends on the statistical test chosen, and on the variance (σ²);

- Does not have confidence intervals;

- Makes it hard to compare toxicity data between laboratories and between species.

The NOEC may, due to its sensitivity to variation and test design, sometimes be equal to or even higher than the EC50.

Because of the disadvantages of the NOEC, it is recommended to use measures of toxicity derived by fitting a concentration-response curve to the data obtained from a toxicity test. As an alternative to the NOEC, usually an EC10 or EC20 is used, which has the advantages that it is obtained using all data from the test and that it has a 95% confidence interval indicating its reliability. Having a 95% confidence interval also allows a statistical comparison of ECx values, which is not possible for NOEC values.

Which four parameters describe a dose-response curve?

What would be the preferred unit of measures of toxicity (e.g. EC20 or EC50) describing the effect of a chemical on the survival of soil invertebrates exposed in a standardized test soil?

Why would you expect that using an age-synchronized laboratory population of test organisms results in a much steeper concentration-response curve for effects on survival of a chemical than a field-collected population of non-synchronized individuals?

Why are EC10 values preferred over NOECs when using the outcomes of toxicity tests for the risk assessment of chemicals?

4.3.3. Endpoints

Author: Michiel Kraak

Reviewers: Kees van Gestel, Carlos Barata

Learning objectives:

You should be able to

- list the available whole organism endpoints in toxicity tests.

- motivate the importance of sublethal endpoints in acute and chronic toxicity tests.

- describe how sublethal endpoints in acute and chronic toxicity tests are measured.

Keywords: Mortality, survival, sublethal endpoints, growth, reproduction, behaviour, photosynthesis

Introduction

Most toxicity tests performed are short-term high-dose experiments, acute tests in which mortality is often the only endpoint. Mortality, however, is a crude parameter in response to relatively high and therefore often environmentally irrelevant toxicant concentrations. At much lower and therefore environmentally more relevant toxicant concentrations, organisms may suffer from a wide variety of sublethal effects. Hence, toxicity tests gain ecological realism if sublethal endpoints are addressed in addition to mortality.

Mortality

Mortality can be determined in both acute and chronic toxicity tests. In acute tests, mortality is often the only feasible endpoint, although some acute tests take long enough to also measure sublethal endpoints, especially growth. Generally though, this is restricted to chronic toxicity tests, in which a wide variety of sublethal endpoints can be assessed in addition to mortality (Table 1).

Mortality at the end of the exposure period is assessed by simply counting the number of surviving individuals, but it can also be expressed either as a percentage of the initial number of individuals or as a percentage of the corresponding control. The increase in mortality with increasing toxicant concentration can be plotted as a dose-response relationship from which the LC50 can be derived (see section on Concentration-response relationship). If assessing mortality is non-destructive, for instance if this can be done by visual inspection, it can be scored at different time intervals during a toxicity test. Although repeated observations may take some effort, they generally generate valuable insights into the course of the intoxication process over time.
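The two ways of expressing survival data can be sketched as follows. The function names and the counts are hypothetical, and the control-based expression assumes that the control and each treatment started with the same number of individuals.

```python
def mortality_percent(initial: int, survivors: int) -> float:
    """Mortality as a percentage of the initial number of individuals."""
    if initial <= 0 or not 0 <= survivors <= initial:
        raise ValueError("need initial > 0 and 0 <= survivors <= initial")
    return 100.0 * (initial - survivors) / initial


def survival_percent_of_control(survivors: int, control_survivors: int) -> float:
    """Survival as a percentage of the survivors in the corresponding control.

    Assumes equal initial numbers in the control and the treatment.
    """
    if control_survivors <= 0 or survivors < 0:
        raise ValueError("need control_survivors > 0 and survivors >= 0")
    return 100.0 * survivors / control_survivors


# Hypothetical test: 20 individuals per treatment, 19 survivors in the control
initial = 20
control_survivors = 19
survivors_by_concentration = {1.0: 18, 3.2: 12, 10.0: 4}  # mg/L -> survivors

for conc, alive in survivors_by_concentration.items():
    print(
        f"{conc:>5} mg/L: "
        f"mortality {mortality_percent(initial, alive):.1f} % of initial, "
        f"survival {survival_percent_of_control(alive, control_survivors):.1f} % of control"
    )
```

Expressing survival relative to the control corrects for background mortality that is unrelated to the toxicant.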

Sublethal endpoints in acute toxicity tests

In acute toxicity tests it is difficult to assess other endpoints than mortality, since effects of toxicants on sublethal endpoints like growth and reproduction need much longer exposure times to become expressed (see section on Chronic toxicity). Incorporating sublethal endpoints in acute toxicity tests thus requires rapid responses to toxicant exposure. Photosynthesis of plants and behaviour of animals are elegant, sensitive and rapidly responding endpoints that can be incorporated into acute toxicity tests (Table 1).

Behavioural endpoints

Behaviour is an understudied but sensitive and ecologically relevant endpoint in ecotoxicity testing, since subtle changes in animal behaviour may affect trophic interactions and ecosystem functioning. Several studies reported effects on animal behaviour at concentrations orders of magnitude lower than lethal concentrations. Van der Geest et al. (1999) showed that changes in ventilation behaviour of fifth instar larvae of the caddisfly Hydropsyche angustipennis occurred at approximately 150 times lower Cu concentrations than mortality of first instar larvae. Avoidance behaviour of the amphipod Corophium volutator to contaminated sediments was 1,000 times more sensitive than survival (Hellou et al., 2008). Chevalier et al. (2015) tested the effect of twelve compounds covering different modes of action on the swimming behaviour of daphnids and observed that most compounds induced an early and significant swimming speed increase at concentrations near or below the 10% effective concentration (48-h EC10) of the acute immobilization test. Barata et al. (2008) reported that the short-term (24 h) D. magna feeding inhibition assay was on average 50 times more sensitive than acute standardized tests when assessing the toxicity of a mixture of 16 chemicals in different combinations of water types. These and many other examples all show that organisms may exhibit altered behaviour at relatively low and therefore often environmentally relevant toxicant concentrations.

Behavioural responses to toxicant exposure can also be very fast, allowing organisms to avoid further exposure and subsequent bioaccumulation and toxicity. A wide array of such avoidance responses has been incorporated in ecotoxicity testing (Araújo et al., 2016), including the avoidance of contaminated soil by earthworms (Eisenia fetida) (Rastetter & Gerhardt, 2018), feeding inhibition of mussels (Corbicula fluminea) (Castro et al., 2018), and the aversive swimming response to silver nanoparticles by the unicellular green alga Chlamydomonas reinhardtii (Mitzel et al., 2017) and by daphnids to twelve compounds covering different modes of toxic action (Chevalier et al., 2015).

Photosynthesis

Photosynthesis is a sensitive and well-studied endpoint that can be applied to identify hazardous effects of herbicides on primary producers. In bioassays with plants or algae, photosynthesis is often quantified using pulse amplitude modulation (PAM) fluorometry, a rapid measurement technique suitable for quick screening purposes. Algal photosynthesis is preferably quantified in light adapted cells as effective photosystem II (PSII) efficiency (ΦPSII) (Ralph et al., 2007; Sjollema et al., 2014). This endpoint responds most sensitively to herbicide activity, as the most commonly applied herbicides either directly or indirectly affect PSII (see section on Herbicide toxicity).

Sublethal endpoints in chronic toxicity tests

Besides mortality, growth and reproduction are the most commonly assessed endpoints in ecotoxicity tests (Table 1). Growth can be measured in two ways, as an increase in length and as an increase in weight. Often only the length or weight at the end of the exposure period is determined. This, however, includes both the growth before and during exposure. It is therefore more informative to measure length or weight at the beginning as well as at the end of the exposure, and then subtract the individual or average initial length or weight from the final individual length or weight. Growth during the exposure period may subsequently be expressed as a percentage of the initial length or weight. Ideally the initial length or weight is measured on the same individuals that will be exposed. When organisms have to be sacrificed to measure the initial length or weight, which is especially the case for dry weight, this is not feasible. In that case a subsample of the individuals is set apart at the beginning of the test.
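The growth calculation described above can be sketched as follows, both for individuals measured before and after exposure and for the case where the initial value comes from a sacrificed subsample. Function names and numbers are hypothetical and serve only as an example.

```python
from statistics import mean


def growth_percent(initial: float, final: float) -> float:
    """Growth during exposure as a percentage of the initial length or weight."""
    if initial <= 0:
        raise ValueError("initial length or weight must be positive")
    return 100.0 * (final - initial) / initial


def growth_percent_from_subsample(initial_subsample, final_values):
    """Growth per individual when the initial value is the subsample average.

    Used when the initial measurement is destructive (e.g. dry weight), so
    that the exposed individuals themselves cannot be measured at the start.
    """
    mean_initial = mean(initial_subsample)
    return [growth_percent(mean_initial, final) for final in final_values]


# Same individual measured twice: 2.0 mm at the start, 3.0 mm at the end
print(growth_percent(2.0, 3.0))

# Dry weight (mg): subsample sacrificed at the start, exposed animals at the end
start_subsample = [0.9, 1.0, 1.1]
end_weights = [1.5, 1.8, 2.0]
print(growth_percent_from_subsample(start_subsample, end_weights))
```

Subtracting the initial value removes the growth that took place before the exposure started, so that only growth during exposure is compared between treatments.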

Reproduction is a sensitive and ecologically relevant endpoint in chronic toxicity tests. It is an integrated parameter, incorporating many different aspects of the process, which can be assessed one by one. The first reproduction parameter is the day of first reproduction. This is an ecologically very relevant parameter, as delayed reproduction obviously has strong implications for population growth. The next reproduction parameter is the amount of offspring. In this case the number of eggs, seeds, neonates or juveniles can be counted. For organisms that produce egg ropes or egg masses, both the number of egg masses and the number of eggs per mass can be determined. Lastly, the quality of the offspring can be quantified. This can be achieved by determining their physiological status (e.g. fat content), their size, their survival and finally their chance of reaching adulthood.

Table 1. Whole organism endpoints often used in toxicity tests. Quantal refers to a yes/no endpoint, while graded refers to a continuous endpoint (see section on Concentration-response relationship).

| Endpoint | Acute/Chronic | Quantal/Graded |
| --- | --- | --- |
| mortality | both | quantal |
| behaviour | acute | graded |
| avoidance | acute | quantal |
| photosynthesis | acute | graded |
| growth (length and weight) | mostly chronic | graded |
| reproduction | chronic | graded |

A wide variety of other, less commonly applied sublethal whole organism endpoints can be assessed upon chronic exposure. The possibilities are endless, with some endpoints being designed for the effect of a single compound only, and others being species-specific, sometimes described for only one organism. Sub-organismal endpoints are described in a separate chapter (see section on Molecular endpoints in toxicity tests).

References

Araujo, C.V.M., Moreira-Santos, M., Ribeiro, R. (2016). Active and passive spatial avoidance by aquatic organisms from environmental stressors: A complementary perspective and a critical review. Environment International 92-93, 405-415.

Barata, C., Alanon, P., Gutierrez-Alonso, S., Riva, M.C., Fernandez, C., Tarazona, J.V. (2008). A Daphnia magna feeding bioassay as a cost effective and ecological relevant sublethal toxicity test for environmental risk assessment of toxic effluents. Science of the Total Environment 405(1-3), 78-86.

Castro, B.B., Silva, C., Macario, I.P.E., Oliveira, B., Concalves, F., Pereira, J.L. (2018). Feeding inhibition in Corbicula fluminea (OF Muller, 1774) as an effect criterion to pollutant exposure: Perspectives for ecotoxicity screening and refinement of chemical control. Aquatic Toxicology 196, 25-34.

Chevalier, J., Harscoët, E., Keller, M., Pandard, P., Cachot, J., Grote, M. (2015). Exploration of Daphnia behavioral effect profiles induced by a broad range of toxicants with different modes of action. Environmental Toxicology and Chemistry 34, 1760-1769.

Hellou, J., Cheeseman, K., Desnoyers, E., Johnston, D., Jouvenelle, M.L., Leonard, J., Robertson, S., Walker, P. (2008). A non-lethal chemically based approach to investigate the quality of harbor sediments. Science of the Total Environment 389, 178-187.

Mitzel, M.R., Lin, N., Whalen, J.K., Tufenkji, N. (2017). Chlamydomonas reinhardtii displays aversive swimming response to silver nanoparticles. Environmental Science: Nano 4, 1328-1338.

Ralph, P.J., Smith, R.A., Macinnis-Ng, C.M.O., Seery, C.R. (2007). Use of fluorescence-based ecotoxicological bioassays in monitoring toxicants and pollution in aquatic systems: Review. Toxicological and Environmental Chemistry 89, 589-607.

Rastetter, N., Gerhardt, A. (2018). Continuous monitoring of avoidance behaviour with the earthworm Eisenia fetida. Journal of Soils and Sediments 18, 957-967.

Sjollema, S.B., Van Beusekom, S.A.M., Van der Geest, H.G., Booij, P., De Zwart, D., Vethaak, A.D., Admiraal, W. (2014). Laboratory algal bioassays using PAM fluorometry: Effects of test conditions on the determination of herbicide and field sample toxicity. Environmental Toxicology and Chemistry 33, 1017-1022.

Van der Geest, H.G., Greve, G.D., De Haas, E.M., Scheper, B.B., Kraak, M.H.S., Stuijfzand, S.C., Augustijn, C.H., Admiraal, W. (1999). Survival and behavioural responses of larvae of the caddisfly Hydropsyche angustipennis to copper and diazinon. Environmental Toxicology and Chemistry 18, 1965-1971.

What is the importance of incorporating sublethal endpoints in acute and chronic toxicity tests?

Name one animal-specific and one plant-specific sublethal endpoint that can be incorporated in acute toxicity tests.

Name the two most commonly assessed endpoints in chronic toxicity tests.

4.3.4. Selection of test organisms - Eco animals

Author: Michiel Kraak

Reviewers: Kees van Gestel, Jörg Römbke

Learning objectives:

You should be able to

- name the requirements for suitable laboratory ecotoxicity test organisms.

- list the most commonly used standard test organisms per environmental compartment.

- argue the need for more than one test species and the need for non-standard test organisms.

Key words: Test organism, standardized laboratory ecotoxicity tests, environmental compartment, habitat, different trophic levels

Introduction

Standardized laboratory ecotoxicity tests require constant test conditions, standardized endpoints (see section on Endpoints) and good performance in control treatments. Actually, in reliable, reproducible and easy to perform toxicity tests, the test compound should be the only variable. This sets high demands on the choice of the test organisms.

For a proper risk assessment, it is crucial that test species are representative of the community or ecosystem to be protected. Criteria for selection of organisms to be used in toxicity tests have been summarized by Van Gestel et al. (1997). They include: 1. Practical arguments, including feasibility, cost-effectiveness and rapidity of the test, 2. Acceptability and standardisation of the tests, including the generation of reproducible results, and 3. Ecological significance, including sensitivity, biological validity etc. The most practical requirement is that the test organism should be easy to culture and maintain, but equally important is that the test species should be sensitive towards different stressors. These two main requirements are, however, frequently conflicting. Species that are easy to culture are often less sensitive, simply because they are mostly generalists, while sensitive species are often specialists, making it much harder to culture them. For scientific and societal support of the choice of the test organisms, preferably they should be both ecologically and economically relevant or serve as flagship species, but again, these are opposite requirements. Economically relevant species, like crops and cattle, hardly play any role in natural ecosystems, while ecologically highly relevant species have no obvious economic value. This is reflected by the research efforts on these species, since much more is known about economically relevant species than about ecologically relevant species.

There is no species that is most sensitive to all pollutants. Which species is most sensitive depends on the mode of action and possibly also other properties of the chemical, the exposure route, its availability and the properties of the organism (e.g., presence of specific targets, physiology, etc.). It is therefore important to always test a number of species, with different life traits, functions, and positions in the food web. According to Van Gestel et al. (1997) such a battery of test species should be:

1. Representative of the ecosystem to protect, so including organisms having different life-histories, representing different functional groups, different taxonomic groups and different routes of exposure;

2. Representative of responses relevant for the protection of populations and communities; and

3. Uniform, so all tests in a battery should be applicable to the same test media and the same test conditions, e.g. the same range of pH values.

Representation of environmental compartments

Each environmental compartment, water, air, soil and sediment, requires its specific set of test organisms. The most commonly applied test organisms are daphnids (Daphnia magna) for water, chironomids (Chironomus riparius) for sediments and earthworms (Eisenia fetida) for soil. For air, in the field of inhalation toxicology, humans and rodents are actually the most studied organisms. In ecotoxicology, air testing is mostly restricted to plants, concerning studies on toxic gases. Besides the most commonly applied organisms, there is a long list of other standard test organisms for which test protocols are available (Table 1; OECD site).

Table 1. Non-exhaustive list of standard ecotoxicity test species.

| Environmental compartment(s) | Organism group | Test species |
| --- | --- | --- |
| Water | Plant | Myriophyllum spicatum |
| Water | Plant | Lemna |
| Water | Algae | Species of choice |
| Water | Cyanobacteria | Species of choice |
| Water | Fish | Danio rerio |
| Water | Fish | Oryzias latipes |
| Water | Amphibian | Xenopus laevis |
| Water | Insect | Chironomus riparius |
| Water | Crustacean | Daphnia magna |
| Water | Snail | Lymnaea stagnalis |
| Water | Snail | Potamopyrgus antipodarum |
| Water-sediment | Plant | Myriophyllum spicatum |
| Water-sediment | Insect | Chironomus riparius |
| Water-sediment | Oligochaete worm | Lumbriculus variegatus |
| Sediment | Anaerobic bacteria | Sewage sludge |
| Soil | Plant | Species of choice |
| Soil | Oligochaete worm | Eisenia fetida or E. andrei |
| Soil | Oligochaete worm | Enchytraeus albidus or E. crypticus |
| Soil | Collembolan | Folsomia candida or F. fimetaria |
| Soil | Mite | Hypoaspis (Geolaelaps) aculeifer |
| Soil | Microorganisms | Natural microbial community |
| Dung | Insect | Scathophaga stercoraria |
| Dung | Insect | Musca autumnalis |
| Air-soil | Plant | Species of choice |
| Terrestrial | Bird | Species of choice |
| Terrestrial | Insect | Apis mellifera |
| Terrestrial | Insect | Bombus terrestris/B. impatiens |
| Terrestrial | Insect | Aphidius rhopalosiphi |
| Terrestrial | Mite | Typhlodromus pyri |


Non-standard test organisms

The use of standard test organisms in standard ecotoxicity tests performed according to internationally accepted protocols strongly reduces the uncertainties in ecotoxicity testing. Yet, there are good reasons for deviating from these protocols. The species in Table 1 are listed according to their corresponding environmental compartment, but this listing ignores differences between ecosystems and habitats. Soils may differ extensively in composition, depending on e.g. the sand, clay or silt content, and in properties, e.g. pH and water content, each harbouring different species. Likewise, stagnant and flowing waters have few species in common. This implies that, based on ecological arguments, there may be good reasons to select non-standard test organisms. Effects of compounds in streams can be better estimated with riverine insects than with daphnids, which inhabit stagnant water, while the compost worm Eisenia fetida is not necessarily the most appropriate species for sandy soils. The list of non-standard test organisms is of course endless, but if the methods are well documented in the open literature, there are no limitations to employing these alternative species. They do involve, however, experimental challenges, since non-standard test organisms may be hard to culture and to maintain under laboratory conditions and no protocols are available for the ecotoxicity test. Thus, increasing the ecological relevance of ecotoxicity tests also increases the logistical and experimental constraints (see chapter 6 on Risk assessment).

Increasing the number of test species

The vast majority of toxicity tests is performed with a single test species, resulting in large margins of uncertainty concerning the hazard of compounds. To reduce these uncertainties and to increase ecological relevance, it is advised to incorporate more test species belonging to different trophic levels, for water e.g. algae, daphnids and fish. For deriving environmental quality standards from Species Sensitivity Distributions (see section on SSDs), toxicity data are required for at least eight species belonging to different taxonomic groups. This obviously causes tension between the scientific requirements and the available financial resources.

References

OECD site. https://www.oecd-ilibrary.org/enviro...stems_20745761.

Van Gestel, C.A.M., Léon, C.D., Van Straalen, N.M. (1997). Evaluation of soil fauna ecotoxicity tests regarding their use in risk assessment. In: Tarradellas, J., Bitton, G., Rossel, D. (Eds). Soil Ecotoxicology. CRC Press, Inc., Boca Raton: 291-317.

Name the requirements for suitable laboratory ecotoxicity test organisms.

List the most commonly used standard test organisms per environmental compartment.

Argue 1] the need for more than one test species, and 2] the need for non-standard test organisms.

4.3.5. Selection of test organisms - Eco plants

Author: J. Arie Vonk

Reviewers: Michiel Kraak, Gertie Arts, Sergi Sabater

Learning objectives:

You should be able to

- name the requirements for suitable laboratory ecotoxicity tests for primary producers

- list the most commonly used primary producers and endpoints in standardized ecotoxicity tests

- argue the need for selecting primary producers from different environmental compartments as test organisms for ecotoxicity tests

Key words: Test organism, standardized laboratory ecotoxicity test, primary producers, algae, plants, environmental compartment, photosynthesis, growth

Introduction

Photo-autotrophic primary producers use chlorophyll to convert CO2 and H2O into organic matter through photosynthesis under (sun)light. These primary producers are the basis of the food web and form an essential component of ecosystems. Besides serving as a food source, multicellular photo-autotrophs also form habitat for other primary producers (epiphytes) and many fauna species. Primary producers are a very diverse group, ranging from tiny unicellular pico-plankton up to gigantic trees. For standardized ecotoxicity tests, primary producers are represented by (micro)algae, aquatic macrophytes and terrestrial plants. Since herbicides are the largest group of pesticides used globally to maintain high crop production in agriculture, it is important to assess their impact on primary producers (Wang & Freemark, 1995). However, concerning testing intensity, primary producers are understudied in comparison to animals.

Standardized laboratory ecotoxicity tests with primary producers require good control over test conditions, standardized endpoints (Arts et al., 2008; see the Section on Endpoints) and growth in the controls (i.e. doubling of cell counts, length and/or biomass within the experimental period). Since the metabolism of primary producers is strongly influenced by light conditions, availability of water and inorganic carbon (CO2 and/or HCO3⁻ and CO3²⁻), temperature and dissolved nutrient concentrations, all these conditions should be monitored closely. The general criteria for selection of test organisms are described in the previous chapter (see the section on the Selection of ecotoxicity test organisms). For primary producers, the choice is mainly based on the available test guidelines, test species and the environmental compartment of concern.

Standardized ecotoxicity testing with primary producers

There are a number of ecotoxicity tests with a variety of primary producers standardized by different organizations including the OECD and the USEPA (Table 1). Characteristic for most primary producers is that they are growing in more than one environmental compartment (soil/sediment; water; air). As a result of this, toxicant uptake for these photo-autotrophs might be diverse, depending on the chemical and the compartment where exposure occurs (air, water, sediment/soil).

For both marine and freshwater ecosystems, standardized ecotoxicity tests are available for microalgae (unicellular micro-organisms sometimes forming larger colonies) including the prokaryotic Cyanobacteria (blue-green algae) and the eukaryotic Chlorophyta (green algae) and Bacillariophyceae (diatoms). Macrophytes (macroalgae and aquatic plants) are multicellular organisms, the latter consisting of differentiated tissues, with a number of species included in standardized ecotoxicity tests. While macroalgae grow in the water compartment only, aquatic plants are divided into groups related to their growth form (emergent; free-floating; submerged and sediment-rooted; floating and sediment-rooted) and can extend from the sediment (roots and root-stocks) through the water into the air. Both macroalgae and aquatic plants contain a wide range of taxa and are present in both marine and freshwater ecosystems.

Terrestrial higher plants are very diverse, ranging from small grasses to large trees. Plants included in standardized ecotoxicity tests consist of crop and non-crop species. An important distinction in terrestrial plants is that between dicots and monocots, since the two groups differ in their metabolic pathways and may therefore differ in their sensitivity to contaminants.

Table 1. Open source standard guidelines for testing the effect of compounds on primary producers. All tests are performed in (micro)cosms, except those marked with *.

| Primary producer | Species | Compartment | Test number | Organisation |
| --- | --- | --- | --- | --- |
| Microalgae & cyanobacteria | various species | Freshwater | 201 | OECD |
| | Anabaena flos-aquae | Freshwater | 850.4550 | USEPA |
| | Pseudokirchneriella subcapitata, Skeletonema costatum | Freshwater, Marine water | 850.4500 | USEPA |
| Floating macrophytes | Lemna spp. | Freshwater | 221 | OECD |
| | Lemna spp. | Freshwater | 850.4400 | USEPA |
| Submerged macrophytes | Myriophyllum spicatum | Freshwater | 238 | OECD |
| | Myriophyllum spicatum | Sediment (Freshwater) | 239 | OECD |
| Aquatic plants* | not specified | Freshwater | 850.4450 | USEPA |
| Terrestrial plants | wide variety of species | Air | 227 | OECD |
| | wide variety of species | Air | 850.4150 | USEPA |
| | wide variety of species (crops and non-crops) | Soil & Air | 850.4230 | USEPA |
| | legumes and rhizobium symbiont | Soil & Air | 850.4600 | USEPA |
| | wide variety of species (crops and non-crops) | Soil | 208 | OECD |
| | wide variety of species (crops and non-crops) | Soil | 850.4100 | USEPA |
| | various crop species | Soil & Air | 850.4800 | USEPA |
| Terrestrial plants* | not specified | Terrestrial | 850.4300 | USEPA |


Representation of environmental compartments

Since primary producers can take up many compounds directly by cells and thalli (algae) or by their leaves, stems, roots and rhizomes (plants), different environmental compartments need to be included in ecotoxicity testing depending on the chemical characteristics of the contaminants. Moreover, the chemical characteristics of the compound under consideration determine if and how the compound might enter the primary producers and how it is transported through organisms.

For all aquatic primary producers, exposure through the water phase is relevant. Air exposure occurs in the emergent and floating aquatic plants, while rooting plants and algae with rhizoids might be exposed through sediment. Sediment exposure introduces additional challenges for standardized testing conditions, since changes in redox conditions and organic matter content of sediments can alter the behavior of compounds in this compartment.

All terrestrial plants are exposed through air, soil and water (soil moisture, rain, irrigation). Air exposure and water deposition (rain or spraying) directly expose aboveground parts of terrestrial plants, while belowground plant parts and seeds are exposed through soil and soil moisture. Soil exposure introduces additional challenges for standardized testing conditions, since changes in the water or organic matter content of soils can alter the behavior of compounds in this compartment.

Test endpoints

Bioaccumulation after uptake and translocation to specific cell organelles or plant tissue can result in incorporation of compounds in primary producers. This has been observed for heavy metals, pesticides and other organic chemicals. The accumulated compounds in primary producers can then enter the food chain and be transferred to higher trophic levels (see the section on Biomagnification). Although concentrations in primary producers are indicative of the presence of bioavailable compounds, these concentrations do not necessarily imply adverse effects on these organisms. Bioaccumulation measurements can therefore be best combined with one or more of the following endpoint assessments.

Photosynthesis is the most essential metabolic pathway for primary producers. The mode of action of many herbicides is therefore photosynthesis inhibition, whereby different metabolic steps can be targeted (see the section on Herbicide toxicity). This endpoint is relevant for assessing acute effects on the chlorophyll electron transport using Pulse-Amplitude-Modulation (PAM) fluorometry or as a measure of oxygen or carbon production by primary producers.

Growth represents the accumulation of biomass (microalgae) or mass (multicellular primary producers). Growth inhibition is the most important endpoint in tests with primary producers, since this endpoint integrates the responses to a wide range of metabolic effects into a whole organism or population response of primary producers. However, it takes longer to assess, especially for larger primary producers. Cell counts, increase in size over time for either leaves, roots, or whole organisms, and (bio)mass (fresh weight and dry weight) are the growth endpoints mostly used.
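One common way to turn cell counts into a growth inhibition value is sketched below. It assumes exponential growth and uses the average specific growth rate, μ = (ln N_t − ln N_0) / t; this formula, the function names and the cell densities are assumptions made for illustration and are not taken from the text above.

```python
import math


def specific_growth_rate(n0: float, nt: float, days: float) -> float:
    """Average specific growth rate (per day), assuming exponential growth."""
    if n0 <= 0 or nt <= 0 or days <= 0:
        raise ValueError("cell densities and duration must be positive")
    return (math.log(nt) - math.log(n0)) / days


def growth_inhibition_percent(mu_control: float, mu_treatment: float) -> float:
    """Growth inhibition as a percentage of the control growth rate."""
    if mu_control <= 0:
        raise ValueError("control growth rate must be positive")
    return 100.0 * (mu_control - mu_treatment) / mu_control


# Hypothetical 3-day algal test, cell densities in cells/mL
n_start = 1.0e4
n_control_end = 1.6e5
n_treatment_end = 4.0e4

# Check that the control at least doubled during the test
control_doubled = n_control_end >= 2 * n_start

mu_c = specific_growth_rate(n_start, n_control_end, 3.0)
mu_t = specific_growth_rate(n_start, n_treatment_end, 3.0)
inhibition = growth_inhibition_percent(mu_c, mu_t)

print(f"control doubled: {control_doubled}")
print(f"growth rates: control {mu_c:.3f}/d, treatment {mu_t:.3f}/d")
print(f"growth inhibition: {inhibition:.1f} %")
```

The same two functions can be applied to length or (bio)mass measurements instead of cell counts, as long as growth over the test period is approximately exponential.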

Seedling emergence reflects the germination and early development of seedlings into plants. This endpoint is especially relevant for perennial and biennial plants that depend on seed dispersal and successful germination to maintain healthy populations.

Other endpoints include elongation of different plant parts (e.g. roots), necrosis of leaves, or disturbances in plant-microbial symbiont relationships.

Current limitations and challenges for using primary producers in ecotoxicity tests

For terrestrial vascular plants, many crop and non-crop species can be used in standardized tests; for other environmental compartments (aquatic and marine), however, few species are available in standardized test guidelines. Also, not all environmental compartments are currently covered by standardized tests for primary producers. In general, there are few tests for aquatic sediments and there is a total lack of tests for marine sediments. Finally, not all major groups of primary producers are represented in standardized toxicity tests; for example, mosses and some major groups of algae are absent.

A challenge in improving ecotoxicity tests with plants is to include more sensitive and early-response endpoints. For soil and sediment exposure of plants to contaminants, development of endpoints related to root morphology and root metabolism could provide insights into the early impact of substances on exposed plant parts. Also, the development of ecotoxicogenomic endpoints (e.g. metabolomics) (see the section on Metabolomics) in the field of plant toxicity tests would enable us to determine effects on a wider range of plant metabolic pathways.

References

Arts, G.H.P., Belgers, J.D.M., Hoekzema, C.H., Thissen, J.T.N.M. (2008). Sensitivity of submersed freshwater macrophytes and endpoints in laboratory toxicity tests. Environmental Pollution 153, 199-206.

Wang, W.C., Freemark, K. (1995). The use of plants for environmental monitoring and assessment. Ecotoxicology and Environmental Safety 30, 289-301.

Which conditions need to be controlled carefully in laboratory ecotoxicity tests with primary producers?

List the different groups of primary producers used in standardized tests for each environmental compartment.

Argue why testing of primary producers is relevant in relation to [A] environmental exposure of ecosystems to pesticides and [B] the role of primary producers in ecosystems.

4.3.6. Selection of test organisms - Microorganisms

Author: Patrick van Beelen

Reviewers: Kees van Gestel, Erland Bååth, Maria Niklinska

Learning objectives:

You should be able to

- describe the vital role of microorganisms in ecosystems.

- explain the difference between toxicity tests for protecting biodiversity and for protecting ecosystem services.

- explain why short-term microbial tests can be more sensitive than long-term ones.

Keywords: microorganisms, processes, nitrogen conversion, test methods

The importance of microorganisms

Most organisms are microorganisms, which means they are generally too small to see with the naked eye. Nevertheless, microorganisms affect almost all aspects of our lives. Viruses are the smallest of microorganisms, the prokaryotic bacteria and archaea are bigger (in the micrometer range), and the sizes of eukaryotic microorganisms range from three to a hundred micrometers. The microscopic eukaryotes have larger cells with a nucleus and come in different forms, such as green algae, protists and fungi.

Cyanobacteria and eukaryotic algae perform photosynthesis in the oceans, seas, brackish and freshwater ecosystems. They fix carbon dioxide into biomass and form the basis of the largest aquatic ecosystems. Bacteria and fungi degrade complex organic molecules into carbon dioxide and minerals, which are needed for plant growth.

Plants often live in symbiosis with specialized microorganisms on their roots, which facilitate plant growth by enhancing the uptake of water and nutrients. Invertebrate and vertebrate animals, including humans, have bacteria and other microorganisms in their intestines to facilitate the digestion of food. Cows, for example, cannot digest grass without the microorganisms in their rumen. Also, termites would not be able to digest lignin, a hard to digest wood polymer, without the aid of gut fungi. Leaf cutter ants transport leaves into their nest to feed the fungi on which they depend. Also, humans consume many foodstuffs in which yeasts, fungi or bacteria provide preservation and a pleasant taste. Beer, wine, cheese, yogurt, sauerkraut, vinegar, bread, tempeh, sausage and many other foodstuffs need the right type of microorganisms to be palatable. Having the right type of microorganisms is also vital for human health. Human mother's milk contains oligosaccharides, which are indigestible for the newborn child. These serve as a major food source for the intestinal bacteria in the baby, which reduce the risk of dangerous infections.

This shows that the interactions between microorganisms and higher organisms are often highly specific. Marine viruses are very abundant and can limit algal blooms, promoting a more diverse marine phytoplankton. Pathogenic viruses, bacteria, fungi and protists enhance the biodiversity of plants and animals by the following mechanism: the densest populations are more susceptible to diseases, since the transmission of a disease becomes more frequent. When the most abundant species become less frequent, there is more room for the other species and biodiversity is enhanced. In agriculture, this enhanced biodiversity is unwanted, since the livestock and crops are the most abundant species. That is why disease control becomes more important in high intensity livestock farming and in large monocultures of crops. Microorganisms are at the base of all ecosystems and are vital for human health and the environment.

The microbiological society has a nice video explaining why microbiology matters.

Protection goals

The functioning of natural ecosystems on earth is threatened by many factors, such as habitat loss, habitat fragmentation, global warming, species extinction, overfertilization, acidification and pollution. Natural and man-made chemicals can exhibit toxic effects on the different organisms in natural ecosystems. Toxic chemicals released into the environment may have negative effects on biodiversity or microbial processes. In ecosystems strongly affected by such changes, the abundance of different species could be smaller. The loss of species biodiversity in a specific ecosystem can be used as a measure of the degradation of that ecosystem. Humans benefit from the presence of properly functioning ecosystems. These benefits can be quantified as ecosystem services. Microbial processes contribute heavily to many ecosystem services. Groundwater, for example, is often a suitable source of drinking water because microorganisms have removed pollutants and pathogens from the infiltrating water. See Section on Ecosystem services and protection goals.

Environmental toxicity tests

Most environmental toxicity tests are single species tests. Such tests typically determine the toxicity of a chemical to a specific biological species, for example the bioluminescence of the bacterium Aliivibrio fischeri in the Microtox test, or the growth inhibition test on freshwater algae and cyanobacteria (see Section on Selection of test organisms - Eco plants). These tests are relatively simple: a specific toxic chemical is tested on a specific biological species in an optimal setting. The guidelines for the testing of chemicals of the Organization for Economic Cooperation and Development (OECD), Section 2: Effects on Biotic Systems, give a list of standard tests. Table 1 lists the OECD-standardized tests that use microorganisms.

Table 1. Generally accepted environmental toxicity tests using microorganisms, standardized by the Organization for Economic Cooperation and Development (OECD).

| OECD test No | Title | Medium | Test type |
|---|---|---|---|
| 201 | Freshwater algae and cyanobacteria, growth inhibition test (chapter reference) | Aquatic | Single species |
| | Activated sludge, respiration inhibition test | Sediment | Process |
| 224 (draft guideline) | Determination of the inhibition of the activity of anaerobic bacteria | Sediment | Process |
| | Soil microorganisms: carbon transformation test | Soil | Process |
| 216 | Soil microorganisms: nitrogen transformation test | Soil | Process |

The outcome of these tests can be summarized as EC10 values (see Section on Concentration-response relationships), which can be used in risk assessment (see Sections on Predictive risk assessment approaches and tools and on Diagnostic risk assessment approaches and tools).

Basically, there are three types of tests: single species tests, community tests and tests using microbial processes.
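To make the EC10 concept concrete, the sketch below derives an ECx value from a fitted concentration-response curve. It assumes a two-parameter log-logistic model, which is one common choice and not necessarily the model prescribed by any particular guideline. The function names and the EC50 and slope values are hypothetical and serve only as illustration.

```python
def relative_response(concentration, ec50, slope):
    """Response relative to the control (1.0 = no effect) under an
    assumed two-parameter log-logistic model."""
    return 1.0 / (1.0 + (concentration / ec50) ** slope)


def ecx_from_log_logistic(ec50, slope, effect_fraction):
    """Concentration causing the given effect fraction (0.10 for EC10).

    Obtained by solving 1 - effect_fraction = relative_response(c) for c.
    """
    if not 0.0 < effect_fraction < 1.0:
        raise ValueError("effect_fraction must lie between 0 and 1")
    if ec50 <= 0 or slope <= 0:
        raise ValueError("ec50 and slope must be positive")
    return ec50 * (effect_fraction / (1.0 - effect_fraction)) ** (1.0 / slope)


# Hypothetical fitted parameters, for illustration only.
ec50 = 12.0   # mg/L
slope = 2.0   # dimensionless steepness of the curve

ec10 = ecx_from_log_logistic(ec50, slope, 0.10)
print(f"EC10 = {ec10:.2f} mg/L")
print(f"Response at EC10 = {relative_response(ec10, ec50, slope):.2f}")
```

With these illustrative parameters the EC10 is one third of the EC50. A steeper curve (larger slope) would bring the EC10 closer to the EC50.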

Single species tests

The ecological relevance of a single species test can be a matter of debate. In most cases it is not practical to work with ecologically relevant species, since these can be hard to maintain under laboratory conditions. Each ecosystem will also have its own ecologically relevant species, which would require an extremely large battery of different test species and tests that are difficult to perform in a reproducible way. As a solution to these problems, the test species are assumed to exhibit a sensitivity to toxicants similar to that of the ecologically relevant species. This assumption was confirmed in a number of cases. If the sensitivity distribution of a given toxicant for a number of test species is similar to the sensitivity distribution of the relevant species in a specific ecosystem, one can use a statistical method to estimate a concentration that is safe for most of the species.
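The statistical approach mentioned above can be sketched as follows. This is a minimal illustration, assuming that the toxicity values of the test species follow a log-normal sensitivity distribution and that the concentration protecting 95% of the species (often called the HC5) is taken as the "safe" level. Both are assumptions made for this example, and the EC10 values are hypothetical.

```python
import math
from statistics import NormalDist, mean, stdev


def hazardous_concentration(toxicity_values, fraction_affected=0.05):
    """Concentration at which the given fraction of species is affected,
    assuming the log10-transformed toxicity values are normally distributed."""
    logs = [math.log10(value) for value in toxicity_values]
    distribution = NormalDist(mean(logs), stdev(logs))
    return 10 ** distribution.inv_cdf(fraction_affected)


# Hypothetical EC10 values (mg/L) for six test species, illustration only.
ec10_values = [0.8, 1.5, 3.2, 6.0, 12.0, 25.0]

hc5 = hazardous_concentration(ec10_values)
print(f"HC5 = {hc5:.2f} mg/L")
```

In this example the estimated HC5 lies below the lowest measured EC10, because the fitted distribution extrapolates to species more sensitive than those tested. With so few species the estimate is very uncertain, so in practice it would be accompanied by a confidence interval or an assessment factor.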

Toxicity tests with short incubation times are often disputed, since it takes time for toxicants to accumulate in the test animals. This is not a problem in microbial toxicity tests, since the small size of the test organisms allows the concentrations of the toxicant in the water and in the test organism to equilibrate rapidly. Conversely, long incubation times under conditions that promote growth can lead to the occurrence of resistant mutants, which will decrease the apparent sensitivity of the test organism. This selection and growth of resistant mutants cannot, however, be regarded as a positive thing, since these mutants differ from the parent strain and might also have different ecological properties. In fact, the selection of antibiotic resistant microorganisms in the environment is considered a problem, since the resistance might be transferred to pathogenic (disease-causing) microorganisms, which causes problems for patients treated with antibiotics.

The OECD test no. 201, which uses freshwater algae and cyanobacteria, is a well-known and sensitive single species microbial ecotoxicity test. This test is explained in more detail in the Section on Selection of test organisms - Eco plants.

Community tests

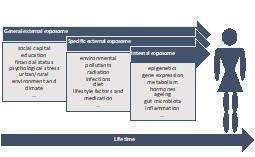

Microorganisms have a very wide range of metabolic diversity. This makes it more difficult to extrapolate from a single species test to all possible microbial species, including fungi, protists, bacteria, archaea and viruses. One solution is to test a multitude of species. When a multitude of species (a whole community) is exposed in a single toxicity experiment, however, it becomes more difficult to attribute the decline or increase of species to toxic effects. The rise and decline of species can also be caused by other factors, including species interactions. The method of pollution-induced community tolerance (PICT) is used to detect toxic effects on communities. Organisms survive in polluted environments only when they can tolerate the toxic chemical concentrations in their habitat. During exposure to pollution, the sensitive species become extinct and tolerant species take over their place and role in the ecosystem (Figure 1). This takeover can be monitored by very simple toxicity tests using a part of the community extracted from the environment. Some tests use the incorporation of building blocks for DNA (thymidine) and protein (leucine). Other tests use different substrates for microbial growth. The observation that this part of the community has become more tolerant, as measured by these simple toxicity tests, reveals that the pollutant really affects the microbial community. This is especially helpful when complex and diverse environments like biofilms, sediments and soils are studied.
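The comparison behind pollution-induced community tolerance can be illustrated with a small calculation. The sketch below estimates an EC50 for a community from a reference site and for a community from a polluted site, and expresses the difference as a tolerance ratio. The interpolation method, the function name and all data are assumptions made for this example; the activities could represent, for instance, leucine incorporation relative to an unexposed control.

```python
import math


def ec50_by_interpolation(concentrations, activities):
    """Estimate the EC50 by linear interpolation on log10(concentration).

    `activities` are relative to the unexposed control (1.0 = no inhibition)
    and are assumed to decrease with increasing concentration.
    """
    points = list(zip(concentrations, activities))
    for (c_low, a_low), (c_high, a_high) in zip(points, points[1:]):
        if a_low >= 0.5 >= a_high and a_low != a_high:
            fraction = (a_low - 0.5) / (a_low - a_high)
            log_low, log_high = math.log10(c_low), math.log10(c_high)
            return 10 ** (log_low + fraction * (log_high - log_low))
    raise ValueError("activity does not cross 50% within the tested range")


# Hypothetical short-term test results, for illustration only.
concentrations = [1.0, 10.0, 100.0, 1000.0]      # mg/L of the toxicant
reference_activity = [0.9, 0.5, 0.1, 0.0]        # community from a clean site
polluted_activity = [1.0, 0.9, 0.5, 0.1]         # community from a polluted site

ec50_reference = ec50_by_interpolation(concentrations, reference_activity)
ec50_polluted = ec50_by_interpolation(concentrations, polluted_activity)
tolerance_ratio = ec50_polluted / ec50_reference
print(f"Tolerance ratio = {tolerance_ratio:.1f}")
```

A tolerance ratio clearly above 1 indicates that the community from the polluted site tolerates more of the toxicant, which is the signal that the PICT approach looks for.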

Tests using microbial processes

The protection of ecosystem services is fundamentally different from the protection of biodiversity. When one wants to protect biodiversity, all species are equally important and worth protecting. When one wants to protect ecosystem services, only the species that perform the process have to be protected. Many contributing species can be intoxicated without having much impact on the process. An example is nitrogen transformation, which is tested by measuring the conversion of ammonium into nitrite and nitrate (see box).

The inactivation of the most sensitive species can be compensated by the prolonged activity or growth of less sensitive species. The test design of microbial process tests aims to protect the process and not the contributing species. Consequently, the process tests from Table 1 seldom play a decisive role in reducing the maximum tolerable concentration of a chemical. The reason is that single species toxicity tests are generally more sensitive, since they use a specific biological species as test organism instead of a process.

|

Box: Nitrogen transformation test

The OECD test no. 216, Soil Microorganisms: Nitrogen Transformation Test, is a very well-known toxicity test using the soil process of nitrogen transformation. The test for non-agrochemicals is designed to detect persistent adverse effects of a toxicant on the process of nitrogen transformation in soils. Powdered clover meal contains nitrogen mainly in the form of proteins, which can be degraded and oxidized to produce nitrate. Soil is amended with clover meal and treated with different concentrations of a toxicant. The soil provides both the test organisms and the test medium. A sandy soil with a low organic carbon content is used to minimize sorption of the toxicant to the soil, since sorption can decrease the toxicity of a toxicant in soil. According to the guideline, the soil microorganisms should not be exposed to fertilizers, crop protection products, biological materials or accidental contaminations for at least three months before the soil is sampled. In addition, the soil microorganisms should account for at least 1% of the soil organic carbon. This indicates that the microorganisms are still alive. The soil is incubated with clover meal and the toxicant under favorable growth conditions (optimal temperature and moisture) for the microorganisms. The quantities of nitrate formed are measured after 7 and 28 days of incubation. This allows microorganisms resistant to the toxicant to grow during the test, which can make the longer incubation time less sensitive. The nitrogen in the proteins of clover meal is converted to ammonia by general degradation processes. The conversion of clover meal to ammonia can be performed by a multitude of species and is therefore not very sensitive to inhibition by toxic compounds.

The conversion of ammonia to nitrate is generally performed in two steps. First, ammonia oxidizing bacteria or archaea oxidize ammonia into nitrite. Second, nitrite oxidizing bacteria oxidize nitrite into nitrate. These latter two steps are generally much slower than ammonium production, since they require specialized microorganisms. These specialized microorganisms also have a lower growth rate than the common microorganisms involved in the general degradation of proteins into amino acids. This makes the nitrogen transformation test much more sensitive than the carbon transformation test, which uses more common microorganisms. Under the optimal conditions in the nitrogen transformation test, some minor ammonia or nitrite oxidizing species might seem unimportant since they do not contribute much to the overall process. Nevertheless, these minor species can become of major importance under less optimal conditions. Under acid conditions, for example, only the archaea oxidize ammonia into nitrite, while the ammonia oxidizing bacteria become inhibited. The nitrogen transformation test has a minimum duration of 28 days at 20°C under optimal moisture conditions, but can be prolonged to 100 days. Shorter incubation times would make the test more sensitive. |
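The outcome of a nitrogen transformation test as described in the box can be expressed as the inhibition of nitrate formation relative to an untreated control. The sketch below shows one simple way to do this arithmetic. It is an illustration under stated assumptions, not the calculation prescribed by the guideline, and the function names and nitrate concentrations are hypothetical.

```python
def nitrate_formation_rate(nitrate_start, nitrate_end, days):
    """Average nitrate formation rate (mg nitrate per kg dry soil per day)."""
    if days <= 0:
        raise ValueError("days must be positive")
    return (nitrate_end - nitrate_start) / days


def percent_inhibition(control_rate, treated_rate):
    """Inhibition of nitrate formation relative to the untreated control."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return 100.0 * (control_rate - treated_rate) / control_rate


# Hypothetical nitrate concentrations (mg/kg dry soil), illustration only.
control_rate = nitrate_formation_rate(10.0, 66.0, days=28)   # untreated soil
treated_rate = nitrate_formation_rate(10.0, 52.0, days=28)   # soil with toxicant

inhibition = percent_inhibition(control_rate, treated_rate)
print(f"Inhibition of nitrate formation: {inhibition:.0f}%")
```

Repeating this calculation for each toxicant concentration gives the points of a concentration-response curve, from which an EC10 can be estimated.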

What is the disadvantage of growth during a toxicity test using a microbial process?

Mention the intermediates during the degradation of clover meal to nitrate.

Why are shorter microbial tests often more sensitive than longer ones?

When one microbial species in the environment is replaced by another one, can it have an effect on animals or plants?

4.3.7. Selection of test organisms - Birds

Author: Annegaaike Leopold

Reviewers: Nico van den Brink, Kees van Gestel, Peter Edwards

Learning objectives:

You should be able to

- understand and argue why birds are an important model in ecotoxicology;

- understand and argue the objective of avian toxicity testing performed for regulatory purposes;