4.4: The Distribution of Measurements and Results

Earlier we reported results for a determination of the mass of a circulating United States penny, obtaining a mean of 3.117 g and a standard deviation of 0.051 g. Table 4.11 shows results for a second, independent determination of a penny’s mass, as well as the data from the first experiment. Although the means and standard deviations for the two experiments are similar, they are not identical. The difference between the experiments raises some interesting questions. Are the results for one experiment better than those for the other experiment? Do the two experiments provide equivalent estimates for the mean and the standard deviation? What is our best estimate of a penny’s expected mass? To answer these questions we need to understand how to predict the properties of all pennies by analyzing a small sample of pennies. We begin by making a distinction between populations and samples.

Table 4.11 Results for two independent determinations of the mass of a circulating United States penny

| Penny (first experiment) | Mass (g) | Penny (second experiment) | Mass (g) |
|---|---|---|---|
| 1 | 3.080 | 1 | 3.052 |
| 2 | 3.094 | 2 | 3.141 |
| 3 | 3.107 | 3 | 3.083 |
| 4 | 3.056 | 4 | 3.083 |
| 5 | 3.112 | 5 | 3.048 |
| 6 | 3.174 | | |
| 7 | 3.198 | | |
| \(\overline{X}\) | 3.117 | \(\overline{X}\) | 3.081 |
| s | 0.051 | s | 0.037 |

4.4.1 Populations and Samples

A population is the set of all objects in the system we are investigating. For our experiment, the population is all United States pennies in circulation. This population is so large that we cannot analyze every member of the population. Instead, we select and analyze a limited subset, or sample, of the population. The data in Table 4.11, for example, are results for two samples drawn from the larger population of all circulating United States pennies.

4.4.2 Probability Distributions for Populations

Table 4.11 provides the mean and standard deviation for two samples of circulating United States pennies. What do these samples tell us about the population of pennies? What is the largest possible mass for a penny? What is the smallest possible mass? Are all masses equally probable, or are some masses more common?

To answer these questions we need to know something about how the masses of individual pennies are distributed around the population’s average mass. We represent the distribution of a population by plotting the probability or frequency of obtaining a specific result as a function of the possible results. Such plots are called probability distributions.

There are many possible probability distributions. In fact, the probability distribution can take any shape depending on the nature of the population. Fortunately many chemical systems display one of several common probability distributions. Two of these distributions, the binomial distribution and the normal distribution, are discussed in this section.

Binomial Distribution

The binomial distribution describes a population in which the result is the number of times a particular event occurs during a fixed number of trials. Mathematically, the binomial distribution is

\[P(X,N) = \dfrac{N!}{X!(N−X)!} ×p^X × (1−p)^{N− X}\]

where P(X,N) is the probability that an event occurs X times during N trials, and p is the event’s probability for a single trial. If you flip a coin five times, P(2,5) is the probability that the coin will turn up “heads” exactly twice.

Note

The term N! reads as N-factorial and is the product N × (N-1) × (N-2) ×…× 1. For example, 4! is 4 × 3 × 2 × 1 = 24. Your calculator probably has a key for calculating factorials.

A binomial distribution has well-defined measures of central tendency and spread. The expected mean value is

\[μ = Np\]

and the expected spread is given by the variance

\[σ^2 = Np(1-p)\]

or the standard deviation.

\[σ = \sqrt{Np(1-p)}\]
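
The three binomial formulas above translate directly into code. The sketch below is illustrative only: the function names are hypothetical, it assumes Python 3.8 or later for `math.comb`, and it checks itself against the coin-flip case from the text, a fair coin (p = 0.5) flipped five times.

```python
from math import comb, sqrt


def binomial_probability(x: int, n: int, p: float) -> float:
    """P(X, N): probability that an event occurs exactly x times in n trials."""
    return comb(n, x) * p**x * (1 - p) ** (n - x)


def binomial_mean(n: int, p: float) -> float:
    """Expected mean, mu = Np."""
    return n * p


def binomial_std(n: int, p: float) -> float:
    """Expected standard deviation, sigma = sqrt(Np(1 - p))."""
    return sqrt(n * p * (1 - p))


# A fair coin (p = 0.5) flipped five times: chance of exactly two heads
p_two_heads = binomial_probability(2, 5, 0.5)
print(p_two_heads)  # 0.3125, that is, 10/32
print(binomial_mean(5, 0.5), binomial_std(5, 0.5))
```

Summing `binomial_probability(k, 5, 0.5)` over k = 0 to 5 gives 1, as it must for a complete probability distribution.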

The binomial distribution describes a population whose members can take on only specific, discrete values. When you roll a die, for example, the possible values are 1, 2, 3, 4, 5, or 6. A roll of 3.45 is not possible. As shown in Example 4.10, one example of a chemical system obeying the binomial distribution is the probability of finding a particular isotope in a molecule.

Example 4.10

Carbon has two stable, non-radioactive isotopes, ¹²C and ¹³C, with relative isotopic abundances of, respectively, 98.89% and 1.11%.

(a) What are the mean and the standard deviation for the number of ¹³C atoms in a molecule of cholesterol (C₂₇H₄₄O)?

(b) What is the probability that a molecule of cholesterol has no atoms of ¹³C?

Solution

The probability of finding an atom of ¹³C in a molecule of cholesterol follows a binomial distribution, where X is the number of ¹³C atoms, N is the number of carbon atoms in a molecule of cholesterol, and p is the probability that any single atom of carbon is ¹³C.

(a) The mean number of ¹³C atoms in a molecule of cholesterol is

\[μ = Np = 27 × 0.0111 = 0.300\]

with a standard deviation of

\[σ = \sqrt{Np(1-p)} = \sqrt{27 × 0.0111 × (1−0.0111)} = 0.544\]

(b) The probability of finding a molecule of cholesterol without an atom of ¹³C is

\[P(0,27) = \dfrac{27!}{0!(27− 0)!} × (0.0111)^0 × (1−0.0111)^{27−0} = 0.740\]

There is a 74.0% probability that a molecule of cholesterol will not have an atom of ¹³C, a result consistent with the observation that the mean number of ¹³C atoms per molecule of cholesterol, 0.300, is less than one.

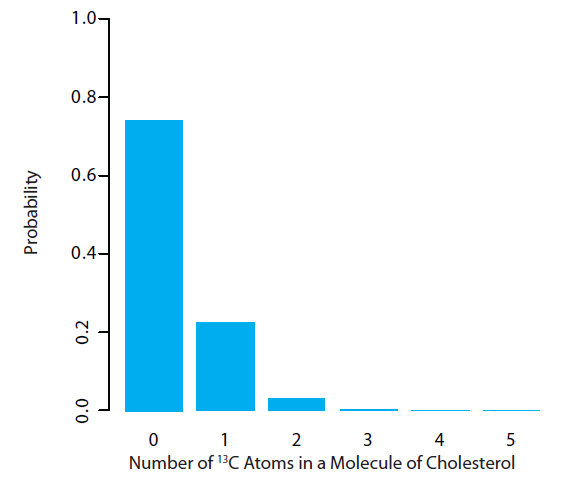

A portion of the binomial distribution for atoms of ¹³C in cholesterol is shown in Figure 4.6. Note in particular that there is little probability of finding more than two atoms of ¹³C in any molecule of cholesterol.

Figure 4.6 Portion of the binomial distribution for the number of naturally occurring ¹³C atoms in a molecule of cholesterol. Only 3.6% of cholesterol molecules contain more than one atom of ¹³C, and only 0.33% contain more than two atoms of ¹³C.
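
The numbers in Example 4.10 and in the Figure 4.6 caption can be reproduced with a few lines of Python. This is a sketch assuming Python 3.8+ (`math.comb`); it treats the 27 carbon atoms as independent trials with p = 0.0111, exactly as the solution does, and the helper name `pmf` is hypothetical.

```python
from math import comb, sqrt

N, p = 27, 0.0111   # carbon atoms in C27H44O; fractional abundance of 13C


def pmf(x: int) -> float:
    """Binomial probability that one molecule contains exactly x atoms of 13C."""
    return comb(N, x) * p**x * (1 - p) ** (N - x)


mean = N * p
std = sqrt(N * p * (1 - p))
p_none = pmf(0)
p_more_than_one = 1 - pmf(0) - pmf(1)
p_more_than_two = 1 - pmf(0) - pmf(1) - pmf(2)

print(f"mean = {mean:.3f}, std = {std:.3f}")        # mean = 0.300, std = 0.544
print(f"P(0) = {p_none:.3f}")                       # P(0) = 0.740
print(f"P(>1) = {100 * p_more_than_one:.1f}%")      # P(>1) = 3.6%
print(f"P(>2) = {100 * p_more_than_two:.2f}%")      # P(>2) = 0.33%
```

Computing the tails as one minus the sum of the first few terms avoids summing the 25 or more remaining, vanishingly small, probabilities.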

Normal Distribution

A binomial distribution describes a population whose members have only certain, discrete values. This is the case with the number of ¹³C atoms in cholesterol. A molecule of cholesterol, for example, can have two ¹³C atoms, but it cannot have 2.5 atoms of ¹³C. A population is continuous if its members may take on any value. The efficiency of extracting cholesterol from a sample, for example, can take on any value between 0% (no cholesterol extracted) and 100% (all cholesterol extracted).

The most common continuous distribution is the Gaussian, or normal distribution, the equation for which is

\[f(X) = \dfrac{1}{\sqrt{2πσ^2}} e^{-\Large{\frac{(X - μ)^2}{2σ^2}}}\]

where μ is the expected mean for a population with n members

\[μ = \dfrac{\sum_{i}X_i}{n}\]

and σ² is the population’s variance.

\[σ^2 = \dfrac{\sum_{i} (X_i - μ)^2}{n}\tag{4.8}\]

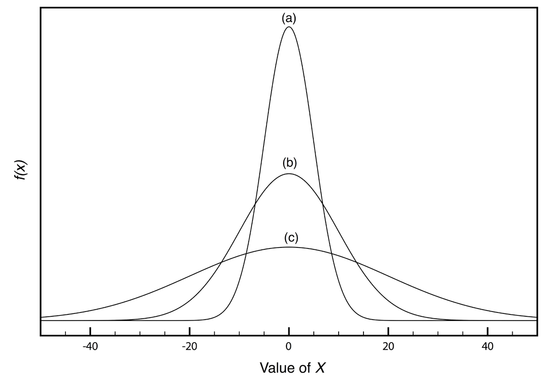

Examples of normal distributions, each with an expected mean of 0 and with variances of 25, 100, or 400, are shown in Figure 4.7. Two features of these normal distribution curves deserve attention. First, note that each normal distribution has a single maximum corresponding to μ, and that the distribution is symmetrical about this value. Second, increasing the population’s variance increases the distribution’s spread and decreases its height; the area under the curve, however, is the same for all three distributions.
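
Both features can be checked numerically. The sketch below, which uses only Python’s standard library, evaluates f(X) at X = μ for the three variances in Figure 4.7 and estimates each area with a simple trapezoid rule; the function names, the step count, and the choice to integrate over ±10σ are illustrative assumptions, not part of the text.

```python
from math import exp, pi, sqrt


def normal_pdf(x, mu, var):
    """Gaussian probability density f(X) with expected mean mu and variance var."""
    return exp(-((x - mu) ** 2) / (2 * var)) / sqrt(2 * pi * var)


def area_under(mu, var, lo, hi, steps=20_000):
    """Trapezoid-rule estimate of the area under f(X) between lo and hi."""
    h = (hi - lo) / steps
    total = 0.5 * (normal_pdf(lo, mu, var) + normal_pdf(hi, mu, var))
    total += sum(normal_pdf(lo + i * h, mu, var) for i in range(1, steps))
    return total * h


results = {}
for var in (25, 100, 400):
    sigma = sqrt(var)
    peak = normal_pdf(0, 0, var)                        # height of the curve at X = mu
    area = area_under(0, var, -10 * sigma, 10 * sigma)  # +/-10 sigma holds essentially all the area
    results[var] = (peak, area)
    print(f"variance {var:>3}: peak height {peak:.4f}, area {area:.4f}")
```

Each fourfold increase in variance doubles σ and halves the peak height, while every area comes out as 1.0000.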

The area under a normal distribution curve is an important and useful property, as it is equal to the probability of finding a member of the population within a particular range of values. In Figure 4.7, for example, 99.99% of the population shown in curve (a) have values of X between –20 and 20. For curve (c), 68.26% of the population’s members have values of X between –20 and 20. (For curve (a), σ = 5, so these limits are μ ± 4σ; for curve (c), σ = 20, so they are only μ ± 1σ.)

Because a normal distribution depends solely on μ and σ², the probability of finding a member of the population within a given number of standard deviations of the mean is the same for all normally distributed populations. Figure 4.8, for example, shows that 68.26% of the members of a normal distribution have a value within the range μ ± 1σ, and that 95.44% of the population’s members have values within the range μ ± 2σ. Only 0.27% of a population’s members have values that differ from the expected mean by more than ±3σ. Additional ranges and probabilities are gathered together in a probability table that you will find in Appendix 3. As shown in Example 4.11, if we know the mean and standard deviation for a normally distributed population, then we can determine the percentage of the population between any defined limits.
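
Python’s `statistics.NormalDist` (Python 3.8+) can stand in for the probability table in Appendix 3. In this sketch `fraction_within` is a hypothetical helper that returns the fraction of a normal population inside μ ± kσ; the second calculation uses an arbitrary μ = 250 and σ = 5 to show that the answer depends only on k. The computed values, 68.27% and 95.45%, differ in the last digit from the 68.26% and 95.44% quoted above, which appears to reflect rounding in the printed table.

```python
from statistics import NormalDist

standard = NormalDist()  # mu = 0, sigma = 1


def fraction_within(k: float) -> float:
    """Fraction of a normal population lying within mu +/- k*sigma."""
    return standard.cdf(k) - standard.cdf(-k)


for k in (1, 2, 3):
    inside = fraction_within(k)
    print(f"within +/-{k} sigma: {100 * inside:.2f}%   outside: {100 * (1 - inside):.2f}%")

# The same fraction for a population with a different mu and sigma (mu = 250, sigma = 5)
other = NormalDist(mu=250, sigma=5)
print(f"{100 * (other.cdf(255) - other.cdf(245)):.2f}%")
```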

Figure 4.7 Normal distribution curves for:

(a) μ = 0; σ² = 25

(b) μ = 0; σ² = 100

(c) μ = 0; σ² = 400

Figure 4.8 Normal distribution curve showing the area under the curve for several different ranges of values of X. As shown here, 68.26% of the members of a normally distributed population have values within ±1σ of the population’s expected mean, and 13.59% have values between μ–1σ and μ–2σ. The area under the curve between any two limits can be found using the probability table in Appendix 3.

Example 4.11

The amount of aspirin in the analgesic tablets from a particular manufacturer is known to follow a normal distribution with μ = 250 mg and σ² = 25 mg². In a random sampling of tablets from the production line, what percentage are expected to contain between 243 and 262 mg of aspirin?

Solution

We do not determine the percentage of tablets between 243 mg and 262 mg of aspirin directly. Instead, we first find the percentage of tablets with less than 243 mg of aspirin and the percentage of tablets having more than 262 mg of aspirin. Subtracting these results from 100% gives the percentage of tablets containing between 243 mg and 262 mg of aspirin.

To find the percentage of tablets with less than 243 mg of aspirin or more than 262 mg of aspirin we calculate the deviation, z, of each limit from μ in terms of the population’s standard deviation, σ

\[z = \dfrac{X − μ}{σ}\]

where X is the limit in question. The deviation for the lower limit is

\[z_\text{lower} = \dfrac{243 − 250}{5} = −1.4\]

and the deviation for the upper limit is

\[z_\text{upper} = \dfrac{262 − 250}{5} = +2.4\]

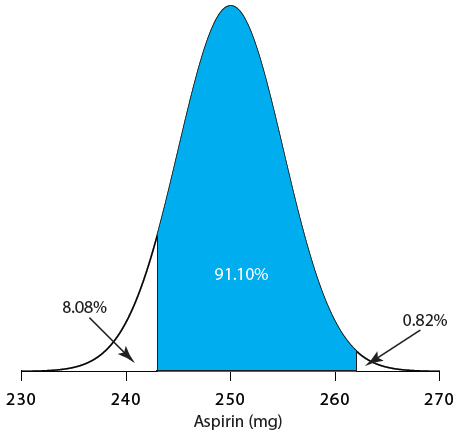

Using the table in Appendix 3, we find that the percentage of tablets with less than 243 mg of aspirin is 8.08%, and the percentage of tablets with more than 262 mg of aspirin is 0.82%. Therefore, the percentage of tablets containing between 243 and 262 mg of aspirin is

\[100.00\% - 8.08\% - 0.82\% = 91.10\%\]

Figure 4.9 shows the distribution of aspirin in the tablets, with the area in blue showing the percentage of tablets containing between 243 mg and 262 mg of aspirin.

Figure 4.9 Normal distribution for the population of aspirin tablets in Example 4.11. The population’s mean and standard deviation are 250 mg and 5 mg, respectively. The shaded area shows the percentage of tablets containing between 243 mg and 262 mg of aspirin.
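
The same calculation can be done without a printed table. In this sketch, assuming Python 3.8+ for `statistics.NormalDist`, the cumulative distribution function `cdf(x)` returns the fraction of the population below x, which plays the role of the Appendix 3 lookup for each z.

```python
from statistics import NormalDist

# Aspirin per tablet: mu = 250 mg and sigma^2 = 25 mg^2, so sigma = 5 mg
tablets = NormalDist(mu=250, sigma=5)

below = tablets.cdf(243)       # fraction with less than 243 mg (z = -1.4)
above = 1 - tablets.cdf(262)   # fraction with more than 262 mg (z = +2.4)
between = 1 - below - above    # fraction between the two limits

print(f"below 243 mg: {100 * below:.2f}%")                # 8.08%
print(f"above 262 mg: {100 * above:.2f}%")                # 0.82%
print(f"between 243 and 262 mg: {100 * between:.2f}%")    # 91.10%
```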

Practice Exercise 4.5

What percentage of aspirin tablets will contain between 240 mg and 245 mg of aspirin if the population’s mean is 250 mg and the population’s standard deviation is 5 mg?


4.4.3 Confidence Intervals for Populations

If we randomly select a single member from a population, what is its most likely value? This is an important question, and, in one form or another, it is at the heart of any analysis in which we wish to extrapolate from a sample to the sample’s parent population. One of the most important features of a population’s probability distribution is that it provides a way to answer this question.

Figure 4.8 shows that for a normal distribution, 68.26% of the population’s members are found within the range of μ ± 1σ. Stating this another way, there is a 68.26% probability that the result for a single sample drawn from a normally distributed population is in the interval μ ± 1σ. In general, if we select a single sample we expect its value, \(X_i\), to be in the range

\[X_i = μ ± zσ\tag{4.9}\]

where z sets the probability that the range contains \(X_i\): the larger the value of z, the more confident we are in assigning this range. Values reported in this fashion are called confidence intervals. Equation 4.9, for example, is the confidence interval for a single member of a population. Table 4.12 gives the confidence intervals for several values of z; when z = 1, for example, we call this the 68.26% confidence interval. For reasons we will discuss later in the chapter, a 95% confidence level is a common choice in analytical chemistry.

Table 4.12 Confidence intervals, μ ± zσ, for selected values of z

| z | Confidence Level (%) |
|---|---|
| 0.50 | 38.30 |
| 1.00 | 68.26 |
| 1.50 | 86.64 |
| 1.96 | 95.00 |
| 2.00 | 95.44 |
| 2.50 | 98.76 |
| 3.00 | 99.73 |
| 3.50 | 99.95 |
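
Table 4.12 can be read in either direction with the standard normal distribution. The sketch below, assuming Python 3.8+ (`statistics.NormalDist`), defines two hypothetical helpers: one converts a confidence level into z through the inverse cumulative distribution, `inv_cdf`, and the other converts z back into a confidence level. A few computed entries differ from the table by 0.01 in the last digit.

```python
from statistics import NormalDist


def z_for_confidence(level: float) -> float:
    """Two-sided z such that mu +/- z*sigma holds `level` (e.g. 0.95) of the population."""
    return NormalDist().inv_cdf(0.5 + level / 2)


def confidence_for_z(z: float) -> float:
    """Fraction of a normal population within mu +/- z*sigma."""
    return 2 * NormalDist().cdf(z) - 1


print(round(z_for_confidence(0.95), 2))        # 1.96
print(round(100 * confidence_for_z(2.5), 2))   # 98.76
```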

Example 4.12

What is the 95% confidence interval for the amount of aspirin in a single analgesic tablet drawn from a population for which μ is 250 mg and σ² is 25 mg²?

Solution

Using Table 4.12, we find that z is 1.96 for a 95% confidence interval. Substituting this into equation 4.9 gives the confidence interval for a single tablet as

\[X_i = μ ± 1.96σ = \mathrm{250\: mg ± (1.96 × 5) = 250\: mg ± 10\: mg}\]

A confidence interval of 250 mg ± 10 mg means that 95% of the tablets in the population contain between 240 and 260 mg of aspirin.
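
Equation 4.9 is simple enough to wrap in a function. This sketch assumes Python 3.8+ and computes z from the requested confidence level instead of reading it from Table 4.12; `single_member_interval` is a hypothetical name. Without rounding, the limits are 240.2 mg and 259.8 mg, because zσ = 1.96 × 5 = 9.8 mg, which the example rounds to 10 mg.

```python
from statistics import NormalDist


def single_member_interval(mu: float, sigma: float, level: float = 0.95):
    """Range mu +/- z*sigma expected to contain `level` of individual members (equation 4.9)."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return mu - z * sigma, mu + z * sigma


low, high = single_member_interval(250, 5)
print(f"{low:.1f} mg to {high:.1f} mg")   # 240.2 mg to 259.8 mg
```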

Alternatively, we can express a confidence interval for the expected mean in terms of the population’s standard deviation and the value of a single member drawn from the population.

\[μ = X_i ± zσ\tag{4.10}\]

Example 4.13

The population standard deviation for the amount of aspirin in a batch of analgesic tablets is known to be 7 mg of aspirin. If you randomly select and analyze a single tablet and find that it contains 245 mg of aspirin, what is the 95% confidence interval for the population’s mean?

Solution

The 95% confidence interval for the population mean is given as

\[μ = X_i ± zσ = \mathrm{245\: mg ± (1.96 × 7)\: mg = 245\: mg ± 14\: mg}\]

Therefore, there is a 95% probability that the population’s mean, μ, lies within the range of 231 mg to 259 mg of aspirin.

It is unusual to predict the population’s expected mean from the analysis of a single sample. We can extend confidence intervals to include the mean of n samples drawn from a population of known σ. The standard deviation of the mean, \(σ_{\overline{X}}\), which also is known as the standard error of the mean, is

\[σ_{\overline{X}} = \dfrac{σ}{\sqrt{n}}\]

(Problem 8 at the end of the chapter asks you to derive this equation using a propagation of uncertainty.) The confidence interval for the population’s mean, therefore, is

\[μ = \overline{X} ± \dfrac{zσ}{\sqrt{n}}\tag{4.11}\]

Example 4.14

What is the 95% confidence interval for the analgesic tablets described in Example 4.13, if an analysis of five tablets yields a mean of 245 mg of aspirin?

Solution

In this case the confidence interval is

\[\mathrm{μ = 245\: mg ± \dfrac{1.96×7}{\sqrt{5}}\: mg = 245\: mg ± 6\: mg}\]

Thus, there is a 95% probability that the population’s mean is between 239 mg and 251 mg of aspirin. As expected, the confidence interval when using the mean of five samples is narrower than that for a single sample.
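
Equation 4.11 covers both Example 4.13 and Example 4.14, because a single tablet is simply the case n = 1, which reduces it to equation 4.10. The sketch below assumes Python 3.8+ for `statistics.NormalDist`; the function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def mean_interval_known_sigma(x_bar: float, sigma: float, n: int, level: float = 0.95):
    """Confidence interval for mu from the mean of n results when sigma is known (eq. 4.11)."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    half_width = z * sigma / sqrt(n)   # z times the standard error of the mean
    return x_bar - half_width, x_bar + half_width


# Example 4.13: one tablet containing 245 mg, sigma = 7 mg
lo1, hi1 = mean_interval_known_sigma(245, 7, 1)
# Example 4.14: mean of five tablets is 245 mg, sigma = 7 mg
lo5, hi5 = mean_interval_known_sigma(245, 7, 5)
print(f"n = 1: {lo1:.0f} mg to {hi1:.0f} mg")   # n = 1: 231 mg to 259 mg
print(f"n = 5: {lo5:.0f} mg to {hi5:.0f} mg")   # n = 5: 239 mg to 251 mg
```

Because the half-width scales as \(1/\sqrt{n}\), analyzing four times as many tablets halves the width of the interval.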

Practice Exercise 4.6

An analysis of seven aspirin tablets from a population known to have a standard deviation of 5 mg gives the following results in mg aspirin per tablet:

246 249 255 251 251 247 250

What is the 95% confidence interval for the population’s expected mean?


4.4.4 Probability Distributions for Samples

In working Examples 4.11–4.14 we assumed that the amount of aspirin in analgesic tablets is normally distributed. Without analyzing every member of the population, how can we justify this assumption? In situations where we cannot study the whole population, or when we cannot predict the mathematical form of a population’s probability distribution, we must deduce the distribution from a limited sampling of its members.

Sample Distributions and the Central Limit Theorem

Let’s return to the problem of determining a penny’s mass to explore further the relationship between a population’s distribution and the distribution of a sample drawn from that population. The two sets of data in Table 4.11 are too small to provide a useful picture of a sample’s distribution. To gain a better picture of the distribution of pennies we need a larger sample, such as that shown in Table 4.13. The mean and the standard deviation for this sample of 100 pennies are 3.095 g and 0.0346 g, respectively.

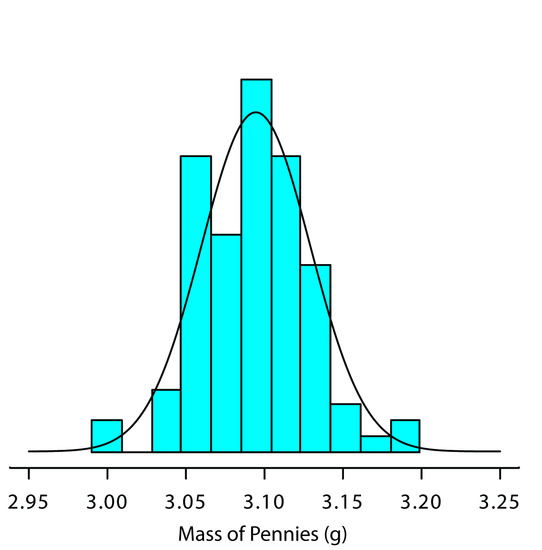

A histogram (Figure 4.10) is a useful way to examine the data in Table 4.13. To create the histogram, we divide the sample into mass intervals and determine the percentage of pennies within each interval (Table 4.14). Note that the sample’s mean, 3.095 g, lies at the center of the histogram’s middle interval, 3.086–3.104 g.

Table 4.13 Masses for a sample of 100 circulating United States pennies

| Penny | Mass (g) | Penny | Mass (g) | Penny | Mass (g) | Penny | Mass (g) |
|---|---|---|---|---|---|---|---|
| 1 | 3.126 | 26 | 3.073 | 51 | 3.101 | 76 | 3.086 |
| 2 | 3.140 | 27 | 3.084 | 52 | 3.049 | 77 | 3.123 |
| 3 | 3.092 | 28 | 3.148 | 53 | 3.082 | 78 | 3.115 |
| 4 | 3.095 | 29 | 3.047 | 54 | 3.142 | 79 | 3.055 |
| 5 | 3.080 | 30 | 3.121 | 55 | 3.082 | 80 | 3.057 |
| 6 | 3.065 | 31 | 3.116 | 56 | 3.066 | 81 | 3.097 |
| 7 | 3.117 | 32 | 3.005 | 57 | 3.128 | 82 | 3.066 |
| 8 | 3.034 | 33 | 3.115 | 58 | 3.112 | 83 | 3.113 |
| 9 | 3.126 | 34 | 3.103 | 59 | 3.085 | 84 | 3.102 |
| 10 | 3.057 | 35 | 3.086 | 60 | 3.086 | 85 | 3.033 |
| 11 | 3.053 | 36 | 3.103 | 61 | 3.084 | 86 | 3.112 |
| 12 | 3.099 | 37 | 3.049 | 62 | 3.104 | 87 | 3.103 |
| 13 | 3.065 | 38 | 2.998 | 63 | 3.107 | 88 | 3.198 |
| 14 | 3.059 | 39 | 3.063 | 64 | 3.093 | 89 | 3.103 |
| 15 | 3.068 | 40 | 3.055 | 65 | 3.126 | 90 | 3.126 |
| 16 | 3.060 | 41 | 3.181 | 66 | 3.138 | 91 | 3.111 |
| 17 | 3.078 | 42 | 3.108 | 67 | 3.131 | 92 | 3.126 |
| 18 | 3.125 | 43 | 3.114 | 68 | 3.120 | 93 | 3.052 |
| 19 | 3.090 | 44 | 3.121 | 69 | 3.100 | 94 | 3.113 |
| 20 | 3.100 | 45 | 3.105 | 70 | 3.099 | 95 | 3.085 |
| 21 | 3.055 | 46 | 3.078 | 71 | 3.097 | 96 | 3.117 |
| 22 | 3.105 | 47 | 3.147 | 72 | 3.091 | 97 | 3.142 |
| 23 | 3.063 | 48 | 3.104 | 73 | 3.077 | 98 | 3.031 |
| 24 | 3.083 | 49 | 3.146 | 74 | 3.178 | 99 | 3.083 |
| 25 | 3.065 | 50 | 3.095 | 75 | 3.054 | 100 | 3.104 |
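
As a check on the summary statistics quoted for this sample, the sketch below recomputes them from the 100 masses in Table 4.13, listed in order of penny number. It assumes Python’s standard `statistics` module; `stdev` divides by n – 1, matching the sample standard deviation used in this chapter. The results should agree with the quoted 3.095 g and 0.0346 g to within rounding.

```python
from statistics import mean, stdev

# Masses (g) of pennies 1-100 from Table 4.13, ten per line
masses = [
    3.126, 3.140, 3.092, 3.095, 3.080, 3.065, 3.117, 3.034, 3.126, 3.057,
    3.053, 3.099, 3.065, 3.059, 3.068, 3.060, 3.078, 3.125, 3.090, 3.100,
    3.055, 3.105, 3.063, 3.083, 3.065, 3.073, 3.084, 3.148, 3.047, 3.121,
    3.116, 3.005, 3.115, 3.103, 3.086, 3.103, 3.049, 2.998, 3.063, 3.055,
    3.181, 3.108, 3.114, 3.121, 3.105, 3.078, 3.147, 3.104, 3.146, 3.095,
    3.101, 3.049, 3.082, 3.142, 3.082, 3.066, 3.128, 3.112, 3.085, 3.086,
    3.084, 3.104, 3.107, 3.093, 3.126, 3.138, 3.131, 3.120, 3.100, 3.099,
    3.097, 3.091, 3.077, 3.178, 3.054, 3.086, 3.123, 3.115, 3.055, 3.057,
    3.097, 3.066, 3.113, 3.102, 3.033, 3.112, 3.103, 3.198, 3.103, 3.126,
    3.111, 3.126, 3.052, 3.113, 3.085, 3.117, 3.142, 3.031, 3.083, 3.104,
]
assert len(masses) == 100

x_bar = mean(masses)
s = stdev(masses)   # sample standard deviation: n - 1 in the denominator
print(f"mean = {x_bar:.4f} g, s = {s:.4f} g")
```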

Table 4.14 Frequency distribution for the data in Table 4.13

| Mass Interval (g) | Frequency (as %) | Mass Interval (g) | Frequency (as %) |
|---|---|---|---|
| 2.991–3.009 | 2 | 3.105–3.123 | 19 |
| 3.010–3.028 | 0 | 3.124–3.142 | 12 |
| 3.029–3.047 | 4 | 3.143–3.161 | 3 |
| 3.048–3.066 | 19 | 3.162–3.180 | 1 |
| 3.067–3.085 | 15 | 3.181–3.199 | 2 |
| 3.086–3.104 | 23 | | |

Figure 4.10 The blue bars show a histogram for the data in Table 4.13. The height of a bar corresponds to the percentage of pennies within the mass intervals shown in Table 4.14. Superimposed on the histogram is a normal distribution curve assuming that μ and σ² for the population are equivalent to \(\overline{X}\) and s² for the sample. The total area of the histogram’s bars and the area under the normal distribution curve are equal.

Figure 4.10 also includes a normal distribution curve for the population of pennies, assuming that the mean and variance for the sample provide appropriate estimates for the mean and variance of the population. Although the histogram is not perfectly symmetric, it provides a good approximation of the normal distribution curve, suggesting that the sample of 100 pennies is normally distributed. It is easy to imagine that the histogram will more closely approximate a normal distribution if we include additional pennies in our sample.

We will not offer a formal proof that the sample of pennies in Table 4.13 and the population of all circulating U.S. pennies are normally distributed. The evidence we have seen, however, strongly suggests that this is true. Although we cannot claim that the results for all analytical experiments are normally distributed, in most cases the data we collect in the laboratory are, in fact, drawn from a normally distributed population. According to the central limit theorem, when a system is subject to a variety of indeterminate errors, the results approximate a normal distribution.⁶ As the number of sources of indeterminate error increases, the results more closely approximate a normal distribution. The central limit theorem holds true even if the individual sources of indeterminate error are not normally distributed. The chief limitation to the central limit theorem is that the sources of indeterminate error must be independent and of similar magnitude so that no one source of error dominates the final distribution.

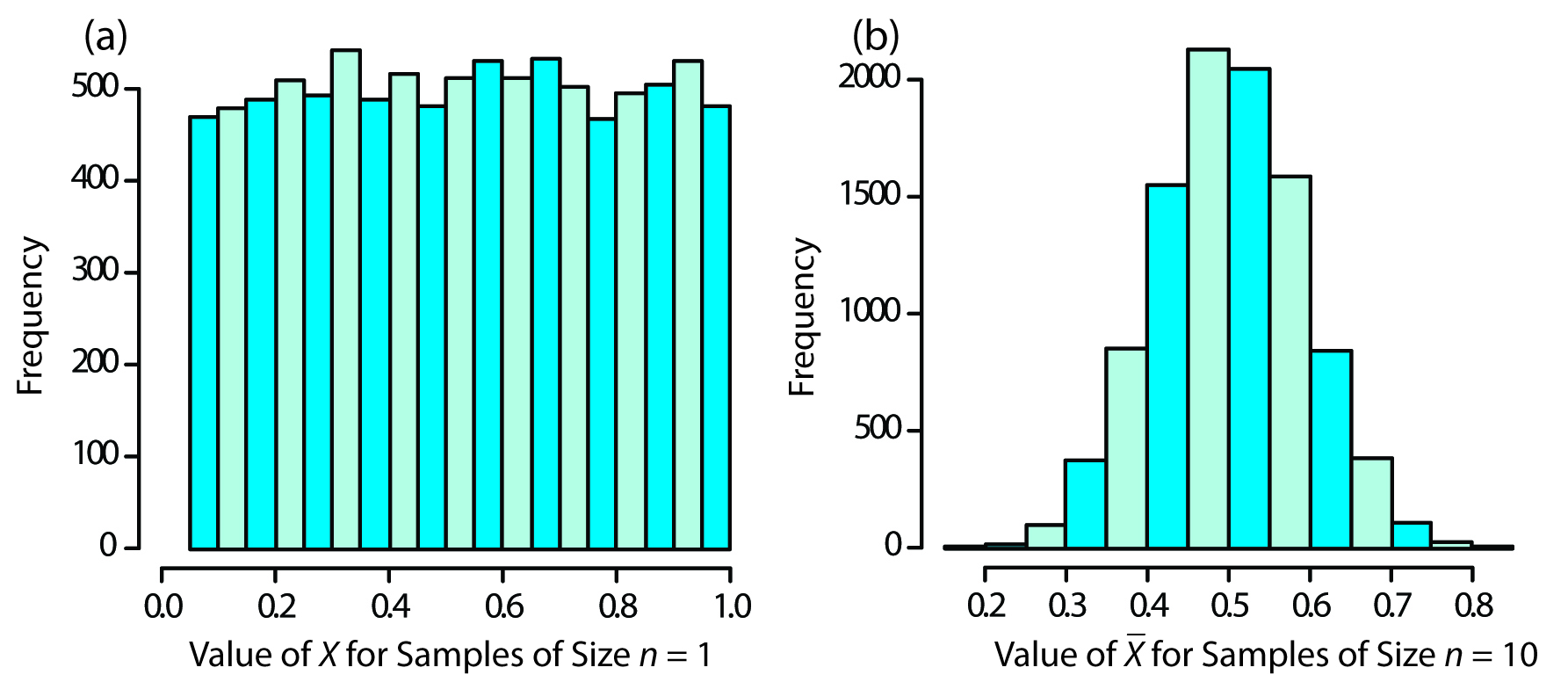

An additional feature of the central limit theorem is that a distribution of means for samples drawn from a population with any distribution will closely approximate a normal distribution if the size of the samples is large enough. Figure 4.11 shows the distribution for two samples of 10 000 drawn from a uniform distribution in which every value between 0 and 1 occurs with an equal frequency. For samples of size n = 1, the resulting distribution closely approximates the population’s uniform distribution. The distribution of the means for samples of size n = 10, however, closely approximates a normal distribution.

Note

You might reasonably ask whether this aspect of the central limit theorem is important, as it is unlikely that we will complete 10 000 analyses, each of which is the average of 10 individual trials. This concern is misleading. When we acquire a sample for analysis (a sample of soil, for example), it consists of many individual particles, each of which is an individual sample of the soil. Our analysis of the gross sample, therefore, is the mean for this large number of individual soil particles. Because of this, the central limit theorem is relevant.

Figure 4.11 Histograms for (a) 10 000 samples of size n = 1 drawn from a uniform distribution with a minimum value of 0 and a maximum value of 1, and (b) the means for 10 000 samples of size n = 10 drawn from the same uniform distribution. For (a) the mean of the 10 000 samples is 0.5042, and for (b) the mean of the 10 000 samples is 0.5006. Note that for (a) the distribution closely approximates a uniform distribution in which every possible result is equally likely, and that for (b) the distribution closely approximates a normal distribution.
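
The experiment behind Figure 4.11 is easy to repeat by simulation. This sketch uses Python’s `random` module with an arbitrary fixed seed, so its numbers will not match the 0.5042 and 0.5006 quoted in the caption exactly. Instead of drawing histograms it reports two summaries: the spread of the results, which for means of 10 should shrink to roughly σ/√10, the standard error of the mean; and the fraction of results within one standard deviation of their mean, which is about 58% for a uniform distribution and about 68% for a normal one.

```python
import random
from statistics import pstdev

random.seed(1)  # fixed, arbitrary seed so repeated runs give the same numbers


def sample_means(n, trials=10_000):
    """Means of `trials` samples, each made of n values drawn uniformly from 0 to 1."""
    return [sum(random.random() for _ in range(n)) / n for _ in range(trials)]


def fraction_within_one_sd(values):
    """Fraction of values lying within one standard deviation of their own mean."""
    m = sum(values) / len(values)
    sd = pstdev(values)
    return sum(abs(v - m) <= sd for v in values) / len(values)


singles = sample_means(1)   # resembles the uniform parent population
tens = sample_means(10)     # means of 10 values: close to a normal distribution

sigma = (1 / 12) ** 0.5     # standard deviation of a uniform(0, 1) population
for label, data, expected_sd in (("n = 1 ", singles, sigma), ("n = 10", tens, sigma / 10 ** 0.5)):
    print(f"{label}: mean {sum(data) / len(data):.4f}, sd {pstdev(data):.4f} "
          f"(expected {expected_sd:.4f}), within 1 sd: {100 * fraction_within_one_sd(data):.1f}%")
```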

Degrees of Freedom

As you read to this point, did you notice the differences between the equations for the standard deviation or variance of a population and the standard deviation or variance of a sample? If not, here are the two equations:

\[σ^2 = \dfrac{\sum_{i}(X_i − μ)^2}{n}\]

\[s^2 = \dfrac{\sum_{i}(X_i − \overline{X})^2}{n − 1}\]

Both equations measure the variance around the mean, using μ for a population and \(\overline{X}\) for a sample. Although the equations use different measures for the mean, the intention is the same for both the sample and the population. A more interesting difference is between the denominators of the two equations. In calculating the population’s variance we divide the numerator by the population’s size, n. For the sample’s variance we divide by n – 1, where n is the size of the sample. Why do we make this distinction?

A variance is the average squared deviation of individual results from the mean. In calculating an average we divide by the number of independent measurements, also known as the degrees of freedom, contributing to the calculation. For the population’s variance, the degrees of freedom is equal to the total number of members, n, in the population. In measuring every member of the population we have complete information about the population.

When calculating the sample’s variance, however, we first replace μ with \(\overline{X}\), which we also calculate from the same data. If there are n members in the sample, we can deduce the value of the nth member from the remaining n – 1 members. For example, if n = 5 and we know that the first four members of the sample are 1, 2, 3 and 4, and that the mean is 3, then the fifth member of the sample must be

\[X_5 = (\overline{X}×n) − X_1− X_2− X_3− X_4 = (3×5) − 1 − 2 − 3 − 4 = 5\]

Using n – 1 in place of n when calculating the sample’s variance ensures that s² is an unbiased estimator of σ².
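
A short simulation shows what “unbiased” means here. The sketch assumes a hypothetical normal population with μ = 0 and σ² = 1, draws many samples of five, and averages the two candidate variance estimates. Because each sample’s deviations are measured from its own mean, \(\overline{X}\), dividing by n underestimates σ² by the factor (n – 1)/n, which is 0.8 for n = 5, while dividing by n – 1 averages close to σ². The seed and the number of trials are arbitrary choices.

```python
import random

random.seed(7)          # fixed, arbitrary seed so repeated runs give the same numbers
n, trials = 5, 20_000   # small samples make the bias easy to see

total_divide_by_n = 0.0
total_divide_by_n_minus_1 = 0.0
for _ in range(trials):
    # Hypothetical population: normal with mu = 0 and sigma^2 = 1
    sample = [random.gauss(0.0, 1.0) for _ in range(n)]
    x_bar = sum(sample) / n                           # estimate of mu from the same data
    squares = sum((x - x_bar) ** 2 for x in sample)   # sum of squared deviations from x_bar
    total_divide_by_n += squares / n
    total_divide_by_n_minus_1 += squares / (n - 1)

avg_n = total_divide_by_n / trials
avg_n_minus_1 = total_divide_by_n_minus_1 / trials
print(f"dividing by n:     {avg_n:.3f}")          # close to (n - 1)/n = 0.8
print(f"dividing by n - 1: {avg_n_minus_1:.3f}")  # close to sigma^2 = 1
```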

(Here is another way to think about degrees of freedom. We analyze samples to make predictions about the underlying population. When our sample consists of n measurements we cannot make more than n independent predictions about the population. Each time we estimate a parameter, such as the population’s mean, we lose a degree of freedom. If there are n degrees of freedom for calculating the sample’s mean, then there are n – 1 degrees of freedom remaining for calculating the sample’s variance.)

4.4.5 Confidence Intervals for Samples

Earlier we introduced the confidence interval as a way to report the most probable value for a population’s mean, μ,

\[μ = \overline{X} ± \dfrac{zσ}{\sqrt{n}}\tag{4.11}\]

where \(\overline{X}\) is the mean for a sample of size n, and σ is the population’s standard deviation. For most analyses we do not know the population’s standard deviation. We can still calculate a confidence interval, however, if we make two modifications to equation 4.11.

The first modification is straightforward: we replace the population’s standard deviation, σ, with the sample’s standard deviation, s. The second modification is less obvious. The values of z in Table 4.12 are for a normal distribution, which is a function of σ², not s². Although the sample’s variance, s², provides an unbiased estimate for the population’s variance, σ², the value of s² for any sample may differ significantly from σ². To account for the uncertainty in estimating σ², we replace the variable z in equation 4.11 with the variable t, where t is defined such that t ≥ z at all confidence levels.

\[μ = \overline{X} ± \dfrac{ts}{\sqrt{n}}\tag{4.12}\]

Values for t at the 95% confidence level are shown in Table 4.15. Note that t becomes smaller as the number of degrees of freedom increases, approaching z as n approaches infinity. The larger the sample, the more closely its confidence interval approaches the confidence interval given by equation 4.11. Appendix 4 provides additional values of t for other confidence levels.

Table 4.15 Values of t for a 95% confidence interval

| Degrees of Freedom | t | Degrees of Freedom | t |
|---|---|---|---|
| 1 | 12.706 | 12 | 2.179 |
| 2 | 4.303 | 14 | 2.145 |
| 3 | 3.182 | 16 | 2.120 |
| 4 | 2.776 | 18 | 2.101 |
| 5 | 2.571 | 20 | 2.086 |
| 6 | 2.447 | 30 | 2.042 |
| 7 | 2.365 | 40 | 2.021 |
| 8 | 2.306 | 60 | 2.000 |
| 9 | 2.262 | 100 | 1.984 |
| 10 | 2.228 | ∞ | 1.960 |

Example 4.15

What are the 95% confidence intervals for the two samples of pennies in Table 4.11?

Solution

The mean and standard deviation for the first experiment are, respectively, 3.117 g and 0.051 g. Because the sample consists of seven measurements, there are six degrees of freedom. The value of t from Table 4.15 is 2.447. Substituting into equation 4.12 gives

\[\mathrm{μ = 3.117\: g ± \dfrac{2.447 × 0.051\: g}{\sqrt{7}} = 3.117\: g ± 0.047\: g}\]

For the second experiment the mean and standard deviation are 3.081 g and 0.037 g, respectively, with four degrees of freedom. The 95% confidence interval is

\[\mathrm{μ = 3.081\: g ± \dfrac{2.776 × 0.037\: g}{\sqrt{5}} = 3.081\: g ± 0.046\: g}\]

Based on the first experiment, there is a 95% probability that the population’s mean is between 3.070 g and 3.164 g. For the second experiment, the 95% confidence interval spans 3.035 g to 3.127 g. The two confidence intervals are not identical, but the mean for each experiment is contained within the other experiment’s confidence interval. There also is an appreciable overlap of the two confidence intervals. Both of these observations are consistent with samples drawn from the same population.
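
The calculation in Example 4.15 can be sketched in Python. Since the standard library has no t distribution, the two t values needed here are copied from Table 4.15; if SciPy is installed, `scipy.stats.t.ppf(0.975, df)` returns the same two-sided 95% values. The dictionary and function names are hypothetical.

```python
from math import sqrt

# Two-sided t values at the 95% confidence level, copied from Table 4.15 (subset)
T_95 = {4: 2.776, 6: 2.447}


def t_interval(x_bar: float, s: float, n: int):
    """95% confidence interval for mu from a sample mean and standard deviation (eq. 4.12)."""
    t = T_95[n - 1]               # degrees of freedom = n - 1
    half_width = t * s / sqrt(n)
    return x_bar - half_width, x_bar + half_width


first = t_interval(3.117, 0.051, 7)
second = t_interval(3.081, 0.037, 5)
print("first:  %.3f g to %.3f g" % first)    # first:  3.070 g to 3.164 g
print("second: %.3f g to %.3f g" % second)   # second: 3.035 g to 3.127 g

# Is each experiment's mean inside the other experiment's interval?
print(second[0] <= 3.117 <= second[1], first[0] <= 3.081 <= first[1])   # True True
```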

Note

Our comparison of these two confidence intervals is rather vague and unsatisfying. We will return to this point in the next section, when we consider a statistical approach to comparing the results of experiments.

Practice Exercise 4.7

What is the 95% confidence interval for the sample of 100 pennies in Table 4.13? The mean and the standard deviation for this sample are 3.095 g and 0.0346 g, respectively. Compare your result to the confidence intervals for the samples of pennies in Table 4.11.


4.4.6 A Cautionary Statement

There is a temptation when analyzing data to plug numbers into an equation, carry out the calculation, and report the result. This is never a good idea, and you should develop the habit of constantly reviewing and evaluating your data. For example, if an analysis on five samples gives an analyte’s mean concentration as 0.67 ppm with a standard deviation of 0.64 ppm, then the 95% confidence interval is

\[\mathrm{μ = 0.67\: ppm ± \dfrac{2.776 × 0.64\: ppm}{\sqrt{5}} = 0.67\: ppm ± 0.79\: ppm}\]

This confidence interval suggests that the analyte’s true concentration lies within the range of –0.12 ppm to 1.46 ppm. Including a negative concentration within the confidence interval should lead you to reevaluate your data or conclusions. A closer examination of your data may convince you that the standard deviation is larger than expected, making the confidence interval too broad, or you may conclude that the analyte’s concentration is too small to detect accurately. (We will return to the topic of detection limits near the end of the chapter.)
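
One way to build the habit of reviewing results is to make the check explicit in code. The sketch below recomputes the interval from this paragraph, with t = 2.776 for four degrees of freedom taken from Table 4.15, and prints a warning when the lower limit is physically impossible for a concentration. The function name and the warning text are illustrative assumptions, not a standard procedure.

```python
from math import sqrt


def t_interval_95(x_bar: float, s: float, n: int, t: float):
    """mu = x_bar +/- t*s/sqrt(n); t must match n - 1 degrees of freedom at 95%."""
    half = t * s / sqrt(n)
    return x_bar - half, x_bar + half


low, high = t_interval_95(0.67, 0.64, 5, t=2.776)  # t for 4 degrees of freedom
print(f"{low:.2f} ppm to {high:.2f} ppm")           # -0.12 ppm to 1.46 ppm
if low < 0:
    # A concentration cannot be negative, so the data or the conclusion needs another look
    print("warning: interval includes a negative concentration; re-examine the data")
```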

Here is a second example of why you should closely examine your data. The results for samples drawn from a normally distributed population must be random. If the results for a sequence of samples show a regular pattern or trend, then the underlying population may not be normally distributed, or there may be a time-dependent determinate error. For example, if we randomly select 20 pennies and find that the mass of each penny is larger than that for the preceding penny, we might suspect that our balance is drifting out of calibration.
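
The penny example can be made quantitative. If results are independent draws from a continuous distribution, every possible ordering of n results is equally likely, so the chance that all n arrive in strictly increasing order is 1/n!. For 20 pennies that is about 4 × 10⁻¹⁹. The sketch below counts increases between consecutive results in a hypothetical, steadily drifting set of 20 masses; the data are made up to illustrate the check.

```python
from math import factorial


def count_increases(values):
    """Number of results that are larger than the result immediately before them."""
    return sum(later > earlier for earlier, later in zip(values, values[1:]))


def prob_strictly_increasing(n: int) -> float:
    """Chance that n independent results from a continuous distribution arrive in increasing order."""
    return 1 / factorial(n)


# Hypothetical drifting balance: 20 masses (g), each 0.002 g larger than the last
drifting = [3.080 + 0.002 * i for i in range(20)]
print(count_increases(drifting), "of", len(drifting) - 1, "steps increase")   # 19 of 19
print(f"chance if results were random: {prob_strictly_increasing(20):.1e}")
```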