4.6: Statistical Methods for Normal Distributions


The most common distribution for our results is a normal distribution. Because the area between any two limits of a normal distribution is well defined, constructing and evaluating significance tests is straightforward.

4.6.1 Comparing \(\overline{X}\) to μ

One approach for validating a new analytical method is to analyze a sample containing a known amount of analyte, μ. To judge the method’s accuracy we analyze several portions of the sample, determine the average amount of analyte in the sample, \(\overline{X}\), and use a significance test to compare it to μ. Our null hypothesis, \(H_0: \overline{X} = μ\), is that any difference between \(\overline{X}\) and μ is the result of indeterminate errors affecting the determination of \(\overline{X}\). The alternative hypothesis, \(H_A: \overline{X} ≠ μ\), is that the difference between \(\overline{X}\) and μ is too large to be explained by indeterminate error.

The test statistic is \(t_\text{exp}\), which we substitute for t in the confidence interval for μ (equation 4.12).

\[μ = \overline{X} ± \dfrac{t_\ce{exp}s}{\sqrt{n}}\tag{4.14}\]

Rearranging this equation and solving for texp

\[t_\ce{exp} = \dfrac{|μ − \overline{X}| \sqrt{n}}{s}\tag{4.15}\]

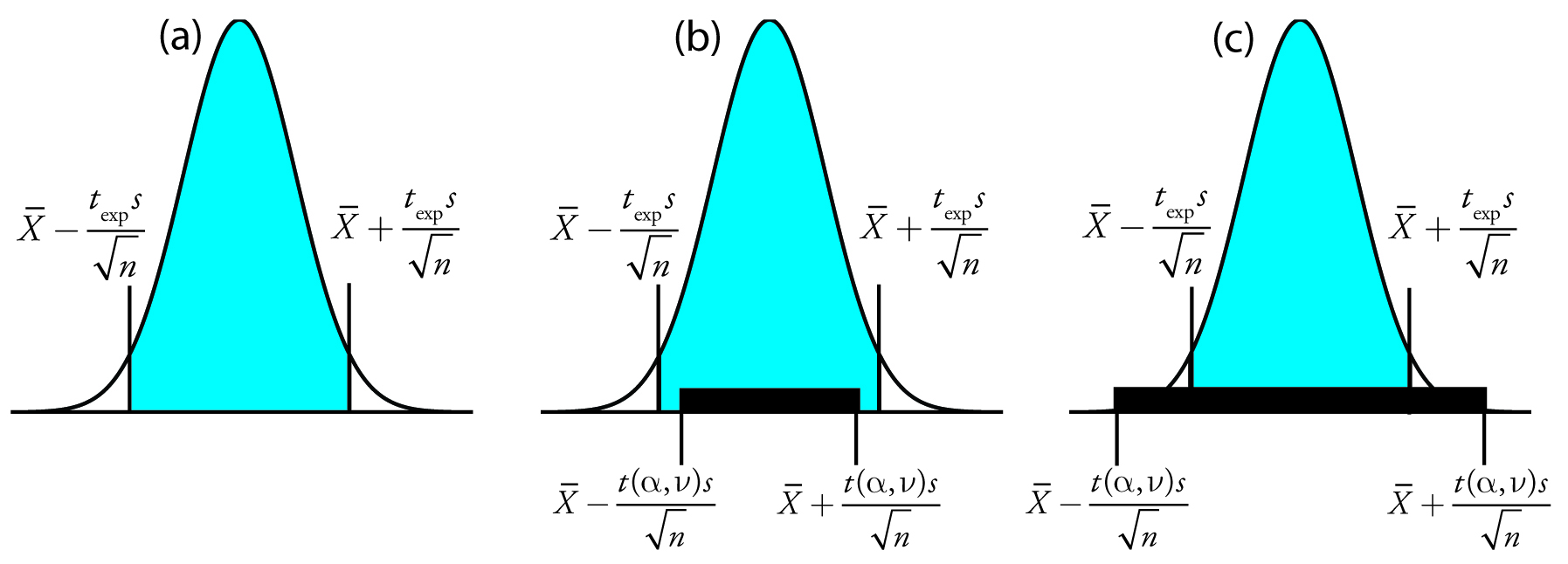

gives the value of texp when μ is at either the right edge or the left edge of the sample’s confidence interval (Figure 4.14a).

To determine if we should retain or reject the null hypothesis, we compare the value of \(t_\text{exp}\) to a critical value, t(α,ν), where α is the significance level (the probability of a type 1 error; α = 0.05 corresponds to the 95% confidence level) and ν is the degrees of freedom for the sample. (Values for t(α,ν) are in Appendix 4.) The critical value t(α,ν) defines the largest confidence interval resulting from indeterminate errors. If \(t_\text{exp} > t(α,ν)\), then our sample’s confidence interval is too large to be explained by indeterminate errors (Figure 4.14b). In this case, we reject the null hypothesis and accept the alternative hypothesis. If \(t_\text{exp} ≤ t(α,ν)\), then the confidence interval for our sample can be explained by indeterminate error, and we retain the null hypothesis (Figure 4.14c).

Example 4.16 provides a typical application of this significance test, which is known as a t-test of \(\overline{X}\) to μ.

Note

Another name for the t-test is Student’s t-test. Student was the pen name of William Gosset (1876–1937), who developed the t-test while working as a statistician for the Guinness Brewery in Dublin, Ireland. He published under the name Student because the brewery did not want its competitors to know they were using statistics to help improve the quality of their products.

Figure 4.14 Relationship between confidence intervals and the result of a significance test. (a) The shaded area under the normal distribution curve shows the confidence interval for the sample based on \(t_\text{exp}\). Based on the sample, we expect μ to fall within the shaded area. The solid bars in (b) and (c) show the confidence intervals for μ that can be explained by indeterminate error given the choice of α and the available degrees of freedom, ν. For (b) we must reject the null hypothesis because there are portions of the sample’s confidence interval that lie outside the confidence interval due to indeterminate error. In the case of (c) we retain the null hypothesis because the confidence interval due to indeterminate error completely encompasses the sample’s confidence interval.

Example 4.16

Before determining the amount of Na₂CO₃ in a sample, you decide to check your procedure by analyzing a sample known to be 98.76% w/w Na₂CO₃. Five replicate determinations of the %w/w Na₂CO₃ in the standard gave the following results.

98.71% 98.59% 98.62% 98.44% 98.58%

Using α = 0.05, is there any evidence that the analysis is giving inaccurate results?

Solution

The mean and standard deviation for the five trials are

\[\overline{X} = 98.59 \hspace{40px} s = 0.0973\]

Because there is no reason to believe that the results for the standard must be larger (or smaller) than μ, a two-tailed t-test is appropriate. The null hypothesis and alternative hypothesis are

\[H_0 : \overline{X} = μ \hspace{40px} H_A : \overline{X} ≠ μ\]

The test statistic, texp, is

\[t_\ce{exp} = \dfrac{|μ − \overline{X} | \sqrt{n}}{s} = \dfrac{|98.76 − 98.59| \sqrt{5}}{0.0973} = 3.91\]

The critical value for t(0.05,4) from Appendix 4 is 2.78. Since \(t_\text{exp}\) is greater than t(0.05,4), we reject the null hypothesis and accept the alternative hypothesis. At the 95% confidence level the difference between \(\overline{X}\) and μ is too large to be explained by indeterminate sources of error, suggesting that a determinate source of error is affecting the analysis.
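The arithmetic in Example 4.16 can be checked with a short script. This is a minimal sketch that uses only Python's standard `statistics` and `math` modules; the helper name `t_exp_mean_vs_mu` is hypothetical, and the critical value 2.78 is copied from Appendix 4 rather than computed. Because the script does not round the mean to 98.59 before subtracting it from μ, it gives \(t_\text{exp}\) ≈ 3.95 instead of 3.91, which does not change the conclusion.

```python
import math
import statistics


def t_exp_mean_vs_mu(data, mu):
    """Equation 4.15: t_exp = |mu - mean| * sqrt(n) / s."""
    n = len(data)
    mean = statistics.mean(data)
    s = statistics.stdev(data)  # sample standard deviation (n - 1 in the denominator)
    return abs(mu - mean) * math.sqrt(n) / s


# Example 4.16: five replicate analyses of a standard known to be 98.76% w/w Na2CO3
results = [98.71, 98.59, 98.62, 98.44, 98.58]
t_exp = t_exp_mean_vs_mu(results, mu=98.76)
t_crit = 2.78  # t(0.05, 4), copied from Appendix 4

print(f"t_exp = {t_exp:.2f}")
print("reject H0" if t_exp > t_crit else "retain H0")
```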

Note

There is another way to interpret the result of this t-test. Knowing that \(t_\text{exp}\) is 3.91 and that there are 4 degrees of freedom, we use Appendix 4 to estimate the value of α corresponding to a t(α,4) of 3.91. From Appendix 4, t(0.02,4) is 3.75 and t(0.01,4) is 4.60. Although we can reject the null hypothesis at the 98% confidence level, we cannot reject it at the 99% confidence level.

For a discussion of the advantages of this approach, see J. A. C. Sterne and G. D. Smith “Sifting the evidence—what’s wrong with significance tests?” BMJ 2001, 322, 226–231.

Practice Exercise 4.8

To evaluate the accuracy of a new analytical method, an analyst determines the purity of a standard for which μ is 100.0%, obtaining the following results.

99.28% 103.93% 99.43% 99.84% 97.60% 96.70% 98.02%

Is there any evidence at α = 0.05 that there is a determinate error affecting the results?


Earlier we made the point that you need to exercise caution when interpreting the results of a statistical analysis. We will keep returning to this point because it is an important one. Having determined that a result is inaccurate, as we did in Example 4.16, the next step is to identify and to correct the error. Before expending time and money on this, however, you should first critically examine your data. For example, the smaller the value of s, the larger the value of \(t_\text{exp}\). If the standard deviation for your analysis is unrealistically small, the probability of a type 1 error (rejecting a null hypothesis that is true) increases. Including a few additional replicate analyses of the standard and reevaluating the t-test may strengthen your evidence for a determinate error, or it may show that there is no evidence for a determinate error.

4.6.2 Comparing \(s^2\) to \(σ^2\)

If we regularly analyze a particular sample, we may be able to establish the expected variance, \(σ^2\), for the analysis. This often is the case, for example, in clinical labs that routinely analyze hundreds of blood samples each day. A few replicate analyses of a single sample give a sample variance, \(s^2\), whose value may or may not differ significantly from \(σ^2\).

We can use an F-test to evaluate whether a difference between \(s^2\) and \(σ^2\) is significant. The null hypothesis is \(H_0: s^2 = σ^2\) and the alternative hypothesis is \(H_A: s^2 ≠ σ^2\). The test statistic for evaluating the null hypothesis is \(F_\text{exp}\), which is given as either

\[F_\ce{exp} = \dfrac{s^2}{σ^2} \;\; (s^2 > σ^2) \hspace{40px} \textrm{or} \hspace{40px} F_\ce{exp} = \dfrac{σ^2}{s^2} \;\; (s^2 < σ^2)\tag{4.16}\]

depending on whether \(s^2\) is larger or smaller than \(σ^2\). This way of defining \(F_\text{exp}\) ensures that its value is always greater than or equal to one.

If the null hypothesis is true, then \(F_\text{exp}\) should equal one. Because of indeterminate errors, however, \(F_\text{exp}\) usually is greater than one. A critical value, \(F(α, ν_\text{num}, ν_\text{den})\), gives the largest value of \(F_\text{exp}\) that we can attribute to indeterminate error. It is chosen for a specified significance level, α, and for the degrees of freedom for the variance in the numerator, \(ν_\text{num}\), and the variance in the denominator, \(ν_\text{den}\). The degrees of freedom for \(s^2\) is n − 1, where n is the number of replicates used to determine the sample’s variance, and the degrees of freedom for \(σ^2\) is ∞. Critical values of F for α = 0.05 are listed in Appendix 5 for both one-tailed and two-tailed F-tests.

Example 4.17

A manufacturer’s process for analyzing aspirin tablets has a known variance of 25. A sample of 10 aspirin tablets is selected and analyzed for the amount of aspirin, yielding the following results in mg aspirin/tablet.

254 249 252 252 249 249 250 247 251 252

Determine whether there is any evidence of a significant difference between the sample’s variance and the expected variance at α = 0.05.

Solution

The variance for the sample of 10 tablets is 4.3. The null and alternative hypotheses are

\[H_0 : s^2 = σ^2 \hspace{40px} H_A : s^2 ≠ σ^2\]

The value for Fexp is

\[F_\ce{exp} = \dfrac{σ^2}{s^2} = \dfrac{25}{4.3} = 5.8\]

The critical value for F(0.05,∞,9) from Appendix 5 is 3.333. Since \(F_\text{exp}\) is greater than F(0.05,∞,9), we reject the null hypothesis and accept the alternative hypothesis that there is a significant difference between the sample’s variance and the expected variance. One explanation for the difference might be that the aspirin tablets were not selected randomly.
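The F-test in Example 4.17 can be sketched in a few lines. This assumes the sample variance uses n − 1 degrees of freedom, as Python's `statistics.variance` does. The helper name `f_exp_vs_sigma2` is hypothetical, and the critical value 3.333 is copied from Appendix 5. The helper places the larger of \(s^2\) and \(σ^2\) in the numerator, as equation 4.16 requires.

```python
import statistics


def f_exp_vs_sigma2(data, sigma2):
    """Equation 4.16: the larger of s^2 and sigma^2 goes in the numerator, so F_exp >= 1."""
    s2 = statistics.variance(data)  # sample variance, n - 1 degrees of freedom
    f_exp = s2 / sigma2 if s2 > sigma2 else sigma2 / s2
    return f_exp, s2


# Example 4.17: mg aspirin/tablet for 10 tablets; the known variance is 25
tablets = [254, 249, 252, 252, 249, 249, 250, 247, 251, 252]
f_exp, s2 = f_exp_vs_sigma2(tablets, sigma2=25)
f_crit = 3.333  # F(0.05, inf, 9), copied from Appendix 5

print(f"s^2 = {s2:.1f}, F_exp = {f_exp:.1f}")
print("reject H0" if f_exp > f_crit else "retain H0")
```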

4.6.3 Comparing Two Sample Variances

We can extend the F-test to compare the variances for two samples, A and B, by rewriting equation 4.16 as

\[F_\ce{exp} = \dfrac{s_\ce{A}^2}{s_\ce{B}^2}\]

defining A and B so that the value of \(F_\text{exp}\) is greater than or equal to 1.

Example 4.18

Table 4.11 shows results for two experiments to determine the mass of a circulating U.S. penny. Determine whether there is a difference in the precisions of these analyses at α = 0.05.

Solution

The variances for the two experiments are 0.00259 for the first experiment (A) and 0.00138 for the second experiment (B). The null and alternative hypotheses are

\[H_0 : s_\ce{A}^2 = s_\ce{B}^2 \hspace{40px} H_\ce{A} : s_\ce{A}^2 ≠ s_\ce{B}^2\]

and the value of Fexp is

\[F_\ce{exp} = \dfrac{s_\ce{A}^2}{s_\ce{B}^2} = \dfrac{0.00259}{0.00138} = 1.88\]

From Appendix 5, the critical value for F(0.05,6,4) is 9.197. Because \(F_\text{exp}\) < F(0.05,6,4), we retain the null hypothesis. There is no evidence at α = 0.05 to suggest that the difference in precisions is significant.
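When comparing two sample variances, the main bookkeeping step is to label the samples so that \(F_\text{exp}\) ≥ 1 while carrying each sample's degrees of freedom along with its variance. The sketch below does this with a hypothetical helper, `f_exp_two_samples`. The sample sizes of 7 and 5 are an assumption taken from Example 4.19, and they agree with the F(0.05,6,4) lookup used above. The critical value 9.197 is copied from Appendix 5.

```python
def f_exp_two_samples(var_1, n_1, var_2, n_2):
    """F_exp for two sample variances, labelled so that F_exp >= 1.

    Also returns the (numerator, denominator) degrees of freedom needed to
    look up the critical value F(alpha, nu_num, nu_den).
    """
    if var_1 >= var_2:
        return var_1 / var_2, (n_1 - 1, n_2 - 1)
    return var_2 / var_1, (n_2 - 1, n_1 - 1)


# Example 4.18: variances for the two penny experiments (sample sizes from Example 4.19)
f_exp, dof = f_exp_two_samples(0.00138, 5, 0.00259, 7)
f_crit = 9.197  # F(0.05, 6, 4), copied from Appendix 5

print(f"F_exp = {f_exp:.2f}, degrees of freedom = {dof}")
print("reject H0" if f_exp > f_crit else "retain H0")
```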

Practice Exercise 4.9

To compare two production lots of aspirin tablets, you collect samples from each and analyze them, obtaining the following results (in mg aspirin/tablet).

Lot 1: 256 248 245 245 244 248 261

Lot 2: 241 258 241 244 256 254

Is there any evidence at α = 0.05 that there is a significant difference in the variance between the results for these two samples?


4.6.4 Comparing Two Sample Means

Three factors influence the result of an analysis: the method, the sample, and the analyst. We can study the influence of these factors by conducting experiments in which we change one of the factors while holding the others constant. For example, to compare two analytical methods we can have the same analyst apply each method to the same sample, and then examine the resulting means. In a similar fashion, we can design experiments to compare analysts or to compare samples.

Note

It also is possible to design experiments in which we vary more than one of these factors. We will return to this point in Chapter 14.

Before we consider the significance tests for comparing the means of two samples, we need to make a distinction between unpaired data and paired data. This distinction is critical, and learning to recognize each type of data is important. Here are two simple examples that highlight the difference between unpaired data and paired data. In each example the goal is to compare two balances by weighing pennies.

- Example 1: Collect 10 pennies and weigh each penny on each balance. This is an example of paired data because we use the same 10 pennies to evaluate each balance.

- Example 2: Collect 10 pennies and divide them into two groups of five pennies each. Weigh the pennies in the first group on one balance and weigh the second group of pennies on the other balance. Note that no penny is weighed on both balances. This is an example of unpaired data because we evaluate each balance using a different sample of pennies.

Note

One simple test for determining whether data are paired or unpaired is to look at the size of each sample. If the samples are of different size, then the data must be unpaired. The converse is not true. If two samples are of equal size, they may be paired or unpaired.

In both examples the samples of pennies are from the same population. The difference is how we sample the population. We will learn why this distinction is important when we review the significance test for paired data; first, however, we present the significance test for unpaired data.

Unpaired Data

Consider two analyses, A and B, with means of \(\overline{X}_\text{A}\) and \(\overline{X}_\text{B}\), and standard deviations of \(s_\text{A}\) and \(s_\text{B}\). The confidence intervals for \(μ_\text{A}\) and for \(μ_\text{B}\) are

\[μ_\ce{A} = \overline{X}_\ce{A} ± \dfrac{ts_\ce{A}}{\sqrt{n_\ce{A}}}\tag{4.17}\]

\[μ_\ce{B} = \overline{X}_\ce{B} ± \dfrac{ts_\ce{B}}{\sqrt{n_\ce{B}}}\tag{4.18}\]

where \(n_\text{A}\) and \(n_\text{B}\) are the sample sizes for A and B. Our null hypothesis, \(H_0: μ_\text{A} = μ_\text{B}\), is that any difference between \(μ_\text{A}\) and \(μ_\text{B}\) is the result of indeterminate errors affecting the analyses. The alternative hypothesis, \(H_A: μ_\text{A} ≠ μ_\text{B}\), is that the difference between \(μ_\text{A}\) and \(μ_\text{B}\) is too large to be explained by indeterminate error.

To derive an equation for \(t_\text{exp}\), we assume that \(μ_\text{A}\) equals \(μ_\text{B}\), and combine equations 4.17 and 4.18.

\[\overline{X}_\ce{A} ± \dfrac{t_\ce{exp}s_\ce{A}}{\sqrt{n_\ce{A}}} = \overline{X}_\ce{B} ± \dfrac{t_\ce{exp}s_\ce{B}}{\sqrt{n_\ce{B}}}\]

Solving for \(|\overline{X}_\text{A} − \overline{X}_\text{B}|\) and using a propagation of uncertainty gives

\[|\overline{X}_\ce{A} - \overline{X}_\ce{B}| = t_\ce{exp} × \sqrt{\dfrac{s_\ce{A}^2}{n_\ce{A}} + \dfrac{s_\ce{B}^2}{n_\ce{B}}}\tag{4.19}\]

(Problem 9 asks you to use a propagation of uncertainty to show that equation 4.19 is correct.) Finally, we solve for texp

\[t_\ce{exp} = \dfrac{|\overline{X}_\ce{A} − \overline{X}_\ce{B}|}{\sqrt{\dfrac{s_\ce{A}^2}{n_\ce{A}} + \dfrac{s_\ce{B}^2}{n_\ce{B}}}}\tag{4.20}\]

and compare it to a critical value, t(α,ν), where α is the probability of a type 1 error, and ν is the degrees of freedom.

Thus far our development of this t-test is similar to that for comparing \(\overline{X}\) to μ, and yet we do not have enough information to evaluate the t-test. Do you see the problem? With two independent sets of data it is unclear how many degrees of freedom we have.

Suppose that the variances \(s_\text{A}^2\) and \(s_\text{B}^2\) provide estimates of the same \(σ^2\). In this case we can replace \(s_\text{A}^2\) and \(s_\text{B}^2\) with a pooled variance, \(s_\text{pool}^2\), that provides a better estimate for the variance. (So how do you determine if it is okay to pool the variances? Use an F-test.) Thus, equation 4.20 becomes

\[t_\ce{exp} = \dfrac{|\overline{X}_\ce{A} − \overline{X}_\ce{B}|}{s_\ce{pool} \sqrt{\dfrac{1}{n_\ce{A}} + \dfrac{1}{n_\ce{B}}}} = \dfrac{|\overline{X}_\ce{A} − \overline{X}_\ce{B}|}{s_\ce{pool}} × \sqrt{\dfrac{n_\ce{A}n_\ce{B}}{n_\ce{A} + n_\ce{B}}}\tag{4.21}\]

where \(s_\text{pool}\), the pooled standard deviation, is

\[s_\ce{pool} = \sqrt{\dfrac{(n_\ce{A} - 1)s_\ce{A}^2+ (n_\ce{B}− 1)s_\ce{B}^2}{n_\ce{A} + n_\ce{B} − 2}}\tag{4.22}\]

The denominator of equation 4.22 shows us that the degrees of freedom for a pooled standard deviation is \(n_\text{A} + n_\text{B} − 2\), which also is the degrees of freedom for the t-test.

If \(s_\text{A}^2\) and \(s_\text{B}^2\) are significantly different, we must calculate \(t_\text{exp}\) using equation 4.20. In this case, we find the degrees of freedom using the following imposing equation.

\[ν = \dfrac{\left[\dfrac{s_\ce{A}^2}{n_\ce{A}}+ \dfrac{s_\ce{B}^2}{n_\ce{B}}\right]^2}{\dfrac{\left(\dfrac{s_\ce{A}^2}{n_\ce{A}}\right)^2}{n_\ce{A}+ 1} + \dfrac{\left(\dfrac{s_\ce{B}^2}{n_\ce{B}}\right)^2}{n_\ce{B}+ 1}} - 2\tag{4.23}\]

Since the degrees of freedom must be an integer, we round to the nearest integer the value of ν obtained using equation 4.23.

Regardless of whether we calculate \(t_\text{exp}\) using equation 4.20 or equation 4.21, we reject the null hypothesis if \(t_\text{exp}\) is greater than t(α,ν), and retain the null hypothesis if \(t_\text{exp}\) is less than or equal to t(α,ν).

Example 4.19

Table 4.11 provides results for two experiments to determine the mass of a circulating U.S. penny. Determine whether there is a difference in the means of these analyses at α=0.05.

Solution

First we must determine whether we can pool the variances. This is done using an F-test. We did this analysis in Example 4.18, finding no evidence of a significant difference. The pooled standard deviation is

\[s_\ce{pool} = \sqrt{\dfrac{(7−1)(0.00259)+(5−1)(0.00138)}{7+5−2}} = 0.0459\]

with 10 degrees of freedom. To compare the means, the null and alternative hypotheses are

\[H_0 : μ_\ce{A} = μ_\ce{B} \hspace{20px} H_\ce{A} : μ_\ce{A} ≠ μ_\ce{B}\]

Because we are using the pooled standard deviation, we calculate texp using equation 4.21.

\[t_\ce{exp} = \dfrac{|3.117 - 3.081|}{0.0459} × \sqrt{\dfrac{7×5}{7+5}} = 1.34\]

The critical value for t(0.05,10), from Appendix 4, is 2.23. Because texp is less than t(0.05,10) we retain the null hypothesis. For α = 0.05 there is no evidence that the two sets of pennies are significantly different.
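Equations 4.21 and 4.22 translate directly into code. In this minimal sketch, `pooled_t` is a hypothetical helper name. It takes variances, not standard deviations, so the values 0.00259 and 0.00138 from Example 4.18 are used as they are, without squaring. The critical value 2.23 is copied from Appendix 4.

```python
import math


def pooled_t(mean_a, var_a, n_a, mean_b, var_b, n_b):
    """t_exp from equation 4.21 using the pooled standard deviation of equation 4.22."""
    dof = n_a + n_b - 2
    s_pool = math.sqrt(((n_a - 1) * var_a + (n_b - 1) * var_b) / dof)
    t_exp = abs(mean_a - mean_b) / s_pool * math.sqrt(n_a * n_b / (n_a + n_b))
    return t_exp, s_pool, dof


# Example 4.19: means and variances for the two penny experiments
t_exp, s_pool, dof = pooled_t(3.117, 0.00259, 7, 3.081, 0.00138, 5)
t_crit = 2.23  # t(0.05, 10), copied from Appendix 4

print(f"s_pool = {s_pool:.4f}, t_exp = {t_exp:.2f}, dof = {dof}")
print("reject H0" if t_exp > t_crit else "retain H0")
```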

Example 4.20

One method for determining the %w/w Na₂CO₃ in soda ash is an acid–base titration. When two analysts analyze the same sample of soda ash they obtain the results shown here.

| Analyst A | Analyst B |
|---|---|
| 86.82 | 81.01 |
| 87.04 | 86.15 |
| 86.93 | 81.73 |
| 87.01 | 83.19 |
| 86.20 | 80.27 |
| 87.00 | 83.94 |

Determine whether the difference in the mean values is significant at α=0.05.

Solution

We begin by summarizing the mean and standard deviation for each analyst.

\[\overline{X}_\ce{A} = 86.83\% \hspace{40px} \overline{X}_\ce{B} = 82.71\%\]

\[s_\ce{A} = 0.32 \hspace{40px} s_\ce{B} = 2.16\]

To determine whether we can use a pooled standard deviation, we first complete an F-test of the following null and alternative hypotheses.

\[H_0 : s_\ce{A}^2 = s_\ce{B}^2 \hspace{40px} H_\ce{A} : s_\ce{A}^2 ≠ s_\ce{B}^2\]

Calculating \(F_\text{exp}\), we obtain a value of

\[F_\ce{exp} = \dfrac{(2.16)^2}{(0.32)^2} = 45.6\]

Because \(F_\text{exp}\) is larger than the critical value of 7.15 for F(0.05,5,5) from Appendix 5, we reject the null hypothesis and accept the alternative hypothesis that there is a significant difference between the variances. As a result, we cannot calculate a pooled standard deviation.

To compare the means for the two analysts we use the following null and alternative hypotheses.

\[H_0 : μ_\ce{A} = μ_\ce{B} \hspace{20px} H_\ce{A} : μ_\ce{A} ≠ μ_\ce{B}\]

Because we cannot pool the standard deviations, we calculate \(t_\text{exp}\) using equation 4.20 instead of equation 4.21

\[t_\ce{exp}= \dfrac{|86.83−82.71|}{\sqrt{\dfrac{(0.32)^2}{6} + \dfrac{(2.16)^2}{6}}} = 4.62\]

and calculate the degrees of freedom using equation 4.23.

\[ν = \dfrac{\left[\dfrac{(0.32)^2}{6}+ \dfrac{(2.16)^2}{6}\right]^2}{\dfrac{\left(\dfrac{(0.32)^2}{6}\right)^2}{6 + 1} + \dfrac{\left(\dfrac{(2.16)^2}{6}\right)^2}{6+ 1}} - 2 = 5.3 ≈ 5\]

From Appendix 4, the critical value for t(0.05,5) is 2.57. Because \(t_\text{exp}\) is greater than t(0.05,5), we reject the null hypothesis and accept the alternative hypothesis that the means for the two analysts are significantly different at α = 0.05.
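When the variances cannot be pooled, the calculation combines equation 4.20 for \(t_\text{exp}\) with equation 4.23 for ν. The sketch below, built around a hypothetical `unpooled_t` helper, applies both to the raw data from Example 4.20. It does not round the means and standard deviations, so it gives \(t_\text{exp}\) ≈ 4.61 instead of 4.62 and ν ≈ 5.31 before rounding. Python's `round` breaks exact .5 ties toward the even integer, which does not matter here. The critical value 2.57 is copied from Appendix 4.

```python
import math
import statistics


def unpooled_t(a, b):
    """t_exp from equation 4.20 and its degrees of freedom from equation 4.23."""
    n_a, n_b = len(a), len(b)
    va = statistics.variance(a) / n_a  # s_A^2 / n_A
    vb = statistics.variance(b) / n_b  # s_B^2 / n_B
    t_exp = abs(statistics.mean(a) - statistics.mean(b)) / math.sqrt(va + vb)
    nu = (va + vb) ** 2 / (va ** 2 / (n_a + 1) + vb ** 2 / (n_b + 1)) - 2
    return t_exp, nu, round(nu)  # degrees of freedom rounded to the nearest integer


# Example 4.20: %w/w Na2CO3 reported by each analyst
analyst_a = [86.82, 87.04, 86.93, 87.01, 86.20, 87.00]
analyst_b = [81.01, 86.15, 81.73, 83.19, 80.27, 83.94]
t_exp, nu, nu_int = unpooled_t(analyst_a, analyst_b)
t_crit = 2.57  # t(0.05, 5), copied from Appendix 4

print(f"t_exp = {t_exp:.2f}, nu = {nu:.2f} -> {nu_int}")
print("reject H0" if t_exp > t_crit else "retain H0")
```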

Practice Exercise 4.10

To compare two production lots of aspirin tablets, you collect samples from each and analyze them, obtaining the following results (in mg aspirin/tablet).

Lot 1: 256 248 245 245 244 248 261

Lot 2: 241 258 241 244 256 254

Is there any evidence at α = 0.05 that there is a significant difference in the mean values for these two samples? These are the same data as in Practice Exercise 4.9.


Paired Data

Suppose we are evaluating a new method for monitoring blood glucose concentrations in patients. An important part of evaluating a new method is to compare it to an established method. What is the best way to gather data for this study? Because the variation in blood glucose levels among patients is large, we may be unable to detect a small but significant difference between the methods if we use different patients to gather data for each method. Using paired data, in which we analyze each patient’s blood using both methods, prevents a large variance within a population from adversely affecting a t-test of means.

Note

Typical blood glucose levels for most non-diabetic individuals range from 80 to 120 mg/dL (4.4–6.7 mM), rising to as high as 140 mg/dL (7.8 mM) shortly after eating. Higher levels are common for individuals who are pre-diabetic or diabetic.

When using paired data we first calculate the difference, \(d_i\), between the paired values for each sample. Using these difference values, we then calculate the average difference, \(\overline{d}\), and the standard deviation of the differences, \(s_d\). The null hypothesis, \(H_0: \overline{d} = 0\), is that there is no difference between the two samples, and the alternative hypothesis, \(H_A: \overline{d} ≠ 0\), is that the difference between the two samples is significant.

The test statistic, \(t_\text{exp}\), is derived from a confidence interval around \(\overline{d}\)

\[t_\ce{exp} = \dfrac{|\overline{d}|\sqrt{n}}{s_d}\]

where n is the number of paired samples. As is true for other forms of the t-test, we compare \(t_\text{exp}\) to t(α,ν), where the degrees of freedom, ν, is n − 1. If \(t_\text{exp}\) is greater than t(α,ν), then we reject the null hypothesis and accept the alternative hypothesis. We retain the null hypothesis if \(t_\text{exp}\) is less than or equal to t(α,ν). This is known as a paired t-test.

Example 4.21

Marecek et al. developed a new electrochemical method for rapidly determining the concentration of the antibiotic monensin in fermentation vats.⁷ The standard method for the analysis, a test for microbiological activity, is both difficult and time consuming. Samples were collected from the fermentation vats at various times during production and analyzed for the concentration of monensin using both methods. The results, in parts per thousand (ppt), are reported in the following table.

| Sample | Microbiological | Electrochemical |
|---|---|---|
| 1 | 129.5 | 132.3 |
| 2 | 89.6 | 91.0 |
| 3 | 76.6 | 73.6 |
| 4 | 52.2 | 58.2 |
| 5 | 110.8 | 104.2 |
| 6 | 50.4 | 49.9 |
| 7 | 72.4 | 82.1 |
| 8 | 141.4 | 154.1 |
| 9 | 75.0 | 73.4 |
| 10 | 34.1 | 38.1 |
| 11 | 60.3 | 60.1 |

Is there a significant difference between the methods at α = 0.05?

Solution

Acquiring samples over an extended period of time introduces a substantial time-dependent change in the concentration of monensin. Because the variation in concentration between samples is so large, we use a paired t-test with the following null and alternative hypotheses.

\[H_0 : \overline{d} = 0 \hspace{20px} H_\ce{A} : \overline{d} ≠ 0\]

Defining the difference between the methods as

\[d_i = \left(X_\ce{elect}\right)_i − \left(X_\ce{micro}\right)_i\]

we can calculate the following differences for the samples.

| Sample | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| \(d_i\) | 2.8 | 1.4 | -3.0 | 6.0 | -6.6 | -0.5 | 9.7 | 12.7 | -1.6 | 4.0 | -0.2 |

The mean and standard deviation for the differences are, respectively, 2.25 and 5.63. The value of texp is

\[t_\ce{exp} = \dfrac{|2.25| \sqrt{11}}{5.63} = 1.33\]

which is smaller than the critical value of 2.23 for t(0.05,10) from Appendix 4. We retain the null hypothesis and find no evidence for a significant difference in the methods at α = 0.05.
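The paired t-test in Example 4.21 reduces to a one-sample test on the differences. The sketch below assumes the two lists are in the same sample order, so the i-th entries form a pair, and uses a hypothetical helper, `paired_t`. Because the mean and standard deviation of the differences are not rounded, it gives \(t_\text{exp}\) ≈ 1.32 instead of 1.33. The critical value 2.23 is copied from Appendix 4.

```python
import math
import statistics


def paired_t(first, second):
    """Paired t-test statistic |d_bar| * sqrt(n) / s_d, with d_i = second_i - first_i."""
    if len(first) != len(second):
        raise ValueError("paired data must have the same number of values")
    d = [b - a for a, b in zip(first, second)]
    n = len(d)
    d_bar = statistics.mean(d)
    s_d = statistics.stdev(d)
    return abs(d_bar) * math.sqrt(n) / s_d, d_bar, s_d, n - 1


# Example 4.21: monensin (ppt) for samples 1 to 11 by each method
micro = [129.5, 89.6, 76.6, 52.2, 110.8, 50.4, 72.4, 141.4, 75.0, 34.1, 60.3]
elect = [132.3, 91.0, 73.6, 58.2, 104.2, 49.9, 82.1, 154.1, 73.4, 38.1, 60.1]
t_exp, d_bar, s_d, dof = paired_t(micro, elect)  # d_i = X_elect - X_micro
t_crit = 2.23  # t(0.05, 10), copied from Appendix 4

print(f"d_bar = {d_bar:.2f}, s_d = {s_d:.2f}, t_exp = {t_exp:.2f}")
print("reject H0" if t_exp > t_crit else "retain H0")
```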

Practice Exercise 4.11

| Location | Air-Water Interface | Sediment-Water Interface |
|---|---|---|
| 1 | 0.430 | 0.415 |
| 2 | 0.266 | 0.238 |
| 3 | 0.457 | 0.390 |
| 4 | 0.531 | 0.410 |
| 5 | 0.707 | 0.605 |
| 6 | 0.716 | 0.609 |

Suppose you are studying the distribution of zinc in a lake and want to know if there is a significant difference between the concentration of Zn²⁺ at the sediment-water interface and its concentration at the air-water interface. You collect samples from six locations (near the lake’s center, near its drainage outlet, etc.), obtaining the results (in mg/L) shown in the table. Using these data, determine if there is a significant difference between the concentration of Zn²⁺ at the two interfaces at α = 0.05.

Complete this analysis treating the data as (a) unpaired, and (b) paired. Briefly comment on your results.


One important requirement for a paired t-test is that determinate and indeterminate errors affecting the analysis must be independent of the analyte's concentration. If this is not the case, then a sample with an unusually high concentration of analyte will have an unusually large \(d_i\). Including this sample in the calculation of \(\overline{d}\) and \(s_d\) leads to a biased estimate of the expected mean and standard deviation. This is rarely a problem for samples spanning a limited range of analyte concentrations, such as those in Example 4.21 or Practice Exercise 4.11. When paired data span a wide range of concentrations, however, the magnitude of the determinate and indeterminate sources of error may not be independent of the analyte's concentration. In such cases a paired t-test may give misleading results because the paired data with the largest absolute determinate and indeterminate errors will dominate \(\overline{d}\). In this situation a regression analysis, which is the subject of the next chapter, is a more appropriate method for comparing the data.

4.6.5 Outliers

Earlier in the chapter we examined several data sets consisting of the mass of a circulating United States penny. Table 4.16 provides one more data set. Do you notice anything unusual in this data? Of the 112 pennies included in Table 4.11 and Table 4.13, no penny weighed less than 3 g. In Table 4.16, however, the mass of one penny is less than 3 g. We might ask whether this penny’s mass is so different from the other pennies that it is in error.

Data that are not consistent with the remaining data are called outliers. An outlier might exist for many reasons: the outlier might be from a different population (Is this a Canadian penny?); the outlier might be a contaminated or otherwise altered sample (Is the penny damaged?); or the outlier may result from an error in the analysis (Did we forget to tare the balance?). Regardless of its source, the presence of an outlier compromises any meaningful analysis of our data. There are many significance tests for identifying potential outliers, three of which we present here.

| Mass (g) | Mass (g) | Mass (g) |
|---|---|---|
| 3.067 | 2.514 | 3.094 |
| 3.049 | 3.048 | 3.109 |
| 3.039 | 3.079 | 3.102 |

Dixon’s Q-Test

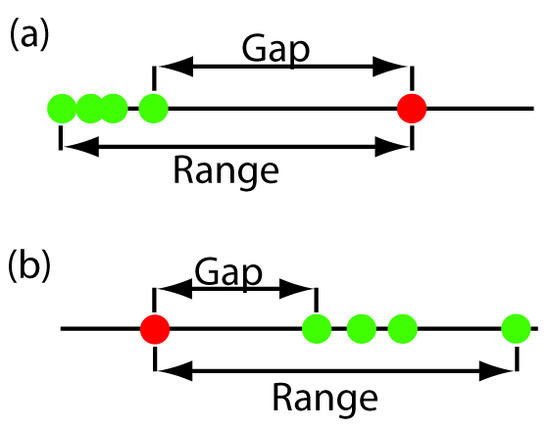

One of the most common significance tests for outliers is Dixon’s Q‑test. The null hypothesis is that there are no outliers, and the alternative hypothesis is that there is an outlier. The Q-test compares the gap between the suspected outlier and its nearest numerical neighbor to the range of the entire data set (Figure 4.15). The test statistic, \(Q_\text{exp}\), is

\[\mathrm{\mathit{Q}_{exp} = \dfrac{gap}{range} = \dfrac{|outlier's\: value - nearest\: value|}{largest\: value - smallest\: value}}\]

This equation is appropriate for evaluating a single outlier. Other forms of Dixon’s Q-test allow its extension to detecting multiple outliers.⁸

Figure 4.15 Dotplots showing the distribution of two data sets containing a possible outlier. In (a) the possible outlier’s value is larger than the remaining data, and in (b) the possible outlier’s value is smaller than the remaining data.

The value of \(Q_\text{exp}\) is compared to a critical value, Q(α, n), where α is the probability of rejecting a valid data point (a type 1 error) and n is the total number of data points. To protect against rejecting a valid data point, we usually apply the more conservative two-tailed Q-test, even though the possible outlier is the smallest or the largest value in the data set. If \(Q_\text{exp}\) is greater than Q(α, n), then we reject the null hypothesis and may exclude the outlier. We retain the possible outlier when \(Q_\text{exp}\) is less than or equal to Q(α, n). Table 4.17 provides values for Q(0.05, n) for a sample containing 3–10 values. A more extensive table is in Appendix 6. Values for Q(α, n) assume an underlying normal distribution.

Table 4.17 Critical values for Dixon’s Q-test, Q(0.05, n), two-tailed.

| n | Q(0.05, n) |
|---|---|
| 3 | 0.970 |
| 4 | 0.829 |
| 5 | 0.710 |
| 6 | 0.625 |
| 7 | 0.568 |
| 8 | 0.526 |
| 9 | 0.493 |
| 10 | 0.466 |
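The Q-test procedure can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: the function names `dixon_q` and `q_test` are hypothetical, the critical values are the two-tailed Q(0.05, n) entries listed for n = 3 to 10, and the code assumes a single suspected outlier that is either the smallest or the largest value.

```python
# Two-tailed critical values Q(0.05, n) for n = 3 to 10 (Table 4.17).
Q_CRIT_05 = {3: 0.970, 4: 0.829, 5: 0.710, 6: 0.625,
             7: 0.568, 8: 0.526, 9: 0.493, 10: 0.466}


def dixon_q(data):
    """Return (suspect, Q_exp) for the more extreme end of the sorted data.

    Assumes one suspected outlier that is either the smallest or the largest
    value, so Q_exp = gap / range. Ties go to the smallest value.
    """
    x = sorted(data)
    spread = x[-1] - x[0]                 # range of the entire data set
    if spread == 0:
        raise ValueError("all values are identical; Q_exp is undefined")
    gap_low = x[1] - x[0]                 # gap if the smallest value is suspect
    gap_high = x[-1] - x[-2]              # gap if the largest value is suspect
    if gap_low >= gap_high:
        return x[0], gap_low / spread
    return x[-1], gap_high / spread


def q_test(data, q_crit=Q_CRIT_05):
    """Return (suspect, Q_exp, reject) at alpha = 0.05."""
    n = len(data)
    if n not in q_crit:
        raise ValueError(f"critical values cover n = 3 to 10, not n = {n}")
    suspect, q_exp = dixon_q(data)
    # Reject only if Q_exp > Q(0.05, n); retain when Q_exp <= Q(0.05, n).
    return suspect, q_exp, q_exp > q_crit[n]


pennies = [3.067, 3.049, 3.039, 2.514, 3.048, 3.079, 3.094, 3.109, 3.102]
suspect, q_exp, reject = q_test(pennies)
print(f"suspect = {suspect} g, Q_exp = {q_exp:.3f}, reject: {reject}")
```

Because the critical values are stored in a dictionary keyed by n, the sketch refuses sample sizes the table does not cover rather than extrapolating.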

Grubbs’s Test

Although Dixon’s Q-test is a common method for evaluating outliers, it is no longer favored by the International Organization for Standardization (ISO), which now recommends Grubbs’s test.9 There are several versions of Grubbs’s test depending on the number of potential outliers. Here we will consider the case where there is a single suspected outlier.

The test statistic for Grubbs’s test, \(G_\ce{exp}\), is the distance between the sample’s mean, \(\overline{X}\), and the potential outlier, \(X_\ce{out}\), expressed in units of the sample’s standard deviation, s.

\[G_\ce{exp} = \dfrac{|X_\ce{out} - \overline{X}|}{s}\]

We compare the value of \(G_\ce{exp}\) to a critical value, G(α, n), where α is the probability of rejecting a valid data point and n is the number of data points in the sample. If \(G_\ce{exp}\) is greater than G(α, n), we may reject the data point as an outlier; otherwise, we retain the data point as part of the sample. Table 4.18 provides values of G(0.05, n) for samples containing 3–10 values. A more extensive table is in Appendix 7. Values of G(α, n) assume an underlying normal distribution.

Table 4.18 Critical values for Grubbs’s test, G(0.05, n).

| n | G(0.05, n) |
|---|---|
| 3 | 1.154 |
| 4 | 1.481 |
| 5 | 1.715 |
| 6 | 1.887 |
| 7 | 2.020 |
| 8 | 2.126 |
| 9 | 2.215 |
| 10 | 2.290 |
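A similar sketch for Grubbs’s test follows. It assumes a single suspected outlier, taken to be the value farthest from the mean, and uses Python’s `statistics.stdev`, which computes the sample standard deviation with n − 1 in the denominator. The names `grubbs_g` and `grubbs_test` are hypothetical, and the dictionary holds the two-sided G(0.05, n) critical values for n = 3 to 10.

```python
import statistics

# Two-sided critical values G(0.05, n) for n = 3 to 10 (compare Table 4.18).
G_CRIT_05 = {3: 1.154, 4: 1.481, 5: 1.715, 6: 1.887,
             7: 2.020, 8: 2.126, 9: 2.215, 10: 2.290}


def grubbs_g(data):
    """Return (suspect, G_exp) for the value farthest from the sample mean."""
    mean = statistics.mean(data)
    s = statistics.stdev(data)            # sample standard deviation (n - 1)
    if s == 0:
        raise ValueError("standard deviation is zero; G_exp is undefined")
    suspect = max(data, key=lambda x: abs(x - mean))
    return suspect, abs(suspect - mean) / s


def grubbs_test(data, g_crit=G_CRIT_05):
    """Return (suspect, G_exp, reject) at alpha = 0.05."""
    n = len(data)
    if n not in g_crit:
        raise ValueError(f"critical values cover n = 3 to 10, not n = {n}")
    suspect, g_exp = grubbs_g(data)
    # Reject only if G_exp > G(0.05, n); otherwise retain the data point.
    return suspect, g_exp, g_exp > g_crit[n]


pennies = [3.067, 3.049, 3.039, 2.514, 3.048, 3.079, 3.094, 3.109, 3.102]
suspect, g_exp, reject = grubbs_test(pennies)
print(f"suspect = {suspect} g, G_exp = {g_exp:.2f}, reject: {reject}")
```

Unlike the Q-test sketch, this version does not need to know in advance whether the suspect is the smallest or largest value; the distance from the mean decides.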

Chauvenet’s Criterion

Our final method for identifying outliers is Chauvenet’s criterion. Unlike Dixon’s Q-test and Grubbs’s test, this method applies to any distribution, provided you know how to calculate the probability of a particular outcome. Chauvenet’s criterion states that we can reject a data point if the probability of obtaining the data point’s value is less than \((2n)^{-1}\), where n is the size of the sample. For example, if n = 10, a result with a probability of less than \((2 \times 10)^{-1}\), or 0.05, is considered an outlier.

To calculate a potential outlier’s probability, we first calculate its standardized deviation, z,

\[z = \dfrac{|X_\ce{out} - \overline{X}|}{s}\]

where \(X_\ce{out}\) is the potential outlier, \(\overline{X}\) is the sample’s mean, and s is the sample’s standard deviation. Note that this equation is identical to the equation for \(G_\ce{exp}\) in Grubbs’s test. For a normal distribution, you can find the probability of obtaining a value of z using the probability table in Appendix 3.
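Chauvenet’s criterion can be sketched the same way for normally distributed data. In place of the Appendix 3 table, this sketch computes the upper-tail probability P(Z > z) with `math.erfc`; using the one-tailed probability is an assumption, chosen because it matches the probability read from Appendix 3 in Example 4.22. The function names `upper_tail_probability` and `chauvenet` are hypothetical.

```python
import math
import statistics


def upper_tail_probability(z):
    """P(Z > z) for a standard normal variable, via the complementary error function."""
    return 0.5 * math.erfc(z / math.sqrt(2))


def chauvenet(data):
    """Return (suspect, z, probability, critical, reject) for one suspected outlier.

    Assumes normally distributed data and a one-tailed probability P(Z > z);
    the critical probability is (2n)^-1.
    """
    n = len(data)
    mean = statistics.mean(data)
    s = statistics.stdev(data)            # sample standard deviation
    if s == 0:
        raise ValueError("standard deviation is zero; z is undefined")
    suspect = max(data, key=lambda x: abs(x - mean))
    z = abs(suspect - mean) / s           # same form as G_exp
    p = upper_tail_probability(z)
    critical = 1 / (2 * n)
    return suspect, z, p, critical, p < critical


pennies = [3.067, 3.049, 3.039, 2.514, 3.048, 3.079, 3.094, 3.109, 3.102]
print(chauvenet(pennies))
```

Because the criterion only needs a probability, applying it to a non-normal distribution would mean swapping `upper_tail_probability` for that distribution’s tail probability; the comparison with \((2n)^{-1}\) stays the same.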

Example 4.22

Table 4.16 contains the masses for nine circulating United States pennies. One of the values, 2.514 g, appears to be an outlier. Determine if this penny is an outlier using the Q-test, Grubbs’s test, and Chauvenet’s criterion. For the Q-test and Grubbs’s test, let α = 0.05.

Solution

For the Q-test the value for Qexp is

\[Q_\ce{exp} = \dfrac{|2.514 - 3.039|}{3.109 - 2.514} = 0.882\]

From Table 4.17, the critical value for Q(0.05, 9) is 0.493. Because \(Q_\ce{exp}\) is greater than Q(0.05, 9), we can assume that the penny weighing 2.514 g is an outlier.

For Grubbs’s test, we first need the mean and the standard deviation, which are 3.011 g and 0.188 g, respectively. The value of \(G_\ce{exp}\) is

\[G_\ce{exp} = \dfrac{|2.514 - 3.011|}{0.188} = 2.64\]

Using Table 4.18, we find that the critical value for G(0.05, 9) is 2.215. Because \(G_\ce{exp}\) is greater than G(0.05, 9), we can assume that the penny weighing 2.514 g is an outlier.

For Chauvenet’s criterion, the critical probability is \((2 \times 9)^{-1}\), or 0.0556. The value of z is the same as \(G_\ce{exp}\), or 2.64. Using Appendix 3, the probability for z = 2.64 is 0.00415. Because the probability of obtaining a mass of 2.514 g is less than the critical probability, we can assume that the penny weighing 2.514 g is an outlier.
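As a check on the arithmetic in this example, the short script below recomputes each statistic from the Table 4.16 masses. It assumes, as the example does, that 2.514 g is the suspect value and that it is the smallest value, so its nearest neighbor is the second entry in sorted order. The tail probability comes from `math.erfc` rather than Appendix 3 and uses the unrounded z, so it comes out slightly smaller than 0.00415.

```python
import math
import statistics

# Masses (g) of the nine pennies in Table 4.16.
pennies = [3.067, 3.049, 3.039, 2.514, 3.048, 3.079, 3.094, 3.109, 3.102]
suspect = 2.514
n = len(pennies)

# Dixon's Q-test: gap to the nearest neighbor divided by the range.
ordered = sorted(pennies)
nearest = ordered[1]                      # suspect is the smallest value here
q_exp = abs(suspect - nearest) / (ordered[-1] - ordered[0])

# Grubbs's test: distance from the mean in units of the sample standard deviation.
mean = statistics.mean(pennies)
s = statistics.stdev(pennies)
g_exp = abs(suspect - mean) / s

# Chauvenet's criterion: one-tailed normal probability compared with (2n)^-1.
p = 0.5 * math.erfc(g_exp / math.sqrt(2))
critical = 1 / (2 * n)

print(f"Q_exp = {q_exp:.3f}   Q(0.05, 9) = 0.493")
print(f"mean = {mean:.3f} g, s = {s:.3f} g, G_exp = {g_exp:.2f}   G(0.05, 9) = 2.215")
print(f"P = {p:.5f}   critical probability = {critical:.4f}")
```

Rounded to the precision used in the solution, the script’s values agree with \(Q_\ce{exp}\) = 0.882, a mean of 3.011 g, s = 0.188 g, and \(G_\ce{exp}\) = 2.64.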

You should exercise caution when using a significance test for outliers because there is a chance you will reject a valid result. In addition, you should avoid rejecting an outlier if it leads to a precision that is unreasonably better than that expected based on a propagation of uncertainty. Given these two concerns, it is not surprising that some statisticians caution against the removal of outliers.10

Note

You also can adopt a more stringent requirement for rejecting data. When using Grubbs’s test, for example, the ISO 5725 guidelines suggest retaining a value if the probability for rejecting it is greater than α = 0.05, and flagging a value as a “straggler” if the probability for rejecting it is between α = 0.05 and 0.01. A “straggler” is retained unless there is a compelling reason for its rejection. The guidelines recommend using α = 0.01 as the minimum criterion for rejecting a data point.

On the other hand, testing for outliers can provide useful information if you try to understand the source of the suspected outlier. For example, the outlier in Table 4.16 represents a significant change in the mass of a penny (an approximately 17% decrease in mass), which is the result of a change in the composition of the U.S. penny. In 1982 the composition of a U.S. penny was changed from a brass alloy consisting of 95% w/w Cu and 5% w/w Zn to a zinc core covered with copper.11 The pennies in Table 4.16, therefore, were drawn from different populations.