18.3: Entropy - A Microscopic Understanding

Introduction

Ludwig Boltzmann developed a molecular-scale statistical model that related the entropy of a system to the number of microstates possible for the system. A microstate is a specific configuration of the locations and energies of the atoms or molecules that comprise a system, and the number of microstates available to the system is denoted \(\Omega\) (or \(W\)). Boltzmann's relation is:

\[S=k \ln \Omega \quad \text{or} \quad S=k \ln W \label{Eq2}\]

Here \(k\) is the Boltzmann constant and has a value of \(1.38 \times 10^{-23}\) J/K.
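As a minimal sketch (Python is used here only for illustration, and the function name `boltzmann_entropy` is hypothetical), the Boltzmann relation can be evaluated directly. The constant in the code is the exact SI value of \(k\), which the text rounds to \(1.38 \times 10^{-23}\) J/K.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value; rounded to 1.38e-23 in the text)

def boltzmann_entropy(omega):
    """Return S = k ln(Omega) in J/K for a system with `omega` accessible microstates."""
    if omega < 1:
        raise ValueError("a system must have at least one microstate")
    # math.log accepts arbitrarily large Python ints, so huge microstate counts are fine
    return K_B * math.log(omega)

print(boltzmann_entropy(1))   # a single microstate gives zero entropy
print(boltzmann_entropy(16))  # about 3.83e-23 J/K
```

Because \(\ln \Omega\) grows so slowly, even enormous microstate counts translate into modest entropies once multiplied by \(k\).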

In General Chemistry 1 we determined ground-state electron configurations, in which electrons occupy specific orbitals; following the Aufbau principle, filling the lowest-energy orbitals first gives the ground state. If energy is added to the system, excited states become accessible, and each of these states is a type of microstate. The ground state of helium is \(1s^2\), with both electrons in the 1s orbital. As we add energy to the system its temperature rises, and the electrons can occupy various excited states. Entropy is related to the number of available states and increases as the number of states increases. More generally, we are describing the ways energy and particles can be distributed in a system, and there are microstates associated with the translational, vibrational, and rotational modes among which that energy can be distributed.

Entropy is also a state function, so the entropy change between the final (\(S_\ce{f}\)) and initial (\(S_\ce{i}\)) states is independent of the path:

\[ΔS=S_\ce{f}−S_\ce{i}=k \ln \Omega_\ce{f} − k \ln \Omega_\ce{i}=k \ln\dfrac{\Omega_\ce{f}}{\Omega_\ce{i}} \label{Eq2a}\]

For processes involving an increase in the number of microstates, \(\Omega_f > \Omega_i\), the entropy of the system increases, ΔS > 0. Conversely, processes that reduce the number of microstates, \(\Omega_f < \Omega_i\), yield a decrease in system entropy, ΔS < 0.
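A short sketch of this relation (the function name `entropy_change` is hypothetical) shows how the sign of \(\Delta S\) follows from comparing \(\Omega_f\) with \(\Omega_i\). It subtracts logarithms, \(k \ln \Omega_f - k \ln \Omega_i\), instead of first forming the ratio as a float, because dividing two very large integer counts raises an `OverflowError` once the ratio exceeds the floating-point range.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)

def entropy_change(omega_initial, omega_final):
    """Return Delta S = k ln(Omega_f / Omega_i) in J/K."""
    # k ln Omega_f - k ln Omega_i: equivalent to the ratio form, but safe for huge integer counts
    return K_B * (math.log(omega_final) - math.log(omega_initial))

print(entropy_change(10, 20) > 0)        # more microstates: entropy increases
print(entropy_change(20, 10) < 0)        # fewer microstates: entropy decreases
print(entropy_change(1, 2 ** 2000) > 0)  # ratio far beyond float range still works
```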

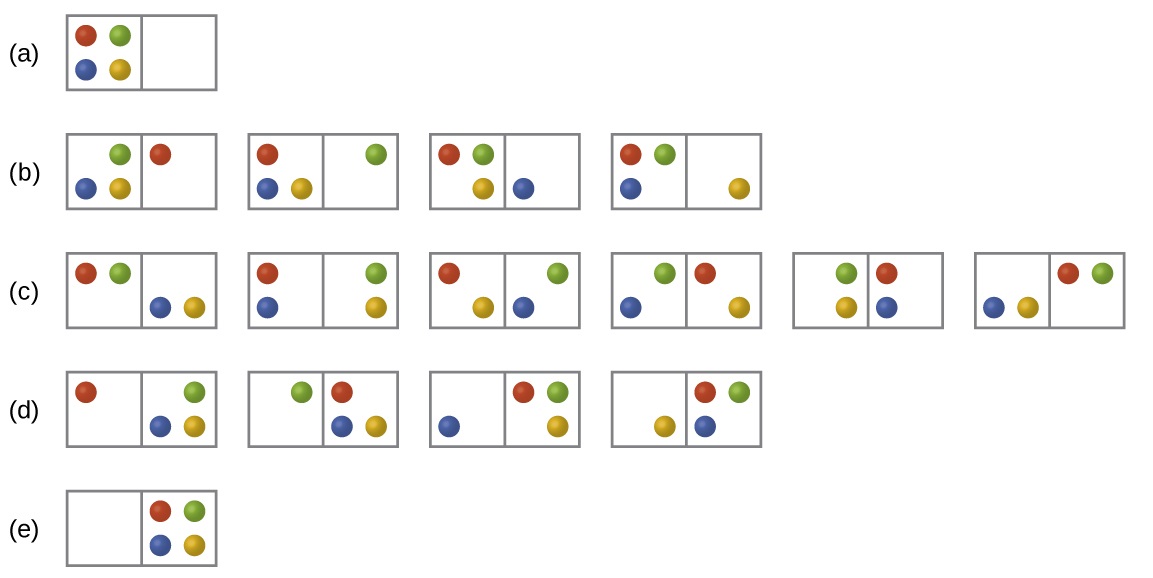

Consider the general case of a system composed of \(N\) particles distributed among \(n\) boxes. The number of microstates possible for such a system is \(n^N\). For example, distributing four particles among two boxes will result in \(2^4 = 16\) different microstates, as illustrated in Figure \(\PageIndex{2}\). Microstates with equivalent particle arrangements (not considering individual particle identities) are grouped together and are called distributions. The probability that a system will exist with its components in a given distribution is proportional to the number of microstates within the distribution. Because entropy increases (logarithmically) with the number of microstates, the most probable distribution is the one of greatest entropy.

Figure \(\PageIndex{2}\): The sixteen microstates associated with placing four particles in two boxes are shown. The microstates are collected into five distributions—(a), (b), (c), (d), and (e)—based on the numbers of particles in each box.
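The counting in Figure \(\PageIndex{2}\) can be reproduced by brute force. This sketch assumes distinguishable particles, as the figure does (microstates differ by which particle sits in which box); the helper names `microstates` and `distributions` are hypothetical.

```python
from collections import Counter
from itertools import product

def microstates(n_particles, n_boxes):
    """List every microstate: a tuple giving the box index of each distinguishable particle."""
    return list(product(range(n_boxes), repeat=n_particles))

def distributions(n_particles):
    """For two boxes, count how many microstates put a given number of particles in box 0."""
    return Counter(state.count(0) for state in microstates(n_particles, 2))

print(len(microstates(4, 2)))  # 2**4 = 16 microstates
dist = distributions(4)
for in_left in sorted(dist, reverse=True):
    print(f"{in_left} left, {4 - in_left} right: {dist[in_left]} microstate(s)")
```

The loop prints five groups containing 1, 4, 6, 4, and 1 microstates. The group with two particles in each box is distribution (c), and the two groups with all four particles in a single box are distributions (a) and (e).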

For this system, the most probable distribution is (c), in which the particles are evenly distributed between the boxes, that is, two particles in each box. Because there are six ways to choose which two of the four particles occupy the left box, distribution (c) contains six of the sixteen microstates, and the probability of finding the system in this distribution is

\[\dfrac{6}{16} = \dfrac{3}{8}\]

The least probable configuration of the system is one in which all four particles are in one box, corresponding to distributions (a) and (e), each with a probability of

\[\dfrac{1}{16}\]

The probability of finding all particles in only one box (either the left box or right box) is then

\[\left(\dfrac{1}{16}+\dfrac{1}{16}\right)=\dfrac{2}{16} = \dfrac{1}{8}\]
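The same probabilities follow from a counting formula instead of enumeration. Assuming all \(2^N\) microstates are equally likely (the assumption behind the probabilities above), a distribution with \(m\) particles in the left box contains \(\binom{N}{m}\) microstates. A sketch using exact fractions, with the hypothetical function name `distribution_probability`:

```python
from fractions import Fraction
from math import comb

def distribution_probability(n_particles, n_left):
    """Probability that exactly n_left of n_particles sit in the left box of two equal boxes."""
    # comb(N, m) equally likely microstates out of 2**N belong to this distribution
    return Fraction(comb(n_particles, n_left), 2 ** n_particles)

p_even = distribution_probability(4, 2)  # distribution (c)
p_one_box = distribution_probability(4, 4) + distribution_probability(4, 0)  # (a) plus (e)
print(p_even, p_one_box)  # 3/8 1/8
```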

As you add more particles to the system, the number of possible microstates increases exponentially (\(2^N\)). A macroscopic (laboratory-sized) system would typically consist of moles of particles (\(N \sim 10^{23}\)), and the corresponding number of microstates would be staggeringly huge. Regardless of the number of particles in the system, however, the distributions in which roughly equal numbers of particles are found in each box are always the most probable configurations.
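To see how strongly near-even distributions dominate as \(N\) grows, this sketch compares the fraction of microstates lying in near-even distributions with the probability of finding every particle in one box. It assumes all microstates are equally likely, takes "near-even" to mean within \(N/10\) of an exact half (an arbitrary choice for illustration), and uses hypothetical helper names. For a mole of particles \(2^N\) is far too large to write out, so the last line only estimates how many decimal digits it has.

```python
import math
from fractions import Fraction

def p_all_in_one_box(n_particles):
    """Probability that every particle is in the same box (either one): 2 of 2**N microstates."""
    return Fraction(2, 2 ** n_particles)

def near_even_fraction(n_particles):
    """Fraction of the 2**N microstates with |m - N/2| <= N/10 particles in the left box."""
    # 5 * |2m - N| <= N is the integer form of |m - N/2| <= N/10
    count = sum(math.comb(n_particles, m) for m in range(n_particles + 1)
                if 5 * abs(2 * m - n_particles) <= n_particles)
    return Fraction(count, 2 ** n_particles)

for n in (4, 10, 100, 1000):
    print(n, float(near_even_fraction(n)), float(p_all_in_one_box(n)))

N_A = 6.02214076e23  # Avogadro constant, particles per mole
print(f"2**N for one mole has about {N_A * math.log10(2):.2e} decimal digits")
```

The single exact even split actually becomes less likely as \(N\) grows, but the band of near-even distributions captures almost all of the microstates, which is the sense in which roughly equal filling is always the most probable outcome.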

In Section 18.1.3 we looked at the expansion of a gas into a vacuum. Taking a statistical thermodynamic approach, the least probable configuration is the one with all the particles in one bulb and none in the other (analogous to distributions (a) and (e) of Figure 18.3.2 above), and this state has the lowest entropy. The state with the highest entropy has an equal number of particles in both bulbs, analogous to distribution (c), so going from the initial state to the final state represents an increase in entropy.
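The size of this entropy increase can be estimated from \(\Delta S = k \ln(\Omega_f/\Omega_i)\). This sketch assumes an ideal gas whose non-interacting particles each gain access to a second bulb of equal volume, so every particle's positional options double and \(\Omega_f/\Omega_i = 2^N\), giving \(\Delta S = Nk \ln 2\). Working with \(N \ln 2\) rather than \(2^N\) keeps the numbers manageable; the function name `free_expansion_entropy` is hypothetical.

```python
import math

K_B = 1.380649e-23   # Boltzmann constant, J/K (exact SI value)
N_A = 6.02214076e23  # Avogadro constant, 1/mol (exact SI value)

def free_expansion_entropy(n_particles):
    """Delta S in J/K when N independent particles go from one bulb to two equal bulbs.

    Omega_f / Omega_i = 2**N, so Delta S = k ln(2**N) = N k ln 2.
    """
    return n_particles * K_B * math.log(2)

print(f"{free_expansion_entropy(N_A):.2f} J/K for one mole")
```

This prints 5.76 J/K, which is \(R \ln 2\), since \(N_A k\) equals the gas constant \(R\).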

Contributors and Attributions

Robert E. Belford (University of Arkansas Little Rock; Department of Chemistry). The breadth, depth and veracity of this work is the responsibility of Robert E. Belford, rebelford@ualr.edu. You should contact him if you have any concerns. This material has both original contributions, and content built upon prior contributions of the LibreTexts Community and other resources, including but not limited to:

Some of this material was borrowed from OpenStax.