14.3: Validating the Method as a Standard Method

- Page ID

- 220784

\( \newcommand{\vecs}[1]{\overset { \scriptstyle \rightharpoonup} {\mathbf{#1}} } \)

\( \newcommand{\vecd}[1]{\overset{-\!-\!\rightharpoonup}{\vphantom{a}\smash {#1}}} \)

\( \newcommand{\id}{\mathrm{id}}\) \( \newcommand{\Span}{\mathrm{span}}\)

( \newcommand{\kernel}{\mathrm{null}\,}\) \( \newcommand{\range}{\mathrm{range}\,}\)

\( \newcommand{\RealPart}{\mathrm{Re}}\) \( \newcommand{\ImaginaryPart}{\mathrm{Im}}\)

\( \newcommand{\Argument}{\mathrm{Arg}}\) \( \newcommand{\norm}[1]{\| #1 \|}\)

\( \newcommand{\inner}[2]{\langle #1, #2 \rangle}\)

\( \newcommand{\Span}{\mathrm{span}}\)

\( \newcommand{\id}{\mathrm{id}}\)

\( \newcommand{\Span}{\mathrm{span}}\)

\( \newcommand{\kernel}{\mathrm{null}\,}\)

\( \newcommand{\range}{\mathrm{range}\,}\)

\( \newcommand{\RealPart}{\mathrm{Re}}\)

\( \newcommand{\ImaginaryPart}{\mathrm{Im}}\)

\( \newcommand{\Argument}{\mathrm{Arg}}\)

\( \newcommand{\norm}[1]{\| #1 \|}\)

\( \newcommand{\inner}[2]{\langle #1, #2 \rangle}\)

\( \newcommand{\Span}{\mathrm{span}}\) \( \newcommand{\AA}{\unicode[.8,0]{x212B}}\)

\( \newcommand{\vectorA}[1]{\vec{#1}} % arrow\)

\( \newcommand{\vectorAt}[1]{\vec{\text{#1}}} % arrow\)

\( \newcommand{\vectorB}[1]{\overset { \scriptstyle \rightharpoonup} {\mathbf{#1}} } \)

\( \newcommand{\vectorC}[1]{\textbf{#1}} \)

\( \newcommand{\vectorD}[1]{\overrightarrow{#1}} \)

\( \newcommand{\vectorDt}[1]{\overrightarrow{\text{#1}}} \)

\( \newcommand{\vectE}[1]{\overset{-\!-\!\rightharpoonup}{\vphantom{a}\smash{\mathbf {#1}}}} \)

\( \newcommand{\vecs}[1]{\overset { \scriptstyle \rightharpoonup} {\mathbf{#1}} } \)

\( \newcommand{\vecd}[1]{\overset{-\!-\!\rightharpoonup}{\vphantom{a}\smash {#1}}} \)

\(\newcommand{\avec}{\mathbf a}\) \(\newcommand{\bvec}{\mathbf b}\) \(\newcommand{\cvec}{\mathbf c}\) \(\newcommand{\dvec}{\mathbf d}\) \(\newcommand{\dtil}{\widetilde{\mathbf d}}\) \(\newcommand{\evec}{\mathbf e}\) \(\newcommand{\fvec}{\mathbf f}\) \(\newcommand{\nvec}{\mathbf n}\) \(\newcommand{\pvec}{\mathbf p}\) \(\newcommand{\qvec}{\mathbf q}\) \(\newcommand{\svec}{\mathbf s}\) \(\newcommand{\tvec}{\mathbf t}\) \(\newcommand{\uvec}{\mathbf u}\) \(\newcommand{\vvec}{\mathbf v}\) \(\newcommand{\wvec}{\mathbf w}\) \(\newcommand{\xvec}{\mathbf x}\) \(\newcommand{\yvec}{\mathbf y}\) \(\newcommand{\zvec}{\mathbf z}\) \(\newcommand{\rvec}{\mathbf r}\) \(\newcommand{\mvec}{\mathbf m}\) \(\newcommand{\zerovec}{\mathbf 0}\) \(\newcommand{\onevec}{\mathbf 1}\) \(\newcommand{\real}{\mathbb R}\) \(\newcommand{\twovec}[2]{\left[\begin{array}{r}#1 \\ #2 \end{array}\right]}\) \(\newcommand{\ctwovec}[2]{\left[\begin{array}{c}#1 \\ #2 \end{array}\right]}\) \(\newcommand{\threevec}[3]{\left[\begin{array}{r}#1 \\ #2 \\ #3 \end{array}\right]}\) \(\newcommand{\cthreevec}[3]{\left[\begin{array}{c}#1 \\ #2 \\ #3 \end{array}\right]}\) \(\newcommand{\fourvec}[4]{\left[\begin{array}{r}#1 \\ #2 \\ #3 \\ #4 \end{array}\right]}\) \(\newcommand{\cfourvec}[4]{\left[\begin{array}{c}#1 \\ #2 \\ #3 \\ #4 \end{array}\right]}\) \(\newcommand{\fivevec}[5]{\left[\begin{array}{r}#1 \\ #2 \\ #3 \\ #4 \\ #5 \\ \end{array}\right]}\) \(\newcommand{\cfivevec}[5]{\left[\begin{array}{c}#1 \\ #2 \\ #3 \\ #4 \\ #5 \\ \end{array}\right]}\) \(\newcommand{\mattwo}[4]{\left[\begin{array}{rr}#1 \amp #2 \\ #3 \amp #4 \\ \end{array}\right]}\) \(\newcommand{\laspan}[1]{\text{Span}\{#1\}}\) \(\newcommand{\bcal}{\cal B}\) \(\newcommand{\ccal}{\cal C}\) \(\newcommand{\scal}{\cal S}\) \(\newcommand{\wcal}{\cal W}\) \(\newcommand{\ecal}{\cal E}\) \(\newcommand{\coords}[2]{\left\{#1\right\}_{#2}}\) \(\newcommand{\gray}[1]{\color{gray}{#1}}\) \(\newcommand{\lgray}[1]{\color{lightgray}{#1}}\) \(\newcommand{\rank}{\operatorname{rank}}\) \(\newcommand{\row}{\text{Row}}\) \(\newcommand{\col}{\text{Col}}\) \(\renewcommand{\row}{\text{Row}}\) \(\newcommand{\nul}{\text{Nul}}\) \(\newcommand{\var}{\text{Var}}\) \(\newcommand{\corr}{\text{corr}}\) \(\newcommand{\len}[1]{\left|#1\right|}\) \(\newcommand{\bbar}{\overline{\bvec}}\) \(\newcommand{\bhat}{\widehat{\bvec}}\) \(\newcommand{\bperp}{\bvec^\perp}\) \(\newcommand{\xhat}{\widehat{\xvec}}\) \(\newcommand{\vhat}{\widehat{\vvec}}\) \(\newcommand{\uhat}{\widehat{\uvec}}\) \(\newcommand{\what}{\widehat{\wvec}}\) \(\newcommand{\Sighat}{\widehat{\Sigma}}\) \(\newcommand{\lt}{<}\) \(\newcommand{\gt}{>}\) \(\newcommand{\amp}{&}\) \(\definecolor{fillinmathshade}{gray}{0.9}\)For an analytical method to be useful, an analyst must be able to achieve results of acceptable accuracy and precision. Verifying a method, as described in the previous section, establishes this goal for a single analyst. Another requirement for a useful analytical method is that an analyst should obtain the same result from day-to-day, and different labs should obtain the same result when analyzing the same sample. The process by which we approve a method for general use is known as validation and it involves a collaborative test of the method by analysts in several laboratories. Collaborative testing is used routinely by regulatory agencies and professional organizations, such as the U. S. Environmental Protection Agency, the American Society for Testing and Materials, the Association of Official Analytical Chemists, and the American Public Health Association. Many of the representative methods in earlier chapters are identified by these agencies as validated methods.

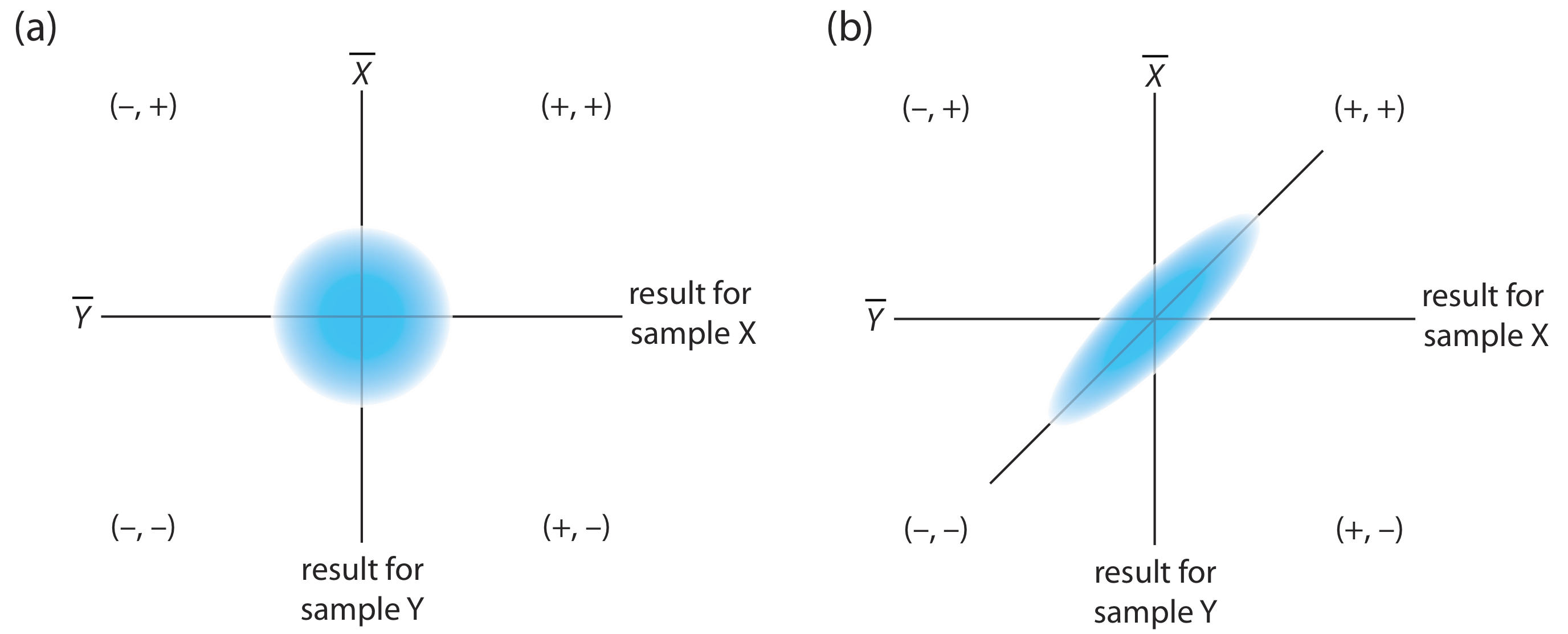

When an analyst performs a single analysis on a single sample the difference between the experimentally determined value and the expected value is influenced by three sources of error: random errors, systematic errors inherent to the method, and systematic errors unique to the analyst. If the analyst performs enough replicate analyses, then we can plot a distribution of results, as shown in Figure \(\PageIndex{1}\)a. The width of this distribution is described by a standard deviation that provides an estimate of the random errors affecting the analysis. The position of the distribution’s mean, \(\overline{X}\), relative to the sample’s true value, \(\mu\), is determined both by systematic errors inherent to the method and those systematic errors unique to the analyst. For a single analyst there is no way to separate the total systematic error into its component parts.

The goal of a collaborative test is to determine the magnitude of all three sources of error. If several analysts each analyze the same sample one time, the variation in their collective results (see Figure \(\PageIndex{1}\)b) includes contributions from random errors and systematic errors (biases) unique to the analysts. Without additional information, we cannot separate the standard deviation for this pooled data into the precision of the analysis and the systematic errors introduced by the analysts. We can use the position of the distribution, to detect the presence of a systematic error in the method.

Two-Sample Collaborative Testing

The design of a collaborative test must provide the additional information needed to separate random errors from the systematic errors introduced by the analysts. One simple approach—accepted by the Association of Official Analytical Chemists—is to have each analyst analyze two samples that are similar in both their matrix and in their concentration of analyte. To analyze the results we represent each analyst as a single point on a two-sample scatterplot, using the result for one sample as the x-coordinate and the result for the other sample as the y-coordinate [Youden, W. J. “Statistical Techniques for Collaborative Tests,” in Statistical Manual of the Association of Official Analytical Chemists, Association of Official Analytical Chemists: Washington, D. C., 1975, pp 10–11].

As shown in Figure \(\PageIndex{2}\), a two-sample chart places each analyst into one of four quadrants, which we identify as (+, +), (–, +), (–, –) and (+, –). A plus sign indicates the analyst’s result for a sample is greater than the mean for all analysts and a minus sign indicates the analyst’s result is less than the mean for all analysts. The quadrant (+, –), for example, contains those analysts that exceeded the mean for sample X and that undershot the mean for sample Y. If the variation in results is dominated by random errors, then we expect the points to be distributed randomly in all four quadrants, with an equal number of points in each quadrant. Furthermore, as shown in Figure \(\PageIndex{2}\)a, the points will cluster in a circular pattern whose center is the mean values for the two samples. When systematic errors are significantly larger than random errors, then the points fall primarily in the (+, +) and the (–, –) quadrants, forming an elliptical pattern around a line that bisects these quadrants at a 45o angle, as seen in Figure \(\PageIndex{2}\)b.

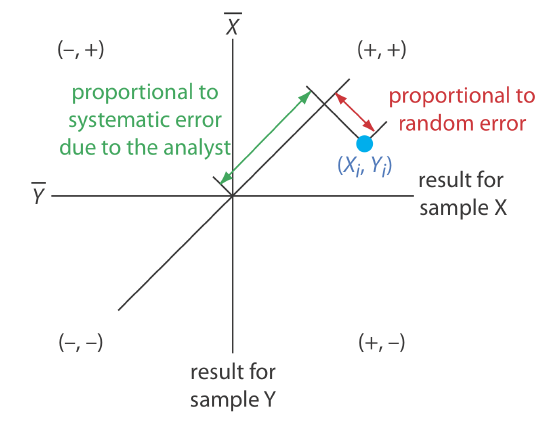

A visual inspection of a two-sample chart is an effective method for qualitatively evaluating the capabilities of a proposed standard method, as shown in Figure \(\PageIndex{3}\). The length of a perpendicular line from any point to the 45o line is proportional to the effect of random error on that analyst’s results. The distance from the intersection of the axes—which corresponds to the mean values for samples X and Y—to the perpendicular projection of a point on the 45o line is proportional to the analyst’s systematic error. An ideal standard method has small random errors and small systematic errors due to the analysts, and has a compact clustering of points that is more circular than elliptical.

We also can use the data in a two-sample chart to separate the total variation in the data, \(\sigma_\text{tot}\), into contributions from random error, \(\sigma_\text{rand}\), and from systematic errors due to the analysts, \(\sigma_\text{syst}\) [Youden, W. J. “Statistical Techniques for Collaborative Tests,” in Statistical Manual of the Association of Official Analytical Chemists, Association of Official Analytical Chemists: Washington, D. C., 1975, pp 22–24]. Because an analyst’s systematic errors are present in his or her analysis of both samples, the difference, D, between the results estimates the contribution of random error.

\[D = X_i - Y_i \nonumber\]

To estimate the total contribution from random error we use the standard deviation of these differences, sD, for all analysts

\[s_D = \sqrt{\frac {\sum_{i = 1}^n (D_i - \overline{D})^2} {2(n-1)}} = s_\text{rand} \approx \sigma_\text{rand} \label{14.1}\]

where n is the number of analysts. The factor of 2 in the denominator of equation \ref{14.1} is the result of using two values to determine Di. The total, T, of each analyst’s results

\[T_i = X_i + Y_i \nonumber\]

contains contributions from both random error and twice the analyst’s systematic error.

\[\sigma_{\mathrm{tot}}^{2}=\sigma_{\mathrm{rand}}^{2}+2 \sigma_{\mathrm{syst}}^{2} \label{14.2}\]

The standard deviation of the totals, sT, provides an estimate for \(\sigma_\text{tot}\).

\[s_{T}=\sqrt{\frac{\sum_{i=1}^{n}\left(T_{i}-\overline{T}\right)^{2}}{2(n-1)}}=s_{tot} \approx \sigma_{tot} \label{14.3}\]

Again, the factor of 2 in the denominator is the result of using two values to determine Ti.

If the systematic errors are significantly larger than the random errors, then sT is larger than sD, a hypothesis we can evaluate using a one-tailed F-test

\[F=\frac{s_{T}^{2}}{s_{D}^{2}} \nonumber\]

where the degrees of freedom for both the numerator and the denominator are n – 1. As shown in the following example, if sT is significantly larger than sD we can use equation \ref{14.2} to separate \(\sigma_\text{tot}^2\) into components that represent the random error and the systematic error.

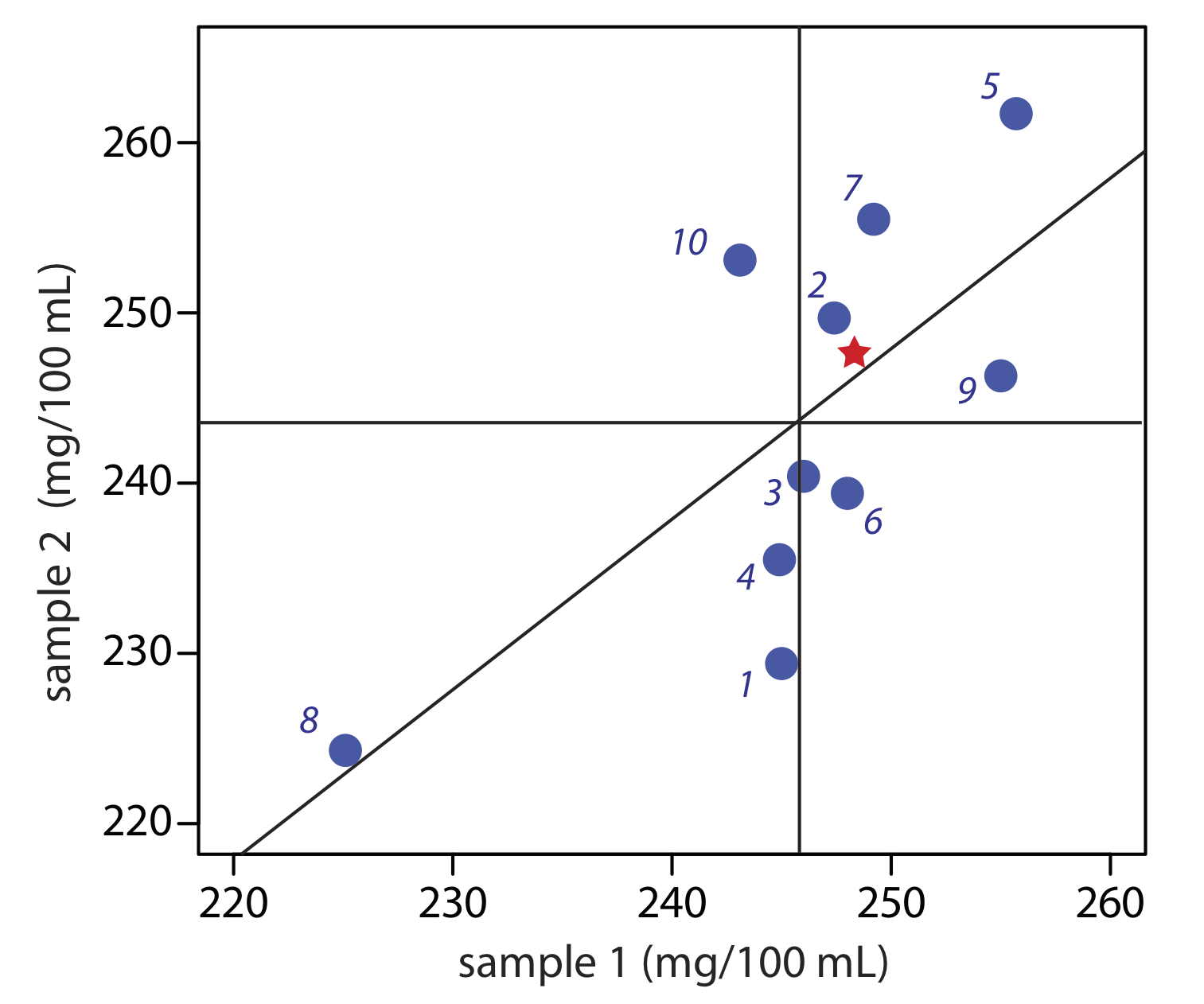

As part of a collaborative study of a new method for determining the amount of total cholesterol in blood, you send two samples to 10 analysts with instructions that they analyze each sample one time. The following results, in mg total cholesterol per 100 mL of serum, are returned to you.

| analyst | sample 1 | sample 2 |

|---|---|---|

| 1 | 245.0 | 229.4 |

| 2 | 247.4 | 249.7 |

| 3 | 246.0 | 240.4 |

| 4 | 244.9 | 235.5 |

| 5 | 255.7 | 261.7 |

| 6 | 248.0 | 239.4 |

| 7 | 249.2 | 255.5 |

| 8 | 255.1 | 224.3 |

| 9 | 255.0 | 246.3 |

| 10 | 243.1 | 253.1 |

Use this data estimate \(\sigma_\text{rand}\) and \(\sigma_\text{syst}\) for the method.

Solution

Figure \(\PageIndex{4}\) provides a two-sample plot of the results. The clustering of points suggests that the systematic errors of the analysts are significant. The vertical line at 245.9 mg/100 mL is the average value for sample 1 and the average value for sample 2 is indicated by the horizontal line at 243.5 mg/100 mL. To estimate \(\sigma_\text{rand}\) and \(\sigma_\text{syst}\) we first calculate values for Di and Ti.

| analyst | Di | Ti |

|---|---|---|

| 1 | 15.6 | 474.4 |

| 2 | –2.3 | 497.1 |

| 3 | 5.6 | 486.4 |

| 4 | 9.4 | 480.4 |

| 5 | –6.0 | 517.4 |

| 6 | 8.6 | 487.4 |

| 7 | –6.3 | 504.7 |

| 8 | 0.8 | 449.4 |

| 9 | 8.7 | 501.3 |

| 10 | –10.0 | 496.2 |

Next, we calculate the standard deviations for the differences, sD, and the totals, sT, using equation \ref{14.1} and equation \ref{14.2}, obtaining sD = 5.95 and sT = 13.3. To determine if the systematic errors between the analysts are significant, we use an F-test to compare sT and sD.

\[F=\frac{s_{T}^{2}}{s_{D}^{2}}=\frac{(13.3)^{2}}{(5.95)^{2}}=5.00 \nonumber\]

Because the F-ratio is larger than F(0.05,9,9), which is 3.179, we conclude that the systematic errors between the analysts are significant at the 95% confidence level. The estimated precision for a single analyst is

\[\sigma_{\mathrm{rand}} \approx s_{\mathrm{rand}}=s_{D}=5.95 \nonumber\]

The estimated standard deviation due to systematic errors between analysts is calculated from equation \ref{14.2}.

\[\sigma_\text{syst} = \sqrt{\frac {\sigma_\text{tot}^2 - \sigma_\text{rand}^2} {2}} \approx \sqrt{\frac {s_t^2 - s_D^2} {2}} = \sqrt{\frac {(13.3)^2-(5.95)^2} {2}} = 8.41 \nonumber\]

If the true values for the two samples are known, we also can test for the presence of a systematic error in the method. If there are no systematic method errors, then the sum of the true values, \(\mu_\text{tot}\), for samples X and Y

\[\mu_{\mathrm{tot}}=\mu_{X}+\mu_{Y} \nonumber\]

should fall within the confidence interval around T . We can use a two-tailed t-test of the following null and alternate hypotheses

\[H_{0} : \overline{T}=\mu_{\mathrm{tot}} \quad H_{\mathrm{A}} : \overline{T} \neq \mu_{\mathrm{tot}} \nonumber\]

to determine if there is evidence for a systematic error in the method. The test statistic, texp, is

\[t_\text{exp} = \frac {|\overline{T} - \mu_\text{tot}|\sqrt{n}} {s_T\sqrt{2}} \label{14.4}\]

with n – 1 degrees of freedom. We include the 2 in the denominator because sT (see equation \ref{14.3} underestimates the standard deviation when comparing \(\overline{T}\) to \(\mu_\text{tot}\).

The two samples analyzed in Example \(\PageIndex{1}\) are known to contain the following concentrations of cholesterol: \(\mu_\text{samp 1}\) = 248.3 mg/100 mL and \(\mu_\text{samp 2}\) = 247.6 mg/100 mL. Determine if there is any evidence for a systematic error in the method at the 95% confidence level.

Solution

Using the data from Example \(\PageIndex{1}\) and the true values for the samples, we know that sT is 13.3, and that

\[\overline{T} = \overline{X}_\text{samp 1 } + \overline{X}_\text{samp 2 } = 245.9 + 243.5 = 489.4 \text{ mg/100 mL} \nonumber\]

\[\mu_\text{tot} = \mu_\text{samp 1 } + mu_\text{samp 2 } = 248.3 + 247.6 = 495.9 \text{ mg/100 mL} \nonumber\]

Substituting these values into equation \ref{14.4} gives

\[t_{\mathrm{exp}}=\frac{|489.4-495.9| \sqrt{10}}{13.3 \sqrt{2}}=1.09 \nonumber\]

Because this value for texp is smaller than the critical value of 2.26 for t(0.05, 9), there is no evidence for a systematic error in the method at the 95% confidence level.

Example \(\PageIndex{1}\) and Example \(\PageIndex{2}\) illustrate how we can use a pair of similar samples in a collaborative test of a new method. Ideally, a collaborative test involves several pairs of samples that span the range of analyte concentrations for which we plan to use the method. In doing so, we evaluate the method for constant sources of error and establish the expected relative standard deviation and bias for different levels of analyte.

Collaborative Testing and Analysis of Variance

In a two-sample collaborative test we ask each analyst to perform a single determination on each of two separate samples. After reducing the data to a set of differences, D, and a set of totals, T, each characterized by a mean and a standard deviation, we extract values for the random errors that affect precision and the systematic differences between then analysts. The calculations are relatively simple and straightforward.

An alternative approach to a collaborative test is to have each analyst perform several replicate determinations on a single, common sample. This approach generates a separate data set for each analyst and requires a different statistical treatment to provide estimates for \(\sigma_\text{rand}\) and for \(\sigma_\text{syst}\).

There are several statistical methods for comparing three or more sets of data. The approach we consider in this section is an analysis of variance (ANOVA). In its simplest form, a one-way ANOVA allows us to explore the importance of a single variable—the identity of the analyst is one example—on the total variance. To evaluate the importance of this variable, we compare its variance to the variance explained by indeterminate sources of error.

We first introduced variance in Chapter 4 as one measure of a data set’s spread around its central tendency. In the context of an analysis of variance, it is useful for us to understand that variance is simply a ratio of two terms: a sum of squares for the differences between individual values and their mean, and the degrees of freedom. For example, the variance, s2, of a data set consisting of n measurements is

\[s^{2}=\frac{\sum_{i=1}^{n}\left(X_{i}-\overline{X}\right)^{2}}{n-1} \nonumber\]

where Xi is the value of a single measurement and \(\overline{X}\) is the mean. The ability to partition the variance into a sum of squares and the degrees of freedom greatly simplifies the calculations in a one-way ANOVA.

Let’s use a simple example to develop the rationale behind a one-way ANOVA calculation. The data in Table \(\PageIndex{1}\) are from four analysts, each asked to determine the purity of a single pharmaceutical preparation of sulfanilamide. Each column in Table \(\PageIndex{1}\) provides the results for an individual analyst. To help us keep track of this data, we will represent each result as Xij, where i identifies the analyst and j indicates the replicate. For example, X3,5 is the fifth replicate for the third analyst, or 94.24%.

The data in Table \(\PageIndex{1}\) show variability in the results obtained by each analyst and in the difference in the results between the analysts. There are two sources for this variability: indeterminate errors associated with the analytical procedure that are experienced equally by each analyst, and systematic or determinate errors introduced by the individual analysts.

One way to view the data in Table \(\PageIndex{1}\) is to treat it as a single large sample, characterized by a global mean and a global variance

\[\overline{\overline{X}}=\frac{\sum_{i=1}^{h} \sum_{i=1}^{n} X_{ij}}{N} \label{14.5}\]

\[\overline{\overline{s^{2}}}=\frac{\sum_{i=1}^{h} \sum_{j=1}^{n_{i}}\left(X_{i j}-\overline{\overline{X}}\right)^{2}}{N-1} \label{14.6}\]

where h is the number of samples (in this case the number of analysts), ni is the number of replicates for the ith sample (in this case the ith analyst), and N is the total number of data points (in this case 22). The global variance—which includes all sources of variability that affect the data—provides an estimate of the combined influence of indeterminate errors and systematic errors.

A second way to work with the data in Table \(\PageIndex{1}\) is to treat the results for each analyst separately. If we assume that each analyst experiences the same indeterminate errors, then the variance, s2, for each analyst provides a separate estimate of \(\sigma_\text{rand}^2\). To pool these individual variances, which we call the within-sample variance, \(s_w^2\), we square the difference between each replicate and its corresponding mean, add them up, and divide by the degrees of freedom.

\[\sigma_{\mathrm{rnd}}^{2} \approx s_{w}^{2}=\frac{\sum_{i=1}^{h} \sum_{j=1}^{n_{i}}\left(X_{i j}-\overline{X}_{i}\right)^{2}}{N-h} \label{14.7}\]

Carefully compare our description of equation \ref{14.7} to the equation itself. It is important that you understand why equation \ref{14.7} provides our best estimate of the indeterminate errors that affect the data in Table \(\PageIndex{1}\). Note that we lose one degree of freedom for each of the h means included in the calculation.

To estimate the systematic errors, \(\sigma_\text{syst}^2\), that affect the results in Table \(\PageIndex{1}\) we need to consider the differences between the analysts. The variance of the individual mean values about the global mean, which we call the between-sample variance, \(s_b^2\), is

\[s_{b}^{2}=\frac{\sum_{i=1}^{h} n_{i}\left(\overline{X}_{i}-\overline{\overline{X}}\right)^{2}}{h-1} \label{14.8}\]

where we lose one degree of freedom for the global mean. The between-sample variance includes contributions from both indeterminate errors and systematic errrors; thus

\[s_b^2 = \sigma_\text{rand}^2 + \overline{n}\sigma_\text{syst}^2 \label{14.9}\]

where \(\overline{n}\) is the average number of replicates per analyst.

\[\overline{n}=\frac{\sum_{i=1}^{h} n_{i}}{h} \nonumber\]

Note the similarity between equation \ref{14.9} and equation \ref{14.2}. The analysis of the data in a two-sample plot is the same as a one-way analysis of variance with h = 2.

In a one-way ANOVA of the data in Table \(\PageIndex{1}\) we make the null hypothesis that there are no significant differences between the mean values for the analysts. The alternative hypothesis is that at least one of the mean values is significantly different. If the null hypothesis is true, then \(\sigma_\text{syst}^2\) must be zero and \(s_w^2\) and \(s_b^2\) should have similar values. If \(s_b^2\) is significantly greater than \(s_w^2\) , then \(\sigma_\text{syst}^2\) is greater than zero. In this case we must accept the alternative hypothesis that there is a significant difference between the means for the analysts. The test statistic is the F-ratio

\[F_{\mathrm{exp}}=\frac{s_{b}^{2}}{s_{w}^{2}} \nonumber\]

which is compared to the critical value F(a, h – 1, N – h). This is a one-tailed significance test because we are interested only in whether \(s_b^2\) is significantly greater than \(s_w^2\).

Both \(s_b^2\) and \(s_w^2\) are easy to calculate for small data sets. For larger data sets, calculating \(s_w^2\) is tedious. We can simplify the calculations by taking advantage of the relationship between the sum-of-squares terms for the global variance (equation \ref{14.6}), the within-sample variance (equation ref{14.7}), and the between-sample variance (equation \ref{14.8}). We can split the numerator of equation \ref{14.6}, which is the total sum-of-squares, SSt, into two terms

\[SS_t = SS_w + SS_b \nonumber\]

where SSw is the sum-of-squares for the within-sample variance and SSb is the sum-of-squares for the between-sample variance. Calculating SSt and SSb gives SSw by difference. Finally, dividing SSw and SSb by their respective degrees of freedom gives \(s_w^2\) and \(s_b^2\). Table \(\PageIndex{2}\) summarizes the equations for a one-way ANOVA calculation. Example \(\PageIndex{3}\) walks you through the calculations, using the data in Table \(\PageIndex{1}\).

The data in Table \(\PageIndex{1}\) are from four analysts, each asked to determine the purity of a single pharmaceutical preparation of sulfanilamide. Determine if the difference in their results is significant at \(\alpha = 0.05\). If such a difference exists, estimate values for \(\sigma_\text{rand}^2\) and \(\sigma_\text{syst}\).

Solution

To begin we calculate the global mean (equation \ref{14.5}) and the global variance (equation \ref{14.6}) for the pooled data, and the means for each analyst; these values are summarized here.

\[\overline{\overline{X}} = 95.87 \quad \quad \overline{\overline{s^2}} = 5.506 \nonumber\]

\[\overline{X}_A = 94.56 \quad \overline{X}_B = 99.88 \quad \overline{X}_C = 94.77 \quad \overline{X}_D = 94.75 \nonumber\]

Using these values we calculate the total sum of squares

\[S S_{t}=\overline{\overline{s^{2}}}(N-1)=(5.506)(22-1)=115.63 \nonumber\]

the between sample sum of squares

\[S S_{b}=\sum_{i=1}^{h} n_{i}\left(\overline{X}_{i}-\overline{\overline{X}}\right)^{2}=6(94.56-95.87)^{2}+5(99.88-95.87)^{2}+5(94.77-95.87)^{2}+6(94.75-95.87)^{2}=104.27 \nonumber\]

and the within sample sum of squares

\[S S_{w}=S S_{t}-S S_{b}=115.63-104.27=11.36 \nonumber\]

The remainder of the necessary calculations are summarized in the following table.

| source | sum of squares | degrees of freedom | variance |

|---|---|---|---|

| between samples | 104.27 | \(h - 1 = 4 - 1 = 3\) | 34.76 |

| within samples | 11.36 | \(N - h = 22 - 4 = 18\) | 0.631 |

Comparing the variances we find that

\[F_{\mathrm{exp}}=\frac{s_{b}^{2}}{s_{w}^{2}}=\frac{34.76}{0.631}=55.09 \nonumber\]

Because Fexp is greater than F(0.05, 3, 18), which is 3.16, we reject the null hypothesis and accept the alternative hypothesis that the work of at least one analyst is significantly different from the remaining analysts. Our best estimate of the within sample variance is

\[\sigma_{\text {rand}}^{2} \approx s_{w}^{2}=0.631 \nonumber\]

and our best estimate of the between sample variance is

\[\sigma_\text{syst}^2 = \frac {s_b^2 - s_w^2} {\overline{n}} = \frac {35.76 - 0.631} {22/4} = 6.205 \nonumber\]

In this example the variance due to systematic differences between the analysts is almost an order of magnitude greater than the variance due to the method’s precision.

Having demonstrated that there is significant difference between the analysts, we can use a modified version of the t-test—known as Fisher’s least significant difference—to determine the source of the difference. The test statistic for comparing two mean values is the t-test from Chapter 4, except we replace the pooled standard deviation, spool, by the square root of the within-sample variance from the analysis of variance.

\[t_{\mathrm{exp}}=\frac{\left|\overline{X}_{1}-\overline{X}_{2}\right|}{\sqrt{s_{w}^{2}}} \times \sqrt{\frac{n_{1} n_{2}}{n_{1}+n_{2}}} \label{14.10}\]

We compare texp to its critical value \(t(\alpha, \nu)\) using the same significance level as the ANOVA calculation. The degrees of freedom are the same as that for the within sample variance. Since we are interested in whether the larger of the two means is significantly greater than the other mean, the value of \(t(\alpha, \nu)\) is that for a one-tailed significance test.

You might ask why we bother with the analysis of variance if we are planning to use a t-test to compare pairs of analysts. Each t-test carries a probability, \(\alpha\), of claiming that a difference is significant even though it is not (a type 1 error). If we set \(\alpha\) to 0.05 and complete six t-tests, the probability of a type 1 error increases to 0.265. Knowing that there is a significant difference within a data set—what we gain from the analysis of variance—protects the t-test.

In Example \(\PageIndex{3}\) we showed that there is a significant difference between the work of the four analysts in Table \(\PageIndex{1}\). Determine the source of this significant difference.

Solution

Individual comparisons using Fisher’s least significant difference test are based on the following null hypothesis and the appropriate one-tailed alternative hypothesis.

\[H_0: \overline{X}_1 = \overline{X}_2 \quad \quad H_A: \overline{X}_1 > \overline{X}_2 \quad \text{or} \quad H_A: \overline{X}_1 < \overline{X}_2 \nonumber\]

Using equation \ref{14.10} we calculate values of texp for each possible comparison and compare them to the one-tailed critical value of 1.73 for t(0.05, 18). For example, texp for analysts A and B is

\[\left(t_{exp}\right)_{AB}=\frac{|94.56-99.88|}{\sqrt{0.631}} \times \sqrt{\frac{6 \times 5}{6+5}}=11.06 \nonumber\]

Because (texp)AB is greater than t(0.05,18) we reject the null hypothesis and accept the alternative hypothesis that the results for analyst B are significantly greater than those for analyst A. Continuing with the other pairs it is easy to show that (texp)AC is 0.437, (texp)AD is 0.414, (texp)BC is 10.17, (texp)BD is 10.67, and (texp)CD is 0.04.. Collectively, these results suggest that there is a significant systematic difference between the work of analyst B and the work of the other analysts. There is, of course no way to decide whether any of the four analysts has done accurate work.

We have evidence that analyst B’s result is significantly different than the results for analysts A, C, and D, and we have no evidence that there is any significant difference between the results of analysts A, C, and D. We do not know if analyst B’s results are accurate, or if the results of analysts A, C, and D are accurate. In fact, it is possible that none of the results in Table \(\PageIndex{1}\) are accurate.

We can extend an analysis of variance to systems that involve more than a single variable. For example, we can use a two-way ANOVA to determine the effect on an analytical method of both the analyst and the instrumentation. The treatment of multivariate ANOVA is beyond the scope of this text, but is covered in several of the texts listed in this chapter’s additional resources.

What is a Reasonable Result for a Collaborative Study?

Collaborative testing provides us with a method for estimating the variability (or reproducibility) between analysts in different labs. If the variability is significant, we can determine what portion is due to indeterminate method errors, \(\sigma_\text{rand}^2\), and what portion is due to systematic differences between the analysts, \(\sigma_\text{syst}^2\). What is left unanswered is the following important question: What is a reasonable value for a method’s reproducibility?

An analysis of nearly 10 000 collaborative studies suggests that a reasonable estimate for a method’s reproducibility is

\[R=2^{(1-0.5 \log C)} \label{14.11}\]

where R is the percent relative standard deviation for the results included in the collaborative study and C is the fractional amount of analyte in the sample on a weight-to-weight basis. Equation \ref{14.1} is thought to be independent of the type of analyte, the type of matrix, and the method of analysis. For example, when a sample in a collaborative study contains 1 microgram of analyte per gram of sample, C is 10–6 the estimated relative standard deviation is

\[R=2^{\left(1-0.5 \log 10^{-6}\right)}=16 \% \nonumber\]

What is the estimated relative standard deviation for the results of a collaborative study when the sample is pure analyte (100% w/w analyte)? Repeat for the case where the analyte’s concentration is 0.1% w/w.

Solution

When the sample is 100% w/w analyte (C = 1) the estimated relative standard deviation is

\[R=2^{(1-0.5 \log 1)}=2 \% \nonumber\]

We expect that approximately two-thirds of the participants in the collaborative study (\(\pm 1 \sigma\)) will report the analyte’s concentration within the range of 98% w/w to 102% w/w. If the analyte’s concentration is 0.1% w/w (C = 0.001), the estimated relative standard deviation is

\[R=2^{(1-0.5 \log 0.01)}=5.7 \% \nonumber\]

and we expect that approximately two-thirds of the analysts will report the analyte’s concentration within the range of 0.094% w/w to 0.106% w/w.

Of course, equation \ref{14.11} only estimates the expected relative standard. If the method’s relative standard deviation falls with a range of one-half to twice the estimated value, then it is acceptable for use by analysts in different laboratories. The percent relative standard deviation for a single analyst should be one-half to two-thirds of that for the variability between analysts.

For details on equation \ref{14.11}, see (a) Horwitz, W. Anal. Chem. 1982, 54, 67A–76A; (b) Hall, P.; Selinger, B. Anal. Chem. 1989, 61, 1465–1466; (c) Albert, R.; Horwitz, W. Anal. Chem. 1997, 69, 789–790, (d) “The Amazing Horwitz Function,” AMC Technical Brief 17, July 2004; (e) Lingser, T. P. J. Trends Anal. Chem. 2006, 25, 1125. For a discussion of the equation's limitations, see Linsinger, T. P. J.; Josephs, R. D. “Limitations of the Application of the Horwitz Equation,” Trends Anal. Chem. 2006, 25, 1125–1130, as well as a rebuttal (Thompson, M. “Limitations of the Application of the Horwitz Equation: A Rebuttal,” Trends Anal. Chem. 2007, 26, 659–661) and response to the rebuttal (Linsinger, T. P. J.; Josephs, R. D. “Reply to Professor Michael Thompson’s Rebuttal,” Trends Anal. Chem. 2007, 26, 662–663.